tl;dr - I wrote a small library for CountMin sketches in Haskell (you can find the code on GitLab, @ mrman/haskell-countmin). I then try to optimize it and fail, but throw in an example of doctest usage, CI with Gitlab, and how to host the generated Haddock on GitLab Pages.

In some work and exploration earlier this year I found some time to read more about sketches – probablistic data structures usually used for summarizing data. There are many interesting concepts and algorithms that power the higher-level functionality we’re seeing break out these days – bloom filters, perfect hashing, etc. By absolute chance, while talking about something completely different I got a chance to talk to the creator of Caffeine – and we got onto the subject of caching (LRU, etc), and I got to read his paper on TinyLFU and the associated paper on adaptive software cache management. While it’s fun to simply read papers (granted they’re interesting enough), I always want to try and implement some of the stuff I read about to make sure I really understand it. Haskell is one of my favorite languages as of late, and I did a quick search for any library that has implemented CountMin, and I couldn’t find one, so I figured I should write one!

Some readers might notice that I actually have an ongoing Haskell development multi-series blog post, that is sorely missing a part 4 (it’s been over 3 months since I wrote part 3) – that post is partially written but is so long that I’m going to sidestep it to make this post. I have no idae when I’m going to actually get to finish that blog series but I can say for certain that day is not today (sorry).

But getting back to the topic at hand, I want to produce a reasonably robust, best-practice packed Haskell library to do CountMin sketching called countmin.

In my own words, the CountMin sketch is a way to very quickly keep counts of some relatively uniquely identifiable piece of data, but with constant space. So for example, If you wanted to keep track of which users clicked on a given button on some interface, but you have millions of users, it’s very expensive space wise to do that with a regular hash, since every user ID would be a given key. It’s very similar to the bloom filter but slightly different in use.

The CountMin sketch is one of the simplest sketches conceptually – given that the keys are distinct/hashable, you can use a few hash functions to hash the keys (user IDs in the example above) and increment counters for those. This means that if a user ID was “123456789”, you would hash that value a few times (once for every hashing function), then increment a counter based on the hashed value. I found a blog post that offers a pretty good introduction.

So what are the distinct benefits of a sketch like CountMin?

What are some downsides?

So we give up precision for much less time/sace use, and easily parallelizable calculation. But don’t take my word for it, check out the original paper and a summary paper which is a much easier read:

There are a bunch of other implmentations of the CountMin sketch – here are a few:

You might note that most of these are in Rust – my other favorite language currently.

Since half of this project is doing the CountMin work and the other half is writing a good axiomatic Haskell library, I’ll take some time to go over the considerations I thought about before undertaking the project.

I like to think about how I can use the power that Haskell affords before starting any project – and while it’s possible to go crazy with too much abstraction (and trying to use too many tricks), I find it’s generally necessary to at least plan out a good interface/framework. some things I thought about:

We want non-cryptographic hashes (AKA fast) so that operations can be as fast as possible. As is tradition my first thought was to check what people thought on StackOverflow, which lead me to the MurMurHash on hackage. With my experience hashing for security/password storage, I know that certain hash functions should not be used for security due to how fast they can be performed, and I remembered that SHA1 is one of them, so it might make a good candidate. As far as I understand it isn’t necessarily designed for speed, but is fast on modern processors. I also ran into another useful SO post.

All in all I found the following hashes that look promising for use:

Note the libraries are all pure haskell libs – I purposefully avoided any C FFI based implementations just to keep things nice and easy, both for me and for those that might use the library. While investigating the difference between murmur2 and murmur3 I found a very informative wiki page.

Along with configuring which hash functions to use, there’s probably a bit of configuration that necessarily needs to go into hashes – things like seed values which can be altered should arguably be available to the user who instantiates a CountMin sketch to be used. to seed values can be alteredsome hashes have seeds, Maybe it should be possible to configure which hash functions to use? Use more hash functions to increase accuracy (by lowering chance of collisions)

Initially I thought it would be pretty cool to implement this at the type level via DataKinds, whereby I could have a type like CountMin '[SHA1 MurMur MD5] floating around my code. I’m opting against this for now and pursuing a simpler approach just to avoid complexity for myself and others using the library (if someone decides to open the library up, I don’t want to force them to understand kinds, singletons, and a bunch of other concepts). I think the simplest way to go about it will likely be with simple typeclasses & possible a bag-of-functions style.

There are a few development aids I think would be good to show off as I develop this tiny library.

ghcidWhile no one except me will be able to confirm that I used it during development, ghcid seems like an excellent tool and I’m looking forward to using it for this project. On othe projects I simply run ghci directly (or worst case a full stack build) for periodic checks that I haven’t done anything the compiler wouldn’t approve of, but ghcid should reduce that feedback loop dramatically.

Strictness is a stumbling block for many haskell programs and while I don’t know that this code will benefit a ton from it, I’ll be using the strictness operator liberally. This basically means most data declarations will make use of the ! operator (which denotes strictness).

doctestGood documentation is key, and doctest (along with doctest-discover improves on this by allowing placing tests

I’ll also be periodically generating the haddock documentation (with stack)as well.

We’re going to use FPComplete’s RIO (you can read about it on their site). There are a few other choices I could have gone with, for example the prolific Stephen Diehl mentioned Protolude in his lengthy compendium of Haskell tips. I’ve heard RIO mentioned at a few recorded FPComplete sessions on various subjects and I’ve been wanting to give it a try, so I’m using that for now. I haven’t put a large amount of thought into which is the best to use, but protolude is somewhat smaller and is mentioned as a “place to start” rather than an endpoint for custom preludes (and FPComplete seems to work very hard in keeping their methods/software up to date), so I think the choice to go with rio isn’t a bad one.

As the end result will be a re-usable library, and the problem domain is pretty mathematical, this project should lend itself very well to testing. I think it’d be a decent place to try to use just a dash of generative testing, either via the venerable quickcheck library or the more recent hotness hedgehog. I’m not 100% sure this will fit but we’ll see, at the very least we’ll have to randomize some inputs to store in the sketch.

I’ll also be making some attempts to improve the performance of the implementation I develop, and trying out some of the tools available in the haskell ecosystem for measuring performance and memory usage of programs. I don’t know how deep I’ll be able to get or how much the implementation will improve, but it should at least be somewhat interesting for others to watch (read about) someone going through it.

Here are some notes on the implementation that I arrived at – while I didn’t do a large amount of up-front design in my own head (more starting with the general idea of typeclasses & some bag-of-functions approach), this naive implementation didn’t turn out too horribly.

CountMin typeclassThe central piece of the functionality is the CountMin typeclass:

-- | Typeclass that captures minimal set of functionality for a CountMin sketch implementation

class CountMin sketch where

-- | Increment the count of a given key

increment :: sketch -> ByteString -> sketch

-- | Helper for incrementing with a regular string

incrementStr :: sketch -> String -> sketch

incrementStr s = increment s . encodeUtf8 . T.pack

-- | Get the count of items seen for a given key

count :: sketch -> ByteString -> Integer

-- | Retrieve the hash functions in use

hashFunctions :: sketch -> [HashMeta]

It’s quite a simple type class – if some type sketch wants to belong to the class of types that can support CountMin behaviour, then it needs to implement at least increment, count and hashFunctions. hashFunctions is less than strictly necessary but I felt it important to allow for a little introspection during practical use.

But what is a HashMeta? Well it’s the class I’ve build to represent/hold a hashing mechanism:

-- | Alias for hash functions that are usable in a CountMin sketch

type BSHashFn = ByteString -> Integer

-- | For use internally in CountMinSketch implementations

data HashMeta = HM { name :: !Text

, fn :: !BSHashFn

}

The next thing I did was start writing out the implementation of a type that would be able to inhabit the CountMin typeclass. The first implmentation looked like this:

-- The sketch

data Sketch = Sketch { hashFns :: [HashMeta]

-- TODO: use a two level vector by HashMeta name instead?

, values :: VB.Vector (HashMap Integer Integer)

}

Sketch uses a list of RIO’s boxed Vectors – not the most efficient implementation but not a bad one to start (obviously a HashMap String (HashMap Integer Integer) is a more obviously efficient choice). This data structure was pretty reasonable for getting started (we’ll get around to optimizations later). The implementation is a bit clunky, but it works:

-- | Implement CountMin for sketch

instance CountMin Sketch where

increment s key = Sketch (hashFns s) updatedValues

where

functions = VB.fromList (fn <$> hashFns s)

-- Insert update for hashed value, using the function with the appropriate index

update idx hmap = case functions VB.!? idx of

Nothing -> hmap -- should never get here, since (length values) == (length hashFns)

Just f -> HM.insertWith (+) (f key) 1 hmap

updatedValues = VB.imap update (values s)

count s key = getMin functions []

where

functions = fn <$> hashFns s

-- Recursive function to do all hashing and retrieve min counts

-- TODO: Should be possible to boil this down to fold + logic

getMin [] acc = fromMaybe 0 (L.minimumMaybe acc)

getMin (f:xs) acc = getMin xs $ acc ++ [v]

where

hash = f key

idx = length acc

-- Get the hash map (if present) for the current hash fn by index

v = case values s VB.!? idx of

Nothing -> 0

-- Look up the hashed value in the map that was provided

Just hmap -> fromMaybe 0 (HM.lookup hash hmap)

hashFunctions :: Sketch -> [HashMeta]

hashFunctions = hashFns

And of course, we’ll need to give users a small constructor:

-- | Create a sketch with the given functions and an empty vector of maps for the hash outputs

buildSketch :: [HashMeta] -> Maybe Sketch

buildSketch [] = Nothing

buildSketch fns = Just $ Sketch fns $ VB.replicate (length fns) HM.empty

At this point, all of this works theoretically, but I don’t have any HashMetas to put in that constructor – time to write some shims for the popular hashing function libraries I mentioned earlier.

The first hash implementation I started with was SHA1 – all I needed to do was build a HashMeta value out the functionality that SHA package provided (in it’s entirety):

{-# LANGUAGE OverloadedStrings #-}

{-# LANGUAGE NoImplicitPrelude #-}

-- | A SHA1 hash implementation

module Hashes.SHA1 (hSHA1) where

import RIO

import RIO.ByteString.Lazy (fromStrict)

import Data.Digest.Pure.SHA (sha1, integerDigest)

import Types (HashMeta(..))

hSHA1 :: HashMeta

hSHA1 = HM "SHA1" (integerDigest . sha1 . fromStrict)

Pretty easy! 99.9% of the hard work is being done by these libraries, and I’m just picking hashes that are considered fast and slightly repurposing them.

While writing the main logic I added an as-of-yet unused typeclass for sketches that could be dynamically re-configured:

Types.hs:

-- | Dynamic sketches allow for re-configuration of hash functions at any time

class CountMin sketch => DynamicCountMin sketch where

-- | Add a hash function for use with sketch

addHashMeta :: sketch -> HashMeta -> sketch

-- | Remove a hash function for use with the sketch

removeHashMeta :: sketch -> HashMeta -> sketch

Here’s what the naive implementation looks like:

Types.hs:

-- | EQ instance for HashMeta that does nothing more than hash names

-- ideally this should also check initialization vectors/other parameters if a hash allows it

instance Eq HashMeta where

a == b = (name a) == (name b)

Lib.hs:

instance DynamicCountMin Sketch where

addHashMeta s@(Sketch oldFns _) newFn = s { hashFns=oldFns <> [newFn] }

removeHashMeta s@(Sketch oldFns _) hashToRemove = s { hashFns=filter (hashToRemove==) oldFns }

Note that I needed to add the Eq instance for HashMeta manually since BSHashFn (AKA B.ByteString -> Integer) does not have a automatically derivableEq instance. This is pretty fragile but it works for now.

Why is this the “naive” implementation? While it’s pretty easy to simply modify the internal list of HashMetas, there’s a small wrinkle – when increment is called on a value that has been seen before (i.e. has an entry in one of the hash’s Maps), we have to make sure to use this minimum to initialize the values for the new hashes (otherwise 1 would get input and become the new minimum. To illustrate, consider this series of events:

Sketch with SHA1 as the only HashMeta is created"test" is incremented (we’ll say "test" hashes to x), and the Map for SHA1 looks like {x: 1}"test" is incremented (we’ll say "test" hashes to x1 under MD5), the Map for MD5 looks like {x1: 1} and SHA1 looks like {x: 2}count "test" is called and the minimum observed value is 1, so an incorrect value is chosenThe discrepancy doesn’t seem very large since the example is small, but CountMin is never supposed to be lower than the actual value (theoretically), and of course, this “resetting” behavior would work the same exact way if the previous could was 99999. Luckily the fix for this is pretty easy – we just need to be record the minimum that we see from existing hashes before we deal with new ones. Since we’re using a list of Maps (and we’ll assume new maps are added to the right end of the list), all we need to do is keep track of the minimum value – we must run through all hashes with possible values before we get to the new ones that are uninitialized.

Here’s what my first crack at the code looks like:

-- | Implement CountMin for sketch

instance CountMin Sketch where

increment s key = Sketch (hashFns s) updatedValues

where

functions = VB.fromList $ fn <$> hashFns s

maps = values s

-- Fold over the list of functions, keeping track of minimums seen from previous functions' map data,

-- producing an entirely new vector of modified hashmaps (very inefficient)

(_, updatedValues) = VB.ifoldl' updateMinAndVector (-1, VB.empty) functions

updateMinAndVector (prevMin, newVector) idx f = case maps VB.!? idx of

-- Should never reach this case, since # of fns is the same as # of hashmaps (one per hashmap)

Nothing -> error "hashmap count does not match hash function count"

-- Every case

Just oldMap -> let hashedKey = f key

in case HM.lookup hashedKey oldMap of

-- No count in the hashmap for this key yet

Nothing -> if prevMin == -1

-- No entry in map, prevMin @ orig value -1 means value must be new

-- This relies on the fact that older functions (which should have values)

-- are all processed *before* newer ones that won't have values

then (0, VB.snoc newVector (HM.insert hashedKey 0 oldMap))

else (prevMin, VB.snoc newVector (HM.insert hashedKey prevMin oldMap))

-- A count is already being maintained, so use minimum of previous min and count going forward

-- (if some hash function has a lower count we should use that when we get to new functions)

Just existingCount -> ( if existingCount < prevMin then existingCount else prevMin

, VB.snoc newVector (HM.insert hashedKey (existingCount+1) oldMap)

)

We can already see some design choices coming back to bite me:

VB.Vector (HashMap Integer Integer)? That made this code so much harderVB.Vector produces a case branch that should never occur, this is a pretty stinky code smell (at the very least I could use tuples)Design warts aside, after getting this to compile, I was suspicious so started writing a basic test:

describe "DynamicCountMin Sketch" $ do

it "can add hash function (SHA1 + FarmHash)" $ \_ -> (pure (buildSketch [hSHA1]))

>>= pure . fromJust

>>= \onlySHA -> pure (addHashMeta onlySHA hFarmHash)

>>= \updatedSketch -> length (hashFunctions updatedSketch) `shouldBe` 2

Well that test passed, but the changes to increment broke a bunch of other tests:

Lib

CountMin Sketch

cannot be created with no hashing functions

CountMin Sketch w/ SHA1

can be created with only sha1

generates a count properly FAILED [1]

Looks like I’ve managed to already get my first regression! Good thing I added these tests earlier rather than later. So there are actually two bugs in the code above, can you find them? Let me save you some trouble, here’s the first:

Just oldMap -> let hashedKey = f key

in case HM.lookup hashedKey oldMap of

-- No count in the hashmap for this key yet

Nothing -> if prevMin == -1

-- No entry in map, prevMin @ orig value -1 means value must be new

-- This relies on the fact that older functions (which should have values)

-- are all processed *before* newer ones that won't have values

then (0, VB.snoc newVector (HM.insert hashedKey 0 oldMap))

The problem there is that I’m not acknowledging the fact that the hashedKey has been witnessed. THe prevMin shouldn’t be 0 (and we shouldn’t be writing 0 into the map), it should be 1 to represent the fact that we hashed and got the key (with the current hash function f). Here’s the fixed line (with additional comment):

Nothing -> if prevMin == (-1)

-- No entry in map, prevMin @ orig value -1 means value must be new

-- This relies on the fact that older functions (which should have values)

-- are all processed *before* newer ones that won't have values

-- NOTE: prevMin is set to 1 because we *know* at least one has been seen now, for the current hash fn

then (1, VB.snoc newVector (HM.insert hashedKey 1 oldMap))

The second bug is in the logic for the case where there is an existing count – we should have been treating the prevMin == -1 case differently there as well, since if prevMin is -1, we should just be taking the existing count (no existingCount will ever be less than -1, and we want to replace prevMin with the lowest valid count we see):

-- A count is already being maintained, so use minimum of previous min and count going forward

-- (if some hash function has a lower count we should use that when we get to new functions)

Just existingCount -> ( if prevMin == -1 then existingCount else min2 prevMin existingCount

, VB.snoc newVector (HM.insert hashedKey (existingCount+1) oldMap)

)

After ironing these issues out I added a one more functional test:

it "generates a count properly (SHA1 + FarmHash)" $ \_ -> (pure (buildSketch [hSHA1]))

>>= pure . fromJust

>>= \onlySHA -> pure (addHashMeta onlySHA hFarmHash)

>>= \emptySketch -> pure (foldl' incrementStr emptySketch threeHits)

-- Ensure the sketch produced *at least* 3

>>= \s -> (count s "test" >= 3)`shouldBe` True

And of course, we exposed another bug! The case that I thought “would never happen” (tm) has immediately occurred:

DynamicCountMin Sketch

can add hash function (SHA1 + FarmHash)

generates a count properly (SHA1 + FarmHash) FAILED [1]

Failures:

src/Lib.hs:44:32:

1) Lib, DynamicCountMin Sketch, generates a count properly (SHA1 + FarmHash)

uncaught exception: ErrorCall

hashmap count does not match hash function count

CallStack (from HasCallStack):

error, called at src/Lib.hs:44:32 in countmin-0.1.0.0-6KtY4JbyfDX4fc2vVRFjd4:Lib

Well, it looks like I forgot to add the extra data (looking back at the code) – remember when I mentioned the danger of having to manually maintain this invariant (noting that I should have used a list of tuples or something) – it’s already come back to bite me, and I haven’t even left the launch pad yet. Anyhow, here are the fixes to the implementation of DynamicCountMin Sketch:

instance DynamicCountMin Sketch where

addHashMeta s@(Sketch oldFns oldValues) newFn = s { hashFns=oldFns <> [newFn]

, values=VB.snoc oldValues HM.empty

}

removeHashMeta s@(Sketch oldFns oldValues) hashToRemove = case L.elemIndex hashToRemove oldFns of

Nothing -> s

Just idx -> s { hashFns=filter (hashToRemove==) oldFns

, values=VB.take idx oldValues <> VB.drop (idx+1) oldValues

}

In this code, I actually update values along with hashFns in lock-step (again, it’s better that I don’t have to do this at all, but we’ll get to that later). With this I’ve got the passing tests I want:

Hashes.FarmHash

FarmHash hash function

produces the expected integer for 'count-min'

Hashes.MD5

MD5 hash function

produces the expected integer for 'count-min'

Hashes.SHA1

SHA1 hash function

produces the expected integer for 'count-min'

Lib

CountMin Sketch

cannot be created with no hashing functions

CountMin Sketch w/ SHA1

can be created with only sha1

generates a count properly

CountMin Sketch w/ MD5

can be created with only md5

generates a count properly

CountMin Sketch w/ FarmHash

can be created with only farmhash

generates a count properly

DynamicCountMin Sketch

can add hash function (SHA1 + FarmHash)

generates a count properly (SHA1 + FarmHash)

Finished in 0.0015 seconds

12 examples, 0 failures

I’ve only covered a few tests out of an essentially infinite test space, but at the very least I’ve got some indication that this works. Paradoxically, nothing builds confidence like finding bugs, fixing, and adding tests to prevent regressions. Somewhat nonsensically, we’re going to leave the design warts that made this code hard to implement in place, and leave our refactoring/improvement efforts after we start benchmarking – it will be nicer to backdrop code and efficiency improvements against a benchmark rather than just unit tests.

The code we’ve written up until now is pretty darn naive – the code is ugly and unoptimized. I expect potential users who want to use this library in some piece of performance-critical code to efficiently count hashable instances (whatever they represent), would at least like some speed/memory usage analysis. A few resources on benchmarking/optimization of Haskell that I used to get started:

Cabal offers the ability to easily configure & run benchmarks, which I’m going to be making use of (I’ll be running the benchmarks with stack bench). I wrote a simple benchmark that builds a random list of values and inserts a random amount of them in until we reach some amount, and have set it up to test with criterion, the go-to benchmarking library in the Haskell ecosystem (as far as I can tell):

package.yaml:

benchmarks:

countmin-bench:

main: Bench.hs

source-dirs: test

dependencies:

- countmin

- criterion

- QuickCheck

test/BenchData.hs:

module BenchData (genRandomByteStrings

, emails

) where

import RIO

import Test.QuickCheck.Arbitrary (arbitrary)

import Test.QuickCheck.Gen (Gen, generate, choose, vectorOf)

import Test.QuickCheck.Modifiers (PrintableString(..))

import qualified RIO.ByteString as BS

import qualified RIO.HashMap as HM

import qualified RIO.Text as T

-- Generated by https://www.randomlists.com/email-addresses?qty=20

emails :: [String]

emails = [ "schwaang@comcast.net"

, "stecoop@mac.com"

, "smcnabb@mac.com"

, "khris@aol.com"

, "corrada@optonline.net"

, "jwarren@live.com"

, "fraterk@sbcglobal.net"

, "eidac@mac.com"

, "jsnover@aol.com"

, "tedrlord@me.com"

, "ivoibs@msn.com"

, "sumdumass@mac.com"

, "ahuillet@yahoo.com"

, "carmena@hotmail.com"

, "kidehen@hotmail.com"

, "jdhildeb@live.com"

, "benanov@gmail.com"

, "rasca@yahoo.com"

, "portele@live.com"

, "torgox@gmail.com"

]

-- | Generate a printable string

genRandomString :: Gen PrintableString

genRandomString = arbitrary

-- | convert a PrintableString to a ByteString

toUTF8 :: PrintableString -> ByteString

toUTF8 = encodeUtf8 . T.pack . getPrintableString

-- | Generate a list of printable strings of a given size

genRandomStringListOfSize :: Int -> IO [ByteString]

genRandomStringListOfSize size = fmap toUTF8 <$> generate (vectorOf size genRandomString)

-- | Choose from 1 to a given n, inclusive

chooseFromOneTo :: Int -> IO Int

chooseFromOneTo n = generate (choose (1, n))

-- | Generate and combine randomized bytestrings, keeping count of which bytestrings had which counts

genRandomByteStrings :: Int -> IO ([BS.ByteString], HM.HashMap ByteString Int)

genRandomByteStrings total = randomStrings' 0 ([], HM.empty)

where

randomStrings' count acc@(l, m)

-- | If we've generated the required number, we're done

| count == total = pure acc

-- | Generate a random amount of a random value and insert

| otherwise = chooseFromOneTo (total - count)

>>= genRandomStringListOfSize

>>= \values -> randomStrings' (count + length values) (l ++ values, m)

test/Bench.hs:

import RIO

import Criterion.Main (defaultMain, bgroup, env, nf, bench)

import Data.Maybe (fromJust)

import BenchData (genRandomStrings)

import Hashes.FarmHash (hFarmHash)

import Hashes.MD5 (hMD5)

import Hashes.SHA1 (hSHA1)

import Lib (buildSketch, Sketch, CountMin(..))

-- | Default sketch, w/ all available hask fns used

defaultSketch :: Sketch

defaultSketch = fromJust $ buildSketch [hMD5, hFarmHash, hSHA1]

main :: IO ()

main = defaultMain [

env (genRandomStrings 1000) $ \ ~(values, _) ->

bgroup "counting random strings (1000)" [ bench "w/ Sketch" $ nf (totalCount . foldl' increment defaultSketch) values ]

]

NOTE: You can find all the code in the haskell-countmin GitLab repository.

And here’s the output from running stack bench:

countmin-0.1.0.0: benchmarks

Running 1 benchmarks...

Benchmark countmin-bench: RUNNING...

benchmarking counting random strings (1000)/w/ Sketch

time 32.92 μs (32.09 μs .. 34.49 μs)

0.993 R² (0.983 R² .. 0.999 R²)

mean 32.25 μs (31.88 μs .. 33.11 μs)

std dev 1.709 μs (857.7 ns .. 3.178 μs)

variance introduced by outliers: 59% (severely inflated)

Benchmark countmin-bench: FINISH

Completed 2 action (s).

Theoretically, it should have been as easy as running stack bench --profile to get a profile of this information, but things got a little sticky. I ended up filing an issue in criterion, which I’m still not 100% sure of, but I’ve found a workaround (using nfIO instead of nf) so I’m going to move past it. By the time I got past it though, Bench.hs looked very different:

{-# LANGUAGE NumericUnderscores #-}

import RIO

import Criterion.Main (defaultMain, bgroup, env, nfIO, bench)

import Data.Maybe (fromJust)

import Hashes.FarmHash (hFarmHash)

import Hashes.MD5 (hMD5)

import Hashes.SHA1 (hSHA1)

import Hashes.AlwaysZero (hAlwaysZero)

import Lib (buildSketch, Sketch, CountMin(..))

import BenchData (genRandomStrings)

-- | Default sketch, w/ all available hask fns used

onlyAlwaysZero :: Sketch

onlyAlwaysZero = fromJust $ buildSketch [hAlwaysZero]

-- | Only MD5

onlyMD5 :: Sketch

onlyMD5 = fromJust $ buildSketch [hMD5]

-- | Only SHA1

onlySHA1 :: Sketch

onlySHA1 = fromJust $ buildSketch [hSHA1]

-- | Only FarmHash

onlyFarmHash :: Sketch

onlyFarmHash = fromJust $ buildSketch [hFarmHash]

-- | All available hashes (except for AlwaysZero)

allHashes :: Sketch

allHashes = fromJust $ buildSketch [hMD5, hFarmHash, hSHA1]

main :: IO ()

main = defaultMain

[ env (genRandomStrings 1000) $ \ ~(values, _) ->

bgroup "counting random strings (1000) " [ bench "w/ Sketch (AlwaysZero)" $ nfIO $ pure $ totalCount (foldl' increment onlyAlwaysZero values)

, bench "w/ Sketch (MD5)" $ nfIO $ pure $ totalCount (foldl' increment onlyMD5 values)

, bench "w/ Sketch (FarmHash)" $ nfIO $ pure $ totalCount (foldl' increment onlyFarmHash values)

, bench "w/ Sketch (SHA1)" $ nfIO $ pure $ totalCount (foldl' increment onlySHA1 values)

, bench "w/ Sketch (MD5, FarmHash, SHA1)" $ nfIO $ pure $ totalCount (foldl' increment allHashes values)

]

, env (genRandomStrings 100_000) $ \ ~(values, _) ->

bgroup "counting random strings (100,000) " [ bench "w/ Sketch (AlwaysZero)" $ nfIO $ pure $ totalCount (foldl' increment onlyAlwaysZero values)

, bench "w/ Sketch (MD5)" $ nfIO $ pure $ totalCount (foldl' increment onlyMD5 values)

, bench "w/ Sketch (FarmHash)" $ nfIO $ pure $ totalCount (foldl' increment onlyFarmHash values)

, bench "w/ Sketch (SHA1)" $ nfIO $ pure $ totalCount (foldl' increment onlySHA1 values)

, bench "w/ Sketch (MD5, FarmHash, SHA1)" $ nfIO $ pure $ totalCount (foldl' increment allHashes values)

]

]

Note I introduced the AlwaysZero hash function as sort of a control, it doesn’t hash at all… it just always returns zero.

The output stack bench looks something like this thanks to criterion:

benchmarking counting random strings (1000) /w/ Sketch (AlwaysZero)

time 32.43 μs (32.15 μs .. 32.74 μs)

0.999 R² (0.999 R² .. 1.000 R²)

mean 32.26 μs (32.16 μs .. 32.48 μs)

std dev 449.5 ns (286.0 ns .. 760.7 ns)

benchmarking counting random strings (1000) /w/ Sketch (MD5)

time 33.10 μs (32.68 μs .. 33.58 μs)

0.998 R² (0.997 R² .. 0.999 R²)

mean 33.09 μs (32.87 μs .. 33.42 μs)

std dev 890.4 ns (779.4 ns .. 1.052 μs)

variance introduced by outliers: 27% (moderately inflated)

... more stuf ...

Here’s that data tabulated for easier consumption:

| Hash list | 1000 insertion mean |

100,000 insertion mean |

|---|---|---|

[AlwaysZero] |

32.26μs |

25.91ms |

[hMD5] |

33.09μs |

25.70ms |

[hFarmHash] |

32.54μs |

25.78ms |

[hSHA1] |

32.70μs |

25.80ms |

[hMD5, hFarmHash, hSHA1] |

32.65μs |

25.90ms |

A few things about these results:

AlwaysZero actually takes more time than the other hashing function combinations @ high insertion count, I think this has to do with literally every insertion being a collision (which it took me a few minutes to realize)FarmHash seems to be the fastest hash (and/or least collisions)Wait a second, this doesn’t make any sense – how could running 3 different hash functions be faster than running just one, or be anywhere close to literally doing nothing? Turns out I forgot a crucial strictness annotation on values for the Sketch data type:

-- The sketch

data Sketch = Sketch { hashFns :: ![HashMeta]

-- TODO: use a two level hashmap by HashMeta name instead?

, values :: !(VB.Vector (HashMap Integer Integer))

, eventsProcessed :: !Integer

}

After fixing that, I ran stack bench again, and here are the tabulated results:

| Hash list | 1000 insertion mean |

100,000 insertion mean |

|---|---|---|

[AlwaysZero] |

149.1μs |

15.78ms |

[hMD5] |

5.180ms (5180μs) |

658.4ms |

[hFarmHash] |

358.3μs |

114.5ms |

[hSHA1] |

7.048ms (7048μs) |

816.1ms |

[hMD5, hFarmHash, hSHA1] |

15.06ms (1506μs) |

1.894s (1894ms) |

Now this is more like it. These numbers make far more intuitive sense, running all 3 hashing functions looks like the sum of running them individually. FarmHash is clearly the fastest (it’s built to be fast), which means I should probably find some other meant-to-be-fast functions to use.

These numbers don’t mean much to me – they certainly seem fast enough but considering I’m not really applying this to a specific usecase/project, they serve as a baseline for performance improvements more than anything.

Finally we can get to memory usage and where time was spent – here’s the first part of the stack bench --profile output:

Sat Jun 1 13:50 2019 Time and Allocation Profiling Report (Final)

countmin-bench +RTS -N -p -RTS

total time = 86.68 secs (321163 ticks @ 1000 us, 8 processors)

total alloc = 201,808,071,608 bytes (excludes profiling overheads)

COST CENTRE MODULE SRC %time %alloc

getSHA1Sched Data.Digest.Pure.SHA src/Data/Digest/Pure/SHA.hs:(339,1)-(427,61) 35.9 6.6

increment.updateMinAndVector Lib src/Lib.hs:(41,11)-(62,59) 6.3 4.3

*>.w' Data.Serialize.Put src/Data/Serialize/Put.hs:164:17-36 3.9 6.2

insert.go Data.HashMap.Base Data/HashMap/Base.hs:(494,5)-(523,76) 3.8 3.5

integerDigest.addShift Data.Digest.Pure.SHA src/Data/Digest/Pure/SHA.hs:1160:8-55 3.1 6.8

fn Types src/Types.hs:22:22-23 3.0 4.1

>>= Data.Vector.Fusion.Util Data/Vector/Fusion/Util.hs:36:3-18 2.9 2.8

increment.functions Lib src/Lib.hs:35:11-46 2.4 1.9

*> Data.Serialize.Put src/Data/Serialize/Put.hs:(162,9)-(165,41) 2.1 10.8

primitive Control.Monad.Primitive Control/Monad/Primitive.hs:195:3-16 2.1 1.2

lookup.go Data.HashMap.Base Data/HashMap/Base.hs:(437,5)-(448,29) 1.7 0.6

md5Finalize.padBS Data.Digest.Pure.MD5 Data/Digest/Pure/MD5.hs:(103,13)-(106,55) 1.6 10.4

applyMD5Rounds Data.Digest.Pure.MD5 Data/Digest/Pure/MD5.hs:(147,9)-(215,33) 1.5 0.5

fmap Data.Vector.Fusion.Stream.Monadic Data/Vector/Fusion/Stream/Monadic.hs:(133,3)-(135,20) 1.3 0.9

runSHA Data.Digest.Pure.SHA src/Data/Digest/Pure/SHA.hs:(960,1)-(966,36) 1.1 2.1

clone16 Data.HashMap.Base Data/HashMap/Base.hs:(1312,1)-(1313,19) 0.9 3.7

sha1.bs_out Data.Digest.Pure.SHA src/Data/Digest/Pure/SHA.hs:994:3-42 0.9 7.6

copy.\ Data.HashMap.Array Data/HashMap/Array.hs:(247,9)-(248,30) 0.8 1.5

basicUnsafeFreeze Data.Vector Data/Vector.hs:(263,3)-(264,47) 0.7 1.3

toBigEndianSBS Data.Digest.Pure.SHA src/Data/Digest/Pure/SHA.hs:(260,1)-(262,55) 0.6 1.4

... more stuff ...

A few things to pull from the cost center breakdown

SHA1 is pretty slow, we’re spending 35% of our time in there, but it doesn’t allocate a crazy amount moreupdateMinAndVector (that big recursive updater function) is pretty prominent, probably a good place to try and optimize if possibleData.HashMap, Data.Serialize, Data.Vector show up, but I know I’m not using the best data structures alreadyAs you might expect, the speed data for the profiled run is not really so important since the benchmarking overhead is present, but the cost center data gives some hints on where to start optimizing.

Before we get started optimizing there’s one more thing – I want to know that I at least built the sketch right to do what sketches were supposed to do – reduce memory usage for these counts! Luckily for me, there is an awesome library called weigh which was developed by the folks at FPComplete. I’m going to use it to measure the data usage separately in a pseudo benchmark, but before I can do that, I need to create a Sketch that represents the naive approach, simply stuffing the counts in a regular HashMap with the counts as values. We’ll call it NotASketch:

-- | A sketch that isn't one, but instead uses the naive just-put-the-value-in-a-map approach

data NotASketch = NotASketch { nasValues :: !(HashMap ByteString Integer)

, nasEventsProcessed :: !Integer

}

instance CountMin NotASketch where

increment :: NotASketch -> ByteString -> NotASketch

increment (NotASketch oldValues nep) key = NotASketch { nasValues=HM.insertWith (+) key 1 oldValues

, nasEventsProcessed=nep+1

}

count :: NotASketch -> ByteString -> Integer

count s key = HM.lookupDefault 0 key (nasValues s)

hashFunctions :: NotASketch -> [HashMeta]

hashFunctions _ = []

totalCount :: NotASketch -> Integer

totalCount = nasEventsProcessed

Now to take a stab at using weigh to properly benchmark the usage (and hopefully seeing a drop in weight of the object afterwards:

-- | Memory tests for various sketches

testMemory :: IO ()

testMemory = sequence [genRandomByteStrings 1000, genRandomByteStrings 100_000]

>>= \[(v, _), (vv, _)] -> mainWith ( action "1000 increments (NotASketch)" (pure $ totalCount $ foldl' increment notASketch v) -- ^ 1000 increment tests

>> action "1000 increments (Sketch)" (pure $ totalCount $ foldl' increment allHashes v)

>> action "100,000 increments (NotASketch)" (pure $ totalCount $ foldl' increment notASketch vv) -- ^ 100,000 increment tests

>> action "100,000 increments (Sketch)" (pure $ totalCount $ foldl' increment allHashes vv)

)

And when I run stack bench the memory tests give me:

Case Allocated GCs

1000 increments (NotASketch) 53,375,168 51

1000 increments (Sketch) 89,301,416 78

100,000 increments (NotASketch) 5,583,442,200 5,386

100,000 increments (Sketch) 9,041,520,832 7,938

Benchmark countmin-bench: FINISH

Completed 2 action(s).

Well, that’s not what I expected at all… Sketch is allocating more memory and causing more GCs than NotASketch! Well that makes sense, given that Sketch does work, but this isn’t quite what I’m looking for – I’m looking for how much memory Sketch is using at steady state – it should be a matter of storing integers versus longer bytestrings! At least now we know have another angle on optimization – we probably don’t want to be allocating near 2x the objects and causing near 2x the GCs of the naive solution!

After some searching I came across an SO post which lead me to the GHC.DataSize module in the ghc-datasize package, let’s use that?

$ stack bench

Error: While constructing the build plan, the following exceptions were encountered:

In the dependencies for countmin-0.1.0.0:

ghc-datasize needed, but the stack configuration has no specified version (latest matching version is 0.2.0)

needed since countmin is a build target.

Some different approaches to resolving this:

* Consider trying 'stack solver', which uses the cabal-install solver to attempt to find some working build configuration. This can be convenient when dealing with many complicated constraint

errors, but results may be unpredictable.

* Recommended action: try adding the following to your extra-deps in /home/mrman/Projects/foss/haskell-countmin/stack.yaml:

- ghc-datasize-0.2.0

Plan construction failed.

Uh-oh, looks like ghc-datasize isn’t part of the resolver, let’s try and see if it will work as an extra-dep:

$ stack bench

Error: While constructing the build plan, the following exceptions were encountered:

In the dependencies for ghc-datasize-0.2.0:

ghc-heap-view must match >=0.5, but the stack configuration has no specified version (latest matching version is 0.5.10)

needed due to countmin-0.1.0.0 -> ghc-datasize-0.2.0

Some different approaches to resolving this:

* Consider trying 'stack solver', which uses the cabal-install solver to attempt to find some working build configuration. This can be convenient when dealing with many complicated constraint

errors, but results may be unpredictable.

* Recommended action: try adding the following to your extra-deps in /home/mrman/Projects/foss/haskell-countmin/stack.yaml:

- ghc-heap-view-0.5.10

Plan construction failed.

Uh-oh Uh-oh, looks like ghc-heap-view is also required, that’s a bad sign… Let’s try adding it?

$ stack bench

Error: While constructing the build plan, the following exceptions were encountered:

In the dependencies for ghc-heap-view-0.5.10:

Cabal-2.4.1.0 from stack configuration does not match >=1.24 && <2.1 (latest matching version is 2.0.1.1)

base-4.12.0.0 from stack configuration does not match >=4.5 && <4.11 (latest matching version is 4.10.1.0)

ghc-8.6.5 from stack configuration does not match >=8.0 && <8.2

needed due to countmin-0.1.0.0 -> ghc-heap-view-0.5.10

Some different approaches to resolving this:

* Set 'allow-newer: true' to ignore all version constraints and build anyway.

* Consider trying 'stack solver', which uses the cabal-install solver to attempt to find some working build configuration. This can be convenient when dealing with many complicated constraint

errors, but results may be unpredictable.

* Recommended action: try adding the following to your extra-deps in /home/mrman/Projects/foss/haskell-countmin/stack.yaml:

- Cabal-2.0.1.1

- base-4.10.1.0

Plan construction failed.

Uh-oh, looks like it requires a completely different version of base, this code only works on GHC versions 8.0 to 8.2 it looks like! While I could downgrade versions, maybe I’ll just use something else instead… Some looking around in that SO post also brought up Foreign.Storable’s sizeOf function, maybe that could work? Hopefully I can just derive Storable? Well, after enabling DeriveAnyClass and giving it a shot, it looks like that won’t be a thing:

$ stack bench

countmin-0.1.0.0: build (lib + bench)

Preprocessing library for countmin-0.1.0.0..

Building library for countmin-0.1.0.0..

[7 of 7] Compiling Lib ( src/Lib.hs, .stack-work/dist/x86_64-linux-tinfo6/Cabal-2.4.0.1/build/Lib.o)

/home/mrman/Projects/foss/haskell-countmin/src/Lib.hs:18:1: warning: [-Wunused-imports]

The import of ‘Foreign.Storable’ is redundant

except perhaps to import instances from ‘Foreign.Storable’

To import instances alone, use: import Foreign.Storable ()

|

18 | import Foreign.Storable

| ^^^^^^^^^^^^^^^^^^^^^^^

/home/mrman/Projects/foss/haskell-countmin/src/Lib.hs:25:34: warning: [-Wmissing-methods]

• No explicit implementation for

‘sizeOf’, ‘alignment’,

(either ‘peek’

or

‘peekElemOff’

or

‘peekByteOff’), and (either ‘poke’

or

‘pokeElemOff’

or

‘pokeByteOff’)

• In the instance declaration for ‘Storable Sketch’

|

25 | } deriving (Storable)

| ^^^^^^^^

Well, that didn’t work out so well, Storable is definitely not so easily derivable… I may need to write my own implementation for measuring the size which feels a little bit like cheating. A quick look at the minimal requirements for Storable (sizeOf, alignment, (peek | peekElemOff | peekByteOff), (poke | pokeElemOff | pokeByteOff)) tell me I don’t want to go down this rabbit hole and basically define a way to pass Sketch objects between C and haskell programs… AN SO post I came across did have a link to a pretty excellent article on computing the size of a HashMap in haskell, which I’ve bookmarked to read through but I definitely don’t want to deal with right now.

At this point I’ve run into 3 completely separate SO posts on the issue but none have a solution I can just use out of the box (on this version of ghc that is):

Unfortunately RIO’s HashMap doesn’t have an instance for Storable either so there’s no dice there as far as easily calculating the usage. Looks like it’s time to look outside haskell. It looks like I might be able to use pmap and it might be somewhere near accurate if things are kept relatively constant and I make sure to force a GC (Lucky for us performGC exists). It’s a pretty wild hypothesis so let’s just try it out without thinking too hard. We should be able to at least try this idea by doing the following:

genRandomByteStrings like we’ve been doing), make sure it’s fully evaluated (we’re going to use deepseq thanks to SO)pmap to get the memory usageBy following these steps and doubling the amount of random bytestrings we generate we should be able to see a ~2x increase in whatever numbers we get out (that should be some indication it’s working. Anyway, let’s try it out:

main :: IO ()

main = genRandomByteStrings 1000

>>= pure . force

>>= \values -> performGC

>> getLine

>> putStrLn "DONE"

Here’s the output of pmap:

$ pmap $(pgrep stack) -x

31239: stack bench

Address Kbytes RSS Dirty Mode Mapping

0000000000400000 56408 36644 0 r-x-- stack

0000000003d15000 7376 6364 2484 rw--- stack

0000000004449000 12 8 8 rw--- [ anon ]

000000000481e000 352 128 128 rw--- [ anon ]

... lots more lines ...

---------------- ------- ------- -------

total kB 1074268092 79116 35540

Now, let’s double that number of random strings to 2000, and see how the memory changes:

total kB 1074268092 79020 35636

Welp, KBytes is exactly the same and RSS is actually less than it was before. Dirty grew by (96 Kb) so that’s something but, yeah, this is not going to work.

Looks like I’m going to have to give this up for now, since I don’t want to spend the next few weeks trying to figure out how to convince GHC to provide me this information. This is a bit of intuition I would have loved to be able to get solid data for, but I just don’t know how – I’m going to have to just cross my fingers and hope that HashMap ByteString Integer is smaller than Vector (HashMap Integer Integer). Not all hope is lost though – I have one more idea for testing the usefulness of this library overall (more on this at the end).

Sketch typeWe didn’t quite get all the empirical data we wanted, but at least we have some number to help guide our optimization! One of the most obvious low hanging fruits is to replace the VB.Vector (HashMap Integer Integer) I was using to store the counts to something more efficient. Here’s the new code, which I’m calling BetterSketch:

-- | Constructor for building the better sketch

buildBetterSketch :: [HashMeta] -> Maybe BetterSketch

buildBetterSketch [] = Nothing

buildBetterSketch fns = Just $ BetterSketch fns HM.empty 0

type HashValueMap = HashMap Text (HashMap Integer Integer)

-- | A sketch that isn't one, but instead uses the naive just-put-the-value-in-a-map approach

data BetterSketch = BetterSketch { bsHashFns :: ![HashMeta]

, bsValues :: !HashValueMap

, bsEventsProcessed :: !Integer

}

-- | Process a hash meta to produce the name of the meta and the hashed value of some bytestring

processMeta :: ByteString -> HashMeta -> (Text, Integer)

processMeta k (HM n f) = (n, f k)

getValueOrZero :: ByteString -> HashValueMap -> HashMeta -> Integer

getValueOrZero key values (HM n f) = case HM.lookup n values of

Nothing -> 0

Just m -> fromMaybe 0 $ HM.lookup (f key) m

instance CountMin BetterSketch where

increment :: BetterSketch -> ByteString -> BetterSketch

increment (BetterSketch fns oldMap ep) key = BetterSketch { bsHashFns=fns

, bsValues=newMap

, bsEventsProcessed=ep+1

}

where

hashedValues = processMeta key <$> fns

updateMap m (hashName, hashedValue) = HM.alter (Just . HM.insertWith (+) hashedValue 1 . fromMaybe HM.empty) hashName m

newMap = foldl' updateMap oldMap hashedValues

count :: BetterSketch -> ByteString -> Integer

count (BetterSketch fns values _ ) key = fromMaybe 0 $ L.minimumMaybe counts

where

counts = getValueOrZero key values <$> fns

hashFunctions :: BetterSketch -> [HashMeta]

hashFunctions = bsHashFns

totalCount :: BetterSketch -> Integer

totalCount = bsEventsProcessed

And here are the benchmark results comparing Sketch, and BetterSketchin particular

| Sketch | Hash list | 1000 insertion mean |

100,000 insertion mean |

|---|---|---|---|

Sketch |

[AlwaysZero] |

150.6μs (stddev 13.69μs) |

15.70ms (stddev 2.799ms) |

BetterSketch |

[AlwaysZero] |

141.0μs (stddev 10.77μs) |

13.94ms (stddev 652.5μs) |

Sketch |

[hMD5] |

5.317ms (stddev 387.2μs) |

628.7ms (stddev 22.84ms) |

BetterSketch |

[hMD5] |

5.560ms (stddev 1.333ms) |

629.0ms (stddev 44.55ms) |

Sketch |

[hFarmHash] |

364.6μs (stddev 39.43μs) |

119.8ms (stddev 5.907ms) |

BetterSketch |

[hFarmHash] |

343.9μs (stddev 99.14μs) |

117.5ms (stddev 14.06ms) |

Sketch |

[hSHA1] |

8.078ms (stddev 1.937ms) |

848.4ms (stddev 8.605ms) |

BetterSketch |

[hSHA1] |

7.285ms (stddev 266.2μs) |

831.4ms (stddev 20.08ms) |

Sketch |

[hMD5, hFarmHash, hSHA1] |

16.01ms (stddev 263.8μs) |

1.940s (stddev 28.83ms) |

BetterSketch |

[hMD5, hFarmHash, hSHA1] |

15.60ms (stddev 448.4μs) |

1.894s (stddev 33.84ms) |

So this is only barely a better result – at 1000 insertions we see a ~<6% improvement in the mean with a higher standard deviation most times. In 100,000 insertions we see lower averages (still basically <5%) but more deviation (so less consistency).

Welp, looks like we didn’t get that much out of thi schange, what about memory efficiency?

| Sketch | # of increments | Bytes Allocated | GCs |

|---|---|---|---|

Sketch |

1000 | 88,872,912 | 77 |

BetterSketch |

1000 | 89,842,816 | 78 |

Sketch |

100,000 | 9,004,924,136 | 7,902 |

BetterSketch |

100,000 | 9,062,164,056 | 7,958 |

Looks like we’re more memory, and causing more GCs as BetterSketch runs (roughly 1%~2%) – not really very compelling. BetterSketch isn’t really earning the “better” part just yet, so maybe we should try a little more to optimize. looking at this my first thought is to unroll the HM.alter bit:

instance CountMin BetterSketch where

increment :: BetterSketch -> ByteString -> BetterSketch

increment (BetterSketch fns oldMap ep) key = BetterSketch { bsHashFns=fns

, bsValues=newMap

, bsEventsProcessed=ep+1

}

where

hashedValues = processMeta key <$> fns -- <-- this is necessary, can't get out of hashing the values

updateMap m (hashName, hashedValue) = HM.alter (Just . HM.insertWith (+) hashedValue 1 . fromMaybe HM.empty) hashName m -- <--- this is concise/pretty but maybe inefficient?

newMap = foldl' updateMap oldMap hashedValues -- <----- this is necessary (we need to do the update for every hash value), but maybe we could combine w/ updateMap?

If we unroll this some more, we get longer code:

instance CountMin BetterSketch where

increment :: BetterSketch -> ByteString -> BetterSketch

increment (BetterSketch fns oldMap ep) key = BetterSketch { bsHashFns=fns

, bsValues=newMap

, bsEventsProcessed=ep+1

}

where

doInsertWithFn outerMap (HM hashName hashFn) = let hashedValue = hashFn key

-- | first level is the hashName

in case HM.lookup hashName outerMap of

Nothing -> HM.insert hashName (HM.singleton hashedValue 1) outerMap

Just innerMap -> case HM.lookup hashedValue innerMap of

Nothing -> HM.insert hashName (HM.insert hashedValue 1 innerMap) outerMap

Just v -> HM.insert hashName (HM.insert hashedValue (v+1) innerMap) outerMap

newMap = foldl' doInsertWithFn oldMap fns

Here’s the performance & memory usage that I observed:

| Sketch | Hash list | 1000 insertion mean |

100,000 insertion mean |

|---|---|---|---|

Sketch |

[AlwaysZero] |

174.7μs (stddev 28.57μs) |

18.12ms (stddev 3.555ms) |

BetterSketch |

[AlwaysZero] |

150.5μs (stddev 31.52μs) |

14.54ms (stddev 392.7μs) |

Sketch |

[hMD5] |

5.607ms (stddev 913.6μs) |

671.1ms (stddev 38.92ms) |

BetterSketch |

[hMD5] |

5.374ms (stddev 428.1μs) |

691.1ms (stddev 77.05ms) |

Sketch |

[hFarmHash] |

393.4μs (stddev 44.62μs) |

120.7ms (stddev 3.635ms) |

BetterSketch |

[hFarmHash] |

354.2μs (stddev 35.84μs) |

115.7ms (stddev 3.299ms) |

Sketch |

[hSHA1] |

7.762ms (stddev 100.6ms) |

885.1ms (stddev 22.46ms) |

BetterSketch |

[hSHA1] |

7.745ms (stddev 294.0μs) |

874.5ms (stddev 13.94ms) |

Sketch |

[hMD5, hFarmHash, hSHA1] |

17.12ms (stddev 1.086ms) |

2.141s (stddev 169.5ms) |

BetterSketch |

[hMD5, hFarmHash, hSHA1] |

20.05ms (stddev 13.40ms) |

2.030s (stddev 39.11ms) |

| Sketch | # of increments | Bytes Allocated | GCs |

|---|---|---|---|

Sketch |

1000 | 90,175,120 | 79 |

BetterSketch |

1000 | 88,768,912 | 77 |

Sketch |

100,000 | 9,008,873,472 | 7,906 |

BetterSketch |

100,000 | 9,024,937,080 | 7,921 |

OK, so a little faster with the more unrolled code – in some cases the average is worse, but overall the time (which I didn’t print above) coming out of criterion for each testcase was consistently lower for BetterSketch. In the memory efficiency case the results were basically even (with some runs where BetterSketch had less bytes allocated and GCs – so the unrolling did help there. All in all this seems like I’m trying to pull water from a stone so I’m going to move on to other optimization approaches – evidently the difference between nested HashMaps and a list of HashMaps isn’t that great, for this particular usecase.

There are maybe a few reasons for the lack of a clear improvement despite what you’d think was better data structure usage:

I think the key take-aways can be summed up as the following:

HM.alter) didn’t matter much for performance but did help memory efficiencymRight now we’re building and using immutable maps. This means that even if we’re using some sort of persistent immutable data structure, at the end of the day we’re doing more work than strictly necessary to update the maps that we’re modifying. Let’s use a less “safe” but more efficient implementation of maps that we can use. The strict HashMap I’m using in RIO has an update of O(logN) complexity, but the hashtables package’s Data.HashTable uses mutable data structures and has O(1) complexity, but requires use of the IO monad (or at least some monad m).

NOTE Whenever you see O(logN), you can almost certainly bet the solution/underlying data is tree-structured in some way.

Let’s add a typeclass called CountMinM which represents CountMin functionality under some monad m (likely to be IO):

-- | Maximum performance sketches that reside in some monad m (likely IO)

class CountMinM m sketch where

-- | Increment the count of a given key

incrementM :: sketch -> ByteString -> m ()

-- | Helper for incrementing with a regular string

incrementStrM :: sketch -> String -> m ()

incrementStrM s = incrementM s . encodeUtf8 . T.pack

-- | Get the count of items seen for a given key

countM :: sketch -> ByteString -> m Integer

-- | Retrieve the hash functions in use

hashFunctionsM :: sketch -> m [HashMeta]

Here’s the implementation for BestSketch (I’m definitely painting myself into a corner with these names):

{-# LANGUAGE MultiParamTypeClasses #-} -- we'll need this for multi parameter type class implementations

type HashValueMap t = t Text (t Integer Integer) -- needed to change so I could use HashValueMap w/ hashtables lib

instance CountMinM IO BestSketch where

incrementM :: BestSketch -> ByteString -> IO ()

incrementM (BestSketch fns oldMap ep) key = mapM_ doMutation fns

>> modifyIORef' ep (+1)

where

doMutation (HM hashName hashFn) = let hashedValue = hashFn key

in HTIO.lookup oldMap hashName

>>= \case

Nothing -> HTIO.fromList [(hashedValue, 1)]

>>= \createdMap -> HTIO.insert oldMap hashName createdMap

Just innerMap -> HTIO.lookup innerMap hashedValue

>>= \case

Nothing -> HTIO.insert innerMap hashedValue 1

Just v -> HTIO.insert innerMap hashedValue (v+1)

countM :: BestSketch -> ByteString -> IO Integer

countM (BestSketch fns values _ ) key = mapM lookup2 fns

>>= pure . catMaybes

>>= pure . fromMaybe 0 . L.minimumMaybe

where

lookup2 (HM hashName hashFn) = HTIO.lookup values hashName

>>= \case

Nothing -> pure Nothing

Just innerMap -> HTIO.lookup innerMap (hashFn key)

hashFunctionsM :: BestSketch -> IO [HashMeta]

hashFunctionsM = pure . bstHashFns

totalCountM :: BestSketch -> IO Integer

totalCountM = readIORef . bstEventsProcessed

I didn’t really check this too thoroughly but it looks right (which reminds me, I really need to run these new better sketches through the tests). I’ve got some high hopes for this implementation! Let’s see what the benchmarks (compared between BetterSketch and BestSketch)look like:

| Sketch | Hash list | 1000 insertion mean |

100,000 insertion mean |

|---|---|---|---|

BetterSketch |

[AlwaysZero] |

143.1μs (stddev 2.065μs) |

14.82ms (stddev 632.1μs) |

BestSketch |

[AlwaysZero] |

270.1μs (stddev 8.407μs) |

27.21ms (stddev 310.7μs) |

BetterSketch |

[hMD5] |

5.263ms (stddev 478.7μs) |

625.1ms (stddev 19ms) |

BestSketch |

[hMD5] |

5.781ms (stddev 253.7μs) |

880.4ms (stddev 32.68ms) |

BetterSketch |

[hFarmHash] |

343.1μs (stddev 33.80μs) |

112.6ms (stddev 12.94ms) |

BestSketch |

[hFarmHash] |

694.6μs (stddev 64.02μs) |

136.8ms (stddev 2.630ms) |

BetterSketch |

[hSHA1] |

7.514ms (stddev 1.980ms) |

845.5ms (stddev 78.60ms) |

BestSketch |

[hSHA1] |

7.807ms (stddev 1.011ms) |

1.106s (stddev 92.74ms) |

BetterSketch |

[hMD5, hFarmHash, hSHA1] |

15.01ms (stddev 150.7μs) |

1.958s (stddev 111.3ms) |

BestSketch |

[hMD5, hFarmHash, hSHA1] |

16.20ms (stddev 298.6μs) |

2.536s (stddev 82.96ms) |

Welp, color me super perplexed – I was really expecting things to get considerably faster once I was operating in the IO monad, but they actually got worse. My implementation is either incredibly naive, or something else I can’t see is horribly wrong. Let’s look at memory efficiency (again comparing BetterSketch with BestSketch):

| Sketch | # of increments | Bytes Allocated | GCs |

|---|---|---|---|

BetterSketch |

1000 | 88,710,032 | 77 |

BestSketch |

1000 | 88,306,808 | 77 |

BetterSketch |

100,000 | 9,026,440,448 | 7,923 |

BestSketch |

100,000 | 8,781,738,552 | 7,670 |

OK, no crazy surprised here, but we’re seeing slightly more memory efficiency than BetterSketch, so that’s a good, expected sign at least.

It’s still not super clear why the IO version would be slower than the pure version – the iteration & rebuilding that would normally be forced to happen in the pure case, should not be happening in the IO case.

It’s really disappointing but this post is already super long so I’m going to avoid any further optimization – let’s hope 100,000 insertions in ~1 second is good enough! If you’ve got some thoughts on the optimization and what I’m doing wrong, please send me an email!

By this point you might have forgotten that we were going to use doctest, but I wanted to show an example on how to get started with it so let’s add a doctest! There’s also a related package called doctest-discover which exists to make it really easy to run the doc tests as well.

Here are the changes I needed to make (with one example of a doctest):

package.yaml

tests:

# ... other test settings are elided ...

countmin-doctest:

main: Spec.hs

source-dirs: doc-test

dependencies:

- countmin

- doctest

- doctest-discover

ghc-options:

- -threaded

- -rtsopts

- -with-rtsopts=-N

doc-test/Spec.hs

{-# OPTIONS_GHC -F -pgmF doctest-discover #-}

src/Lib.hs:

-- | Create a sketch with the given functions and an empty vector of maps for the hash outputs

--

-- Examples:

--

-- >>> buildSketch []

-- Nothing

--

-- >>> import Hashes.FarmHash (hFarmHash)

-- >>> isJust $ buildSketch [hFarmHash]

-- True

buildSketch :: [HashMeta] -> Maybe Sketch

buildSketch [] = Nothing

buildSketch fns = Just $ Sketch fns (VB.replicate (length fns) HM.empty) 0

I only added one doctest (again this post is getting mega long) but you can imagine the rest! If I ever get around to polishing & releasing this library then there (hopefully) will be a lot more.

If you read this blog you’ll know I love GitLab – one of the awesome features that comes with GitLab is GitLab Pages, which allows hosting a site through GitLab (similar to Github Pages). I’ll be using GitLab pages for this project in order to host the Haddock documentation so others can view it online (and someday published package can link to it). This post isn’t a detailed introduction/how-to so it will be light on details, but luckily there isn’t much to it – all we’ll need is to write some YAML for the .gitlab-ci.yml.

The GitLab documentation on Pages is pretty great, so feel free to give that a read. If you want to skip straight to the YAML, here’s what I added:

.gitlab-ci.yml

image: haskell:8.6.5

cache:

paths:

- .stack-root

- .stack-work

stages:

- prepare

- test

- deploy

variables:

# Set the STACK_ROOT so stack installs to folders gitlab can cache

STACK_ROOT: /builds/mrman/haskell-countmin/.stack-root

before_script:

- stack install

update_cache:

stage: prepare

cache:

key: default

paths:

- .stack-root

- .stack-work

policy: pull-push

only:

- web

script:

- stack install

test:

stage: test

cache:

key: default

paths:

- .stack-root

- .stack-work

policy: pull # only pull from the cache don't update it

script:

- stack test

pages:

stage: deploy

# only:

# - master

cache:

key: default

paths:

- .stack-root

- .stack-work

policy: pull # only pull from cache don't update it

script:

- stack haddock

- cp -r .stack-work/dist/*/*/doc/html/countmin public

artifacts:

paths:

- public

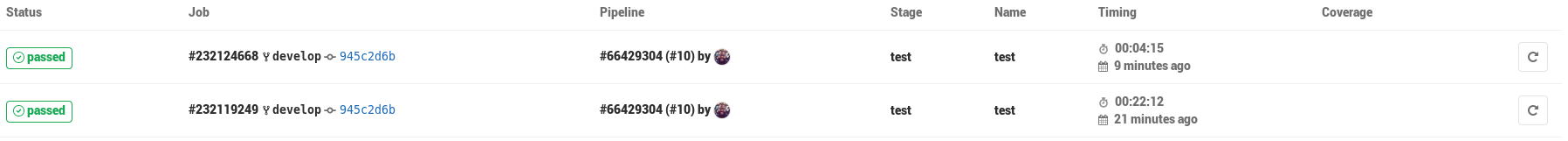

BTW, that caching-related settings (STACK_ROOT, the cache YAML settings) are hugely important. The first build without it was 20 minutes, and the one after was 4 minutes 15 seconds.

After some experimentation with the cache setting – changing cache:policy to pull and adding a web-triggered stage for updating the cache manually – the test stage was down to 1 minute 53 seconds!

Along with some nice fast tests in CI, the haddock documentation is available via GitLab pages @ https://mrman.gitlab.com/haskell-countmin!

It was fun to get away from the usual programs (web/API servers) I write and write something a little more “low level”. It wasn’t much more than just gluing together some existing libraries and using them to implement the rough functionality of a sketch but this was a good chance to use some best practices in the Haskell ecosystem on a project. I was pretty unpleasantly surprised by how unintuitive (and difficult to get started with) the optimization process was – it didn’t go very well for me at least and I spent most of my time figuring out the tooling and just having what I expected to happen completely trounced, but it’s likely that’s just due to my own lack of experience with what GHC is doing under the covers. How much of this is Haskell being difficult or myself being inexperienced at writing lower level Haskell is unclear (I expect more of the latter).

Either way, hopefully some people out there will enjoy the code (which is open sourced under the MIT license), I know I enjoyed writing it.