tl;dr - A combination of pre-baked very heavy docker images, sccache, and explicitly setting CARGO_HOME and CARGO_TARGET_DIR will do it. If you’re squeamish about large docker images in your build pipeline, close this tab.

Today was the last day I put up with unnecessary rebuilds in my Rust CI pipelines.

Sure, I probably shouldn’t have ported this particular project to Rust (Nimbus is powered by TypeScript and Rust), but when someone gives you strong type safety, performance, and static binary builds and tells you to pick 3, you start getting a bit trigger-happy with language choice.

This isn’t about that decision anyway, it’s about how long I was waiting for builds. Let’s talk more about that.

cargo is awesome, but it often compiles and rebuilds things you wouldn’t expect during dockerized CI runs. I’m not the only one who’s had issues getting it right:

Now not all the problems above are relevant here, but the point is that builds were taking a long time, and they shouldn’t have, because I was already using an explicitly built -builder image (more on that later).

Builds should have been no-ops, and build times should have been under a second, not minutes (builder and the application have ~100% overlap in crates!).

I chose to explicitly pay an image size penalty (you don’t want to know how many GBs this -builder container is) with a builder.Dockerfile like this:

FROM rust:1.66.0-alpine3.16@sha256:b7ca6566f725e9067a55dac02db34abe470b64e4c36fd7c600abdb40a733fc05

# Install deps

RUN apk add musl-dev openssl openssl-dev coreutils git openssh

# Install development/testing deps

RUN cargo install just cargo-get cargo-edit cargo-cache

RUN rustup component add clippy

# Install the project

COPY . /app

WORKDIR /app

RUN just lint build

You’d expect no building after this, right? Well, it works… on my machine (inside a docker container):

# ... building the builder image ...

Successfully built 0dfa4e9aec3b

Successfully tagged registry.gitlab.com/my/awesome/project/builder:0.x.x

$ docker run --rm -it registry.gitlab.com/my/awesome/project/builder:0.x.x

/app # ls

CHANGELOG Cargo.lock Cargo.toml Justfile README.md build.rs cliff.toml docs infra secrets src target templates

/app # cargo build

Finished dev [unoptimized + debuginfo] target(s) in 0.26s

/app # just lint

Finished dev [unoptimized + debuginfo] target(s) in 0.38s

But that wasn’t the case in CI (which is also containerized) – running just lint (if you’ve never heard of just, you’re welcome) triggered some building:

$ just lint

Compiling libc v0.2.137

Compiling autocfg v1.1.0

Compiling proc-macro2 v1.0.47

Compiling quote v1.0.21

Compiling unicode-ident v1.0.5

Compiling version_check v0.9.4

Compiling syn v1.0.103

Checking cfg-if v1.0.0

Compiling memchr v2.5.0

Checking once_cell v1.16.0

Compiling serde_derive v1.0.147

Compiling cc v1.0.74

Compiling futures-core v0.3.25

Compiling ahash v0.7.6

Compiling log v0.4.17

Checking getrandom v0.2.8

Compiling lock_api v0.4.9

Compiling parking_lot_core v0.9.4

Checking pin-project-lite v0.2.9

# ... you get the idea

Quite perplexing.
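One way to start narrowing this down is to print where Cargo will actually read and write in each environment, since a CI job typically runs from the runner's checkout directory rather than the /app directory baked into the image. A small sketch, assuming a POSIX shell, that the job runs from the workspace root, and that no `build.target-dir` config overrides the defaults; the function name `effective_cargo_paths` is made up for illustration:

```shell
#!/bin/sh
# Print the directories Cargo would use for its home and its build output.
# The fallbacks mirror Cargo's defaults when the variables are unset:
# $HOME/.cargo for CARGO_HOME and ./target for the target directory.
effective_cargo_paths() {
  cargo_home="${CARGO_HOME:-$HOME/.cargo}"
  target_dir="${CARGO_TARGET_DIR:-$PWD/target}"
  printf 'cwd=%s\n' "$PWD"
  printf 'cargo_home=%s\n' "$cargo_home"
  printf 'target_dir=%s\n' "$target_dir"
}

effective_cargo_paths
```

Running it once via docker run and once as the first line of a CI job makes any difference between the three values easy to spot.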

A reasonable first solution is to use the built-in caching features of the platform – CI systems like GitLab CI have excellent documentation and great caching features:

.gitlab-ci.yml

image: registry.gitlab.com/my/awesome/project/builder:0.x.x

cache:

  paths:

    - target

    - .cargo/bin

    - .cargo/registry/index

    - .cargo/registry/cache

    - .cargo/git/db

variables:

  CARGO_HOME: $CI_PROJECT_DIR/.cargo

stages:

# ...elided

There’s only one problem with that – it can take over a minute to simply get the files out of cache:

Skipping Git submodules setup

Restoring cache 01:33

Checking cache for default-non_protected...

No URL provided, cache will not be downloaded from shared cache server. Instead a local version of cache will be extracted.

Successfully extracted cache

Executing "step_script" stage of the job script 02:04

Using docker image sha256:865cd3bf6a0dae464f45c2d08e9e96688af68d7dd789ea5ce34a25895ea141de for registry.gitlab.com/my/awesome/project/builder:0.x.x with digest registry.gitlab.com/my/awesome/project/builder@sha256:bbe09176090cbadf5b75105739ed450f719a39072afc9fcc0cf9fc7a7dab5270 ...

$ just build test-unit

Compiling once_cell v1.16.0

Compiling log v0.4.17

You’ll also notice that despite all that, the project was still building stuff! Clearly, something wasn’t being cached.

But why do I need the CI filesystem cache at all?

The built code is already in the image – I’m lugging around this humongous image precisely so I can avoid build costs later, right?

Well, some hours later, I found a solution that works, and here it is:

- Use a -builder Docker image (kind of like a janky manual multi-stage build) to pack build deps into the build image itself
- Set CARGO_HOME to a neutral location like /usr/local/cargo (i.e. not the $HOME directory of the user, and not the folder your build will end up running in)
- Set CARGO_TARGET_DIR to a neutral location like /usr/local/build/target
- Set SCCACHE_DIR to a neutral location like /usr/local/sccache
- Modify Cargo.toml to include rustc-wrapper = "/path/to/sccache" (see sccache README)

Here are the files that make this work out for me, and the relevant content.

builder.Dockerfile

FROM rust:1.66.0-alpine3.16@sha256:b7ca6566f725e9067a55dac02db34abe470b64e4c36fd7c600abdb40a733fc05

# Install deps

RUN apk add musl-dev openssl openssl-dev coreutils git openssh sccache

ENV CARGO_HOME=/usr/local/cargo

ENV CARGO_TARGET_DIR=/usr/local/build/target

ENV SCCACHE_DIR=/usr/local/sccache

# Install development/testing deps

RUN cargo install just cargo-get cargo-edit cargo-cache

RUN rustup component add clippy

# Install the project

COPY . /app

WORKDIR /app

RUN just lint build
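The "neutral location" rule for the three directories set in this Dockerfile can be checked mechanically. The following is only a sketch under a few assumptions: a POSIX shell, GitLab's `CI_PROJECT_DIR` variable as the checkout path (falling back to the current directory), and two hypothetical helper names, `is_inside` and `check_neutral`:

```shell
#!/bin/sh
# Succeed if path $1 is the directory $2 or lies somewhere below it.
is_inside() {
  case "$1/" in
    "$2"/*) return 0 ;;
    *) return 1 ;;
  esac
}

# Report whether a cache directory is set and sits outside both $HOME and
# the checkout directory. Arguments: variable name, its value, checkout path.
check_neutral() {
  name="$1"; value="$2"; checkout="$3"
  home="${HOME:-/nonexistent}"
  if [ -z "$value" ]; then
    echo "$name: unset"
    return 1
  fi
  if is_inside "$value" "$home" || is_inside "$value" "$checkout"; then
    echo "$name: $value is inside \$HOME or the checkout"
    return 1
  fi
  echo "$name: $value looks neutral"
}

# CI_PROJECT_DIR is GitLab's checkout path; fall back to the current directory.
checkout="${CI_PROJECT_DIR:-$PWD}"
status=0
check_neutral CARGO_HOME "${CARGO_HOME:-}" "$checkout" || status=1
check_neutral CARGO_TARGET_DIR "${CARGO_TARGET_DIR:-}" "$checkout" || status=1
check_neutral SCCACHE_DIR "${SCCACHE_DIR:-}" "$checkout" || status=1
echo "exit status would be $status"
```

Dropping something like this at the top of a CI job is a cheap way to catch a variable that a pipeline-level setting has quietly pointed back into the checkout.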

Cargo.toml

[package]

name = "....."

# ... other stuff

[build]

rustc-wrapper = "/usr/bin/sccache"

[dependencies]

# .... other stuff

[build-dependencies]

# .... other stuff
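A note on where this setting lives: Cargo's documentation lists `build.rustc-wrapper` as a configuration value (read from a `config.toml` under `.cargo/` or `$CARGO_HOME`) and `RUSTC_WRAPPER` as the equivalent environment variable. If Cargo reports the `[build]` table in the manifest as an unused key, the same setting can be applied through either of those routes. A minimal sketch, assuming sccache lives at /usr/bin/sccache as in the snippet above; the function name `write_wrapper_config` is hypothetical:

```shell
#!/bin/sh
# Write a config.toml in the given Cargo home that sets build.rustc-wrapper.
# Refuses to touch an existing file so a second [build] table is never added.
write_wrapper_config() {
  cargo_home="$1"
  wrapper="$2"
  config="$cargo_home/config.toml"
  if [ -e "$config" ]; then
    echo "refusing to overwrite $config" >&2
    return 1
  fi
  mkdir -p "$cargo_home" || return 1
  printf '[build]\nrustc-wrapper = "%s"\n' "$wrapper" > "$config"
}

# Example invocation (commented out so the script has no side effects):
# write_wrapper_config "${CARGO_HOME:-/usr/local/cargo}" /usr/bin/sccache

# Alternative that needs no file: Cargo also reads this environment variable.
# export RUSTC_WRAPPER=/usr/bin/sccache
```

In a Dockerfile, the environment-variable route would be a single ENV line next to the other three, which keeps all of the cache-related settings in one place.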

.gitlab-ci.yml

image: registry.gitlab.com/my/awesome/project/builder:0.x.x

stages:

  - lint

  - build

  - test

  - publish

  - extra

clippy:

  stage: lint

  script:

    - cargo cache

    - cargo clippy --frozen --offline --locked -- -D warnings # You don't need all this.

    - cargo cache

build:

  # ...elided

unit:

  # ...elided

e2e:

  # ...elided

docker_image:

  # ...elided

tag_new_version:

  # ...elided

Dockerfile

FROM registry.gitlab.com/my/awesome/project/builder:0.x.x AS builder

ENV CARGO_HOME=/usr/local/cargo

ENV CARGO_TARGET_DIR=/usr/local/build/target

ENV SCCACHE_DIR=/usr/local/sccache

# Add updated code

COPY . /app

WORKDIR /app

RUN just build-release

FROM rust:1.66.0-alpine3.16@sha256:b7ca6566f725e9067a55dac02db34abe470b64e4c36fd7c600abdb40a733fc05

RUN apk add openssl # unfortunately rustls doesn't work quite right for this project

COPY --from=builder /app/target/release/nws-provider-cache-redis /app/nws-provider-cache-redis

CMD [ "/app/nws-provider-cache-redis" ]
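Because `CARGO_TARGET_DIR` redirects Cargo's output directory, it may be worth double-checking where the release binary lands before the `COPY --from=builder` line runs. Whether `just build-release` moves the binary back under /app/target is not shown here, so treat this as a sanity check rather than a correction. The sketch assumes a POSIX shell, no `--target` triple, and no config file overriding the target directory; `release_binary_path` is a hypothetical helper name:

```shell
#!/bin/sh
# Print the path where `cargo build --release` would place a binary,
# assuming no --target triple is passed and no config overrides the target dir.
release_binary_path() {
  bin_name="$1"
  target_dir="${CARGO_TARGET_DIR:-target}"
  printf '%s/release/%s\n' "$target_dir" "$bin_name"
}

release_binary_path nws-provider-cache-redis
```

With the ENV values from the Dockerfile above, this prints /usr/local/build/target/release/nws-provider-cache-redis, which is the path to compare against the source side of the COPY instruction.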

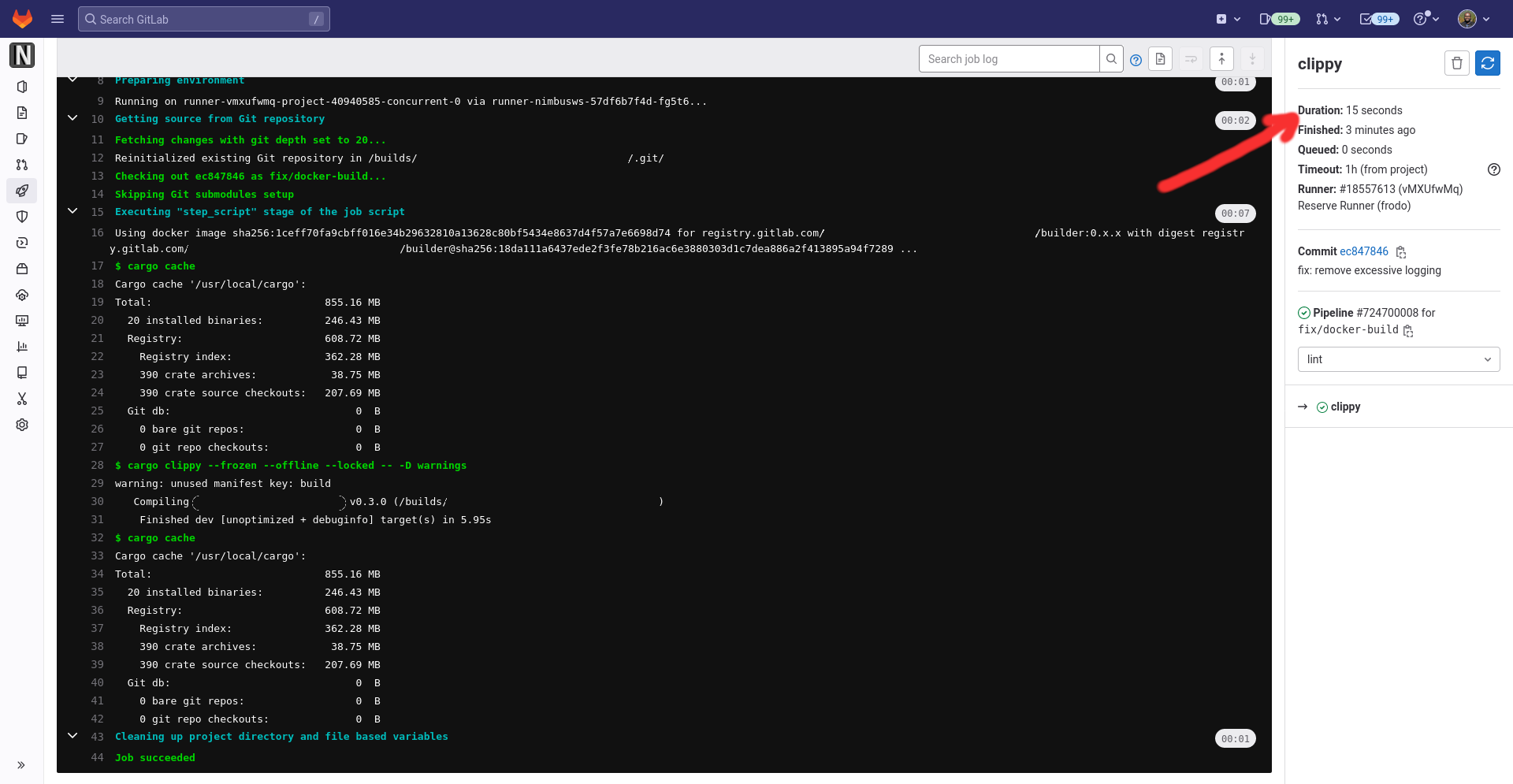

And when it all works together, I get a beautiful 15 second build:

I hope this works for you and yours this holiday season. 🎁

The catch: your build systems are going to have multi-GB images sitting around on them.

In practice, this probably doesn’t matter – as long as a reasonably powerful machine does the pulling (and you don’t land on a machine without any cached images too often), the base image is simply already there (yay copy-on-write filesystems!).

I’m absolutely willing to trade a little hard-disk space and a requirement for very fast internet in exchange for builds that finish in seconds. Sure, I can’t push up my builder image from cafe WiFi, but maybe some day when we have powerful quantum networks even that will be solved.

Being annoyed by build times in compile-time type-checked languages with superior type systems happens to me from time to time:

What have I learned this time? sccache is cool, and outside of that absolutely nothing.

See you next time I break/slow down my build and burn hours trying to get it zippy again.