tl;dr - Rancher 2.0 is out; check out the demo video, it’s pretty slick. I start to set up Rancher, mess up, do some debugging, and eventually get it working with a bit of a hack. Skip to the section near the end (named “The whole process, abridged”), just before the wrap-up, to see the full list of steps I took to get Rancher running on my own local single-node Kubernetes cluster.

UPDATE - There were two major problems with this post. The first is that the configuration posted here DOESN’T mount a place for rancher/server to store its information (the docs describe this as a type of HA setup, but it’s required for any sort of persistence). The second is that I ended up pointing the agent at a ClusterIP directly (modifying the auto-generated Rancher config along the way). Both have been fixed; check out the updated resource configuration at the bottom of this post.

Very, very early on in my series about installing Kubernetes I mentioned just a tiny bit about Rancher and RancherOS. While I found RancherOS to be a step too far (I’m not quite ready to have system services run in containers), Rancher looked like an amazing administration tool for my Kubernetes cluster (though I hadn’t tried it). With the recent announcement of the Rancher 2.0 release, I thought this was a good time to set it up on my small cluster and kick the tires.

Turns out, Rancher 2.0 has just recently been released, and there’s an awesome demo video to show some of the features that have been added and how things have changed. A quick list of changes I noticed:

It really does look awesome so I’m going to give it a try on my super small cluster, and see what it looks like!

One of the things I love about Rancher is its relatively simple startup/configuration path. If I remember correctly, all I need to do is run a single container, and then I can get in and start setting things up.

As usual, the first stop is the documentation on how to set up Rancher. I still find that documentation a little daunting, but the bit I really care about is a non-High Availability (HA) setup, which is pretty easy… All I’ve gotta do is run the docker image!

Since I’m running Kubernetes already, I should make a Kubernetes Deployment, and likely a Kubernetes Service to expose the Rancher Server (which serves both the admin console AND the API):

```yaml
---
apiVersion: v1
kind: Service
metadata:
  name: rancher-svc
  labels:
    app: rancher
spec:
  type: LoadBalancer
  selector:
    app: rancher
  ports:
  - name: rancher-ui
    port: 8080
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: rancher
  labels:
    app: rancher
spec:
  replicas: 1
  template:
    metadata:
      labels:
        app: rancher
    spec:
      containers:
      - name: rancher
        image: rancher/server:v2.0.0-alpha7
        ports:
        - containerPort: 8080
```

For now, I’ll skip the Ingress resource that would expose it to the outside world and just kubectl port-forward to it.

After a little bit of waiting post kubectl apply, here’s the pod:

```console
$ kubectl get pods | grep rancher
rancher-812871915-nh9pm   1/1       Running   0          49s
```

And to port-forward it to my local machine:

```console
$ kubectl port-forward rancher-812871915-nh9pm 8080:8080
```

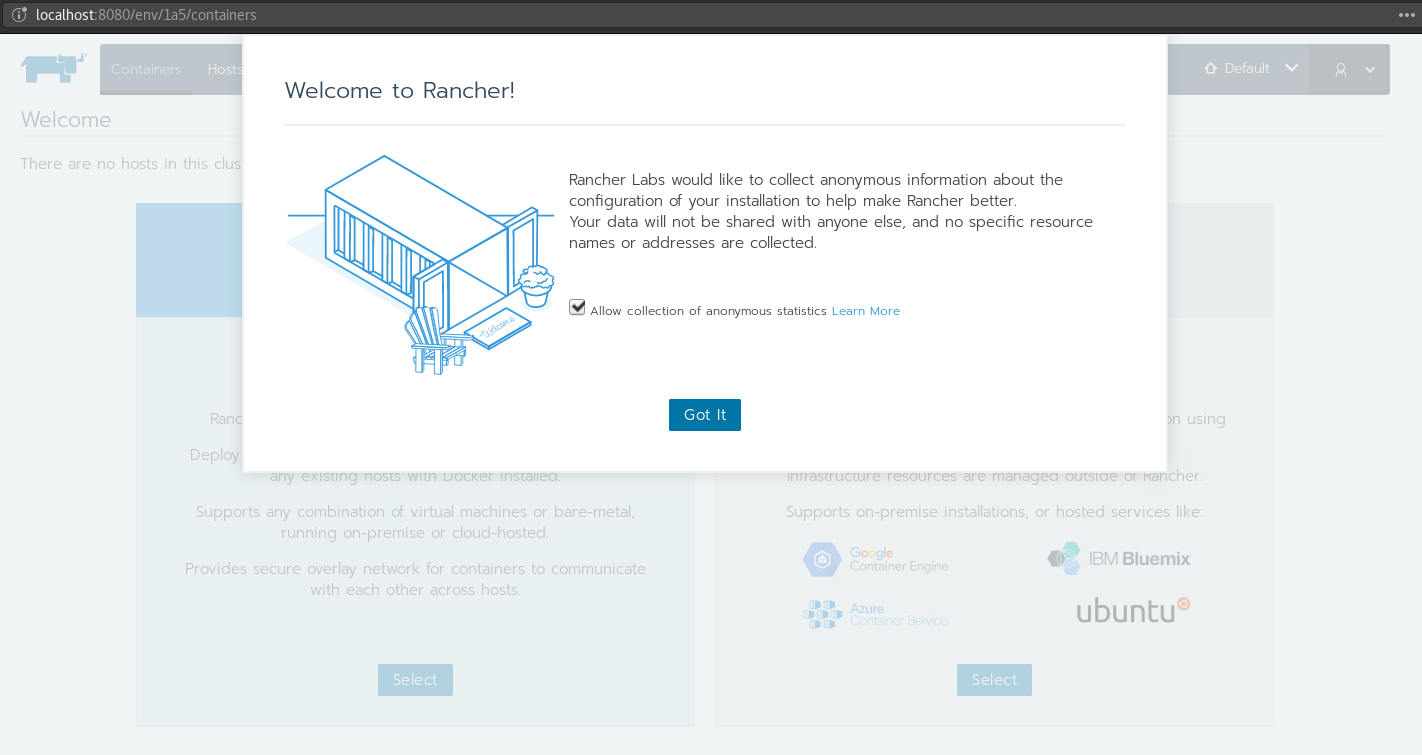

EZ PZ! Here’s what I see:
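Since the generated pod name (rancher-812871915-nh9pm) changes every time the Deployment creates a new pod, it can be handy to look the pod up by label instead of grepping and copying. A minimal sketch, assuming the app=rancher label from the Deployment above; the helper names are hypothetical, and the !type part of the selector assumes the rancher-agent pods (labelled app: rancher and type: agent in Rancher’s generated config) might end up in the same namespace:

```shell
#!/usr/bin/env bash
# Hypothetical helpers: find the rancher/server pod by label and port-forward to it.
# Assumption: the server pod is the only app=rancher pod WITHOUT a "type" label.

rancher_pod_name() {
  local ns="${1:-default}"
  # jsonpath prints only the first matching pod's name, no table header
  kubectl get pods -n "$ns" -l 'app=rancher,!type' -o jsonpath='{.items[0].metadata.name}'
}

forward_rancher_ui() {
  local ns="${1:-default}" pod
  pod="$(rancher_pod_name "$ns")" || return 1
  [ -n "$pod" ] || { echo "no rancher/server pod found in $ns" >&2; return 1; }
  # Blocks until interrupted; the UI is then at http://localhost:8080
  kubectl port-forward -n "$ns" "$pod" 8080:8080
}
```

Running forward_rancher_ui (or forward_rancher_ui cattle-system, if the Deployment lives there) replaces the two manual steps above.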

Now I can walk through the web-based setup! After clicking the “Existing Kubernetes” option, I was directed to how to connect to the Kubernetes API.

Since I’d forgotten exactly how my Kubernetes API server was set up, it was time to check the manifest.yaml file for the Kubernetes API on the actual server… I didn’t see anything that would tell me how the Kubernetes API is made available to pods, and my instinct is that it actually isn’t. I didn’t set up a Service, and unless Kubernetes automatically exposes the API to containers, I’d have to use the Cluster IP (which I can get with kubectl get pods -n kube-system -o wide), but that could theoretically change…

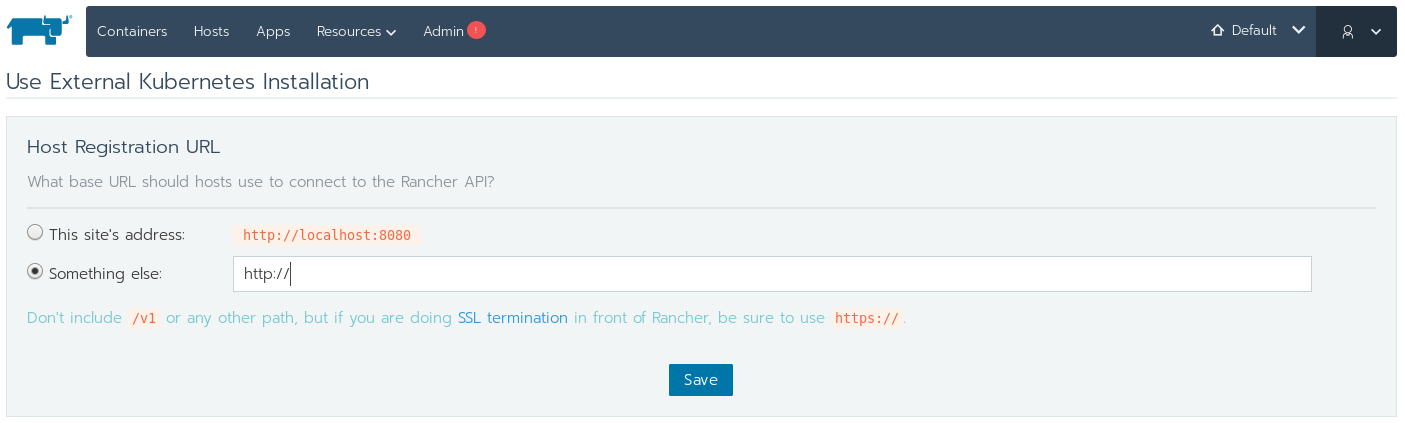

Looking back at the configuration for the API dashboard, it turns out it has the capability of automatically discovering the API server host… I don’t have that luxury here, but let’s just leave in localhost:8080 for now. Here’s what you see when you click through:

Note from the future - This is wrong: the setup guide was actually asking how other nodes should connect to Rancher Server, not to the Kubernetes API. I figured this out after a bunch of wandering, so skip to the end if you want to skip the wandering.

Here’s the resource configuration that Rancher generated and wants me to kubectl apply (you can just download it with wget or curl):

```yaml
---
apiVersion: v1
kind: Namespace
metadata:
  name: cattle-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: rancher
  namespace: cattle-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: rancher
  namespace: cattle-system
subjects:
- kind: ServiceAccount
  name: rancher
  namespace: cattle-system
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: v1
kind: Secret
metadata:
  name: rancher-credentials-d01e8bfe
  namespace: cattle-system
type: Opaque
data:
  url: <REDACTED>
  access-key: <REDACTED>
  secret-key: <REDACTED>
---
apiVersion: v1
kind: Pod
metadata:
  name: cluster-register-d01e8bfe
  namespace: cattle-system
spec:
  serviceAccountName: rancher
  restartPolicy: Never
  containers:
  - name: cluster-register
    image: rancher/cluster-register:v0.1.0
    volumeMounts:
    - name: rancher-credentials
      mountPath: /rancher-credentials
      readOnly: true
  volumes:
  - name: rancher-credentials
    secret:
      secretName: rancher-credentials-d01e8bfe
---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: rancher-agent
  namespace: cattle-system
spec:
  template:
    metadata:
      labels:
        app: rancher
        type: agent
    spec:
      containers:
      - name: rancher-agent
        command:
        - /run.sh
        - k8srun
        env:
        - name: CATTLE_ORCHESTRATION
          value: "kubernetes"
        - name: CATTLE_SKIP_UPGRADE
          value: "true"
        - name: CATTLE_REGISTRATION_URL
          value: http://localhost:8080/v3/scripts/02FF05C9FBD614E8AD60:1483142400000:zA0q1T02D2iJqfi0X2TjhjT5fiY
        - name: CATTLE_URL
          value: "http://localhost:8080/v3"
        - name: CATTLE_NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        image: rancher/agent:v2.0-alpha4
        securityContext:
          privileged: true
        volumeMounts:
        - name: rancher
          mountPath: /var/lib/rancher
        - name: rancher-storage
          mountPath: /var/run/rancher/storage
        - name: rancher-agent-state
          mountPath: /var/lib/cattle
        - name: docker-socket
          mountPath: /var/run/docker.sock
        - name: lib-modules
          mountPath: /lib/modules
          readOnly: true
        - name: proc
          mountPath: /host/proc
        - name: dev
          mountPath: /host/dev
      hostNetwork: true
      hostPID: true
      volumes:
      - name: rancher
        hostPath:
          path: /var/lib/rancher
      - name: rancher-storage
        hostPath:
          path: /var/run/rancher/storage
      - name: rancher-agent-state
        hostPath:
          path: /var/lib/cattle
      - name: docker-socket
        hostPath:
          path: /var/run/docker.sock
      - name: lib-modules
        hostPath:
          path: /lib/modules
      - name: proc
        hostPath:
          path: /proc
      - name: dev
        hostPath:
          path: /dev
  updateStrategy:
    type: RollingUpdate
```

This affords a tiny peek behind the veil at how the Rancher team has made setup so awesomely easy.

Since I saved this config, I’m going to try to be more correct about the location of the API server before I actually run it. I’d rather not use the Cluster IP, so I’m going to see if it’s made available somehow, or whether I should expose it with a Service or something. Welp, Kubernetes documentation to the rescue – the kubernetes DNS name is the preferred way to do it. I’m going to go ahead and replace localhost:8080 with that. After the changes:

```yaml
- name: CATTLE_REGISTRATION_URL
  value: http://kubernetes:8080/v3/scripts/02FF05C9FBD614E8AD60:1483142400000:zA0q1T02D2iJqfi0X2TjhjT5fiY
- name: CATTLE_URL
  value: "http://kubernetes:8080/v3"
```

Note from the future - Again, this is wrong. This URL was supposed to tell the agent how to reach the Rancher API.

OK now that I think I’ve got it, let’s kubectl apply -f the file like they wanted to.

```console
$ kubectl apply -f rancher-generated-kubeneretes-resource.yaml
namespace "cattle-system" created
serviceaccount "rancher" created
clusterrolebinding "rancher" created
secret "rancher-credentials-d01e8bfe" created
pod "cluster-register-d01e8bfe" created
daemonset "rancher-agent" created
```

As you might have hoped/expected, everything goes off without a hitch!

Also, when I went back to the rancher/server deployment I started with and clicked the “Close” button, I was welcomed to Rancher proper! :D

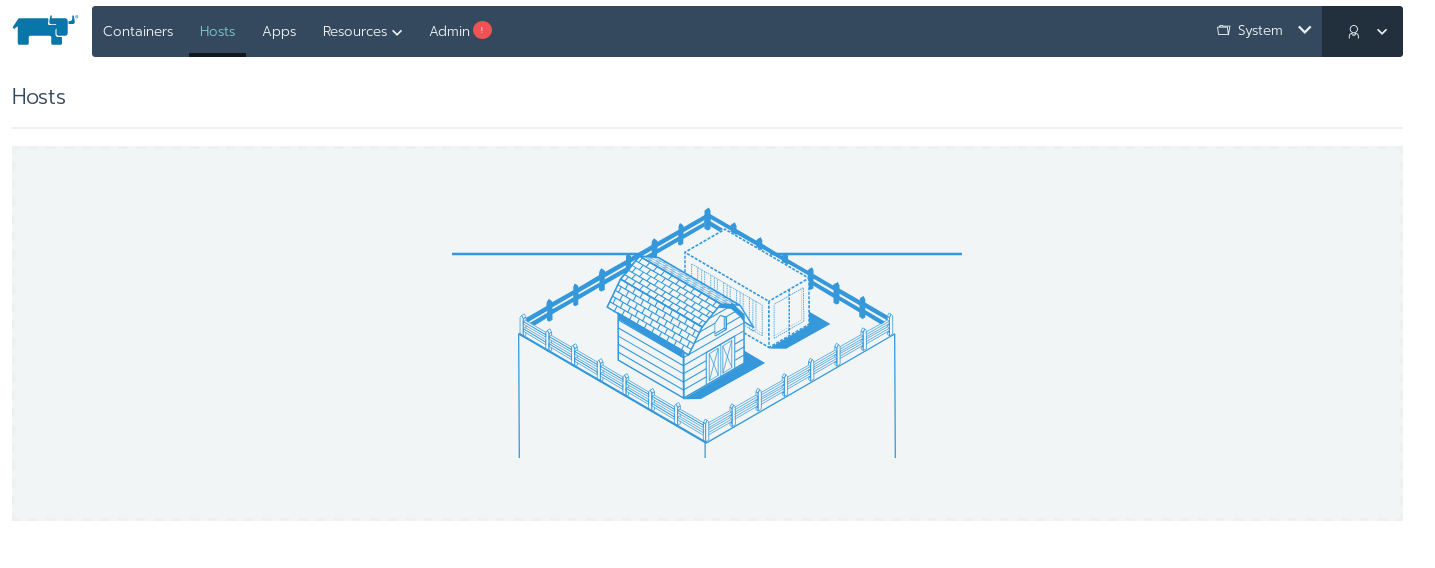

There’s a bit of a hiccup though: it looks like Rancher can’t access things properly or save its state or something – it doesn’t recognize any of my hosts (there’s only one). Once I refreshed, I got sent back to the setup page. I guess it’s time to dive into the logs, starting with rancher/server, and then the resources that were generated (maybe the API server address is wrong; that’s most likely, since I assume all the cluster-related info is coming from there).

Checking the rancher/server logs

After running kubectl logs rancher-812871915-nh9pm and searching up for a bit, I found what is probably the smoking gun:

```
time="2017-09-29T05:50:05Z" level=error msg="Get http://localhost:8080/v3: dial tcp [::1]:8080: getsockopt: connection refused" version=v0.15.17
time="2017-09-29T05:50:05Z" level=fatal msg="Get http://localhost:8080/v3: dial tcp [::1]:8080: getsockopt: connection refused"
```
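Rather than scrolling up through the whole log, filtering for the error and fatal entries surfaces lines like these faster. A small sketch, assuming the level=... fields shown above; rancher_errors is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: show only error/fatal entries from a pod's logs.
# Assumes the "level=error" / "level=fatal" fields seen in the rancher/server output.
rancher_errors() {
  local pod="$1" ns="${2:-default}"
  kubectl logs -n "$ns" "$pod" | grep -E 'level=(error|fatal)'
}
```

For example, rancher_errors rancher-812871915-nh9pm prints just the two lines above.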

It’s weird; I really shouldn’t be seeing localhost:8080 in the logs at all. Let me try changing the configuration; I expect to see it try to hit kubernetes:8080.

Weirdly enough though, if I go to System > Manage Clusters in the top right, it does recognize a Default cluster… So it’s somehow recognizing the cluster but not the host itself? That’s super weird. Maybe I should start checking out the logs for the things it spun up?

Plan of attack (debug really)

First, check that kubernetes:8080 does indeed reach the API, using good ol’ kubectl run test --image=tutum/curl -- sleep 10000 and kubectl exec -it. Welp, it looks like kubernetes:8080 does NOT point to the API server… Not sure why; maybe something’s wrong with how I set my Kubernetes cluster up – I guess I’ll need to use a different way.

If I instead use the ClusterIP of the API server (which is actually the IP of the node itself, so it’s fine to use for now, I guess), I get a connection refused on 8080, and a connection that does work on the port I use for HTTPS. I can’t think of any option I could have set that would restrict the API to HTTPS only, but I’m looking…
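The connection refused on 8080 is probably expected: in many setups the API server’s insecure port only listens on localhost, and in-cluster clients are meant to go through the kubernetes Service over HTTPS (port 443), whose address the kubelet injects into pods as KUBERNETES_SERVICE_HOST and KUBERNETES_SERVICE_PORT. A sketch of reaching it from inside a pod, assuming the image has curl and the default service account token is mounted; the function names are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: reach the Kubernetes API from inside a pod over HTTPS.
# Assumptions: run inside a pod with curl available and the default service account token mounted.

k8s_api_url() {
  # Injected by the kubelet for the "kubernetes" Service; fall back to its DNS name and port 443
  local host="${KUBERNETES_SERVICE_HOST:-kubernetes.default.svc}"
  local port="${KUBERNETES_SERVICE_PORT:-443}"
  echo "https://${host}:${port}"
}

k8s_api_version() {
  local sa=/var/run/secrets/kubernetes.io/serviceaccount
  # Verify the server with the cluster CA and authenticate with the pod's token
  curl -s --cacert "$sa/ca.crt" \
    -H "Authorization: Bearer $(cat "$sa/token")" \
    "$(k8s_api_url)/version"
}
```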

Note from the future - It’s not the Kubernetes API I’m supposed to be accessing/pointing to, it’s the Rancher Server’s. I’m still wandering in the dark here.

Welp, I can’t find anything that’s specifically disabling it… time to go into the API server container itself.

Going back, it looks like the URL wasn’t even really important – CATTLE_URL and CATTLE_REGISTRATION_URL are actually for… Cattle, not the Kubernetes API. What I DO find is broken is that the rancher agent isn’t starting. The DaemonSet from the config didn’t seem to be getting made, but its namespace is cattle-system, so maybe that’s why I hadn’t found it. Sure enough, its pods are thrashing… so that’s it.

kubectl get pods -n cattle-system showed me that the pods are crashing/broken:

```console
$ kubectl get pods -n cattle-system --watch
NAME                        READY     STATUS        RESTARTS   AGE
cluster-register-bacf2810   0/1       Error         0          3m
cluster-register-d01e8bfe   0/1       Error         0          41m
rancher-agent-q58h5         0/1       Terminating   0          41m
```

To get a bit of a fresh start, I kubectl delete -f the saved configuration, and am going to try again, this time with an eye towards the kubectl logs or kubectl describe for the pods in the cattle-system namespace.
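Crash-looping pods tend to disappear between commands, so it helps to grab the describe output and logs for everything in the namespace in one go. A sketch, assuming single-container pods like the ones in the generated config; dump_namespace_pods is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: dump `describe` plus current and previous logs for every pod in a namespace.
dump_namespace_pods() {
  local ns="${1:-cattle-system}" pod
  for pod in $(kubectl get pods -n "$ns" -o jsonpath='{.items[*].metadata.name}'); do
    echo "===== $pod ====="
    kubectl describe pod -n "$ns" "$pod"
    kubectl logs -n "$ns" "$pod"
    # --previous shows the last crashed container's output; it errors if there was no restart
    kubectl logs -n "$ns" "$pod" --previous 2>/dev/null || true
  done
}
```

Redirecting dump_namespace_pods > cattle-debug.txt keeps a snapshot to read at leisure.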

OK, here we go:

```console
$ kubectl logs rancher-agent-qmgth -n cattle-system
Found container ID: 1ef5656ea031160d3ed041c65e1a69790e1399a3d00d9b2a47ff180e132f754c
Checking root: /host/run/runc
Checking file: 057ebc6d604d5e4c38e23b2f6d1f880aac8429454dd3c43620b47a685dce7349
Checking file: 0c98d61fdd6187539452bbd0e312ef25f07fe9a040065019ca4f2f4d59cf8e9a
Checking file: 1273250cc6e47044cf381eac3b742a1089a36d1f3031037efe1173acce4e494d
Checking file: 1a218464dcfe255915494e4a314ea6714037d996b8a2f40267a59546537bc331
Checking file: 1c07e0e37df34d14fb91991cae0d705a0b4169c83b60ec79a5dcbf7b9693ca79
Checking file: 1ef5656ea031160d3ed041c65e1a69790e1399a3d00d9b2a47ff180e132f754c
Found state.json: 1ef5656ea031160d3ed041c65e1a69790e1399a3d00d9b2a47ff180e132f754c
time="2017-09-29T07:02:35Z" level=info msg="Execing [/usr/bin/nsenter --mount=/proc/25224/ns/mnt -F -- /var/lib/docker/overlay/b4b74fb9530c7add7000c380f376343fe2a960241b807eaf4b1099a4d35acc86/merged/usr/bin/share-mnt --stage2 /var/lib/rancher/volumes /var/lib/kubelet -- norun]"
cp: cannot create regular file '/.r/r': No such file or directory
INFO: Starting agent for
/tmp/bootstrap.sh: line 2: paths:: command not found
/tmp/bootstrap.sh: line 3: /apis,: No such file or directory
/tmp/bootstrap.sh: line 4: /apis/,: No such file or directory
/tmp/bootstrap.sh: line 5: /apis/apiextensions.k8s.io,: No such file or directory
/tmp/bootstrap.sh: line 6: /apis/apiextensions.k8s.io/v1beta1,: No such file or directory
/tmp/bootstrap.sh: line 7: /metrics,: No such file or directory
/tmp/bootstrap.sh: line 8: /version: No such file or directory
/tmp/bootstrap.sh: line 9: ]: command not found
INFO: Starting agent for
```

And from there it just loops over and over (the last bit about starting agent)…

Welp, time to go in (kubectl exec -it rancher-agent-qmgth -n cattle-system -- /bin/bash):

For some reason /tmp/bootstrap.sh contains what looks like a JSON document, something like the output the Kubernetes API server would give:

```console
root@localhost:/# cat /tmp/bootstrap.sh
{
  "paths": [
    "/apis",
    "/apis/",
    "/apis/apiextensions.k8s.io",
    "/apis/apiextensions.k8s.io/v1beta1",
    "/metrics",
    "/version"
  ]
}
```

The rancher-agent pod is trying very hard to execute this file. I’m going to kubectl delete -f and take one more hard look at the configuration…

BRAINSTORM - It was asking me how OTHER things are going to access cattle, I think it needs the SERVICE address that the management server is going to use…? YUP, not enough critical reading, here’s the page again:

So what must have been happening is that the rancher-agent container, when it starts up, expects that URL to produce a shell script that goes in /tmp/bootstrap.sh, and then THAT gets run. OK, so now I’m going to change the URL in the resource to be how OTHER containers can get to Rancher – which is http://rancher-svc:8080. After testing with the curl container, the Rancher API is indeed accessible there.
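If that’s right, a bad URL shows up as junk in /tmp/bootstrap.sh: the JSON path listing above is what the Kubernetes API returns, and a request that fails outright would presumably leave the file empty. A hypothetical check that tells those cases apart, assuming a correctly fetched bootstrap file is a shell script starting with "#!" (I haven’t confirmed what Rancher’s script actually looks like):

```shell
#!/usr/bin/env bash
# Hypothetical diagnostic: classify what the agent downloaded into /tmp/bootstrap.sh.
# Assumption: a good response from CATTLE_REGISTRATION_URL is a shell script starting with "#!".
classify_bootstrap() {
  local file="${1:-/tmp/bootstrap.sh}" first
  if [ ! -s "$file" ]; then
    echo "empty: the request probably failed (check DNS / connectivity to Rancher)"
    return
  fi
  # First non-whitespace character of the file
  first="$(tr -d ' \t\r\n' < "$file" | head -c 1)"
  case "$first" in
    '{') echo "json: the URL answered with an API response, not a script" ;;
    '#') echo "script: looks like a shell script" ;;
    *)   echo "unknown: inspect the file by hand" ;;
  esac
}
```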

One more time, I kubectl delete -f, and use the UI to specify the service’s address. This time, I won’t be wgeting and saving the config, I’ll just be copy-pastaing the line that shows up on the page. I DID have to change the rancher-svc in the URL to localhost:8080, since I’m port-forwarding Rancher Server to my local machine, however. The DNS name rancher-svc obviously means nothing to my local computer, but it should mean something to nodes inside the cluster.

I think I’ve just run into ANOTHER issue – I can’t actually resolve rancher-svc from inside the rancher-agent pod (despite it being resolvable from a tutum/curl pod)!

```console
$ kubectl exec -it rancher-agent-fql1m -n cattle-system -- /bin/bash
root@localhost:/# cat /tmp/bootstrap.sh
root@localhost:/# curl
curl: try 'curl --help' or 'curl --manual' for more information
root@localhost:/# curl rancher-svc:8080
curl: (6) Could not resolve host: rancher-svc
root@localhost:/# curl https://rancher-svc:8080
curl: (6) Could not resolve host: rancher-svc
```

Welp, that’s bad news - /tmp/bootstrap.sh is empty (likely because of the failed DNS resolution, and thus the failed request). It’s weird because I can access it from the curl container… I thought the issue might be the different namespaces (cattle-system vs default), so it’s time to move the rancher/server deployment to the cattle-system namespace as well. I also need to manually delete all the resources that were spun up before and re-do everything, just to be sure DNS will propagate properly this time.

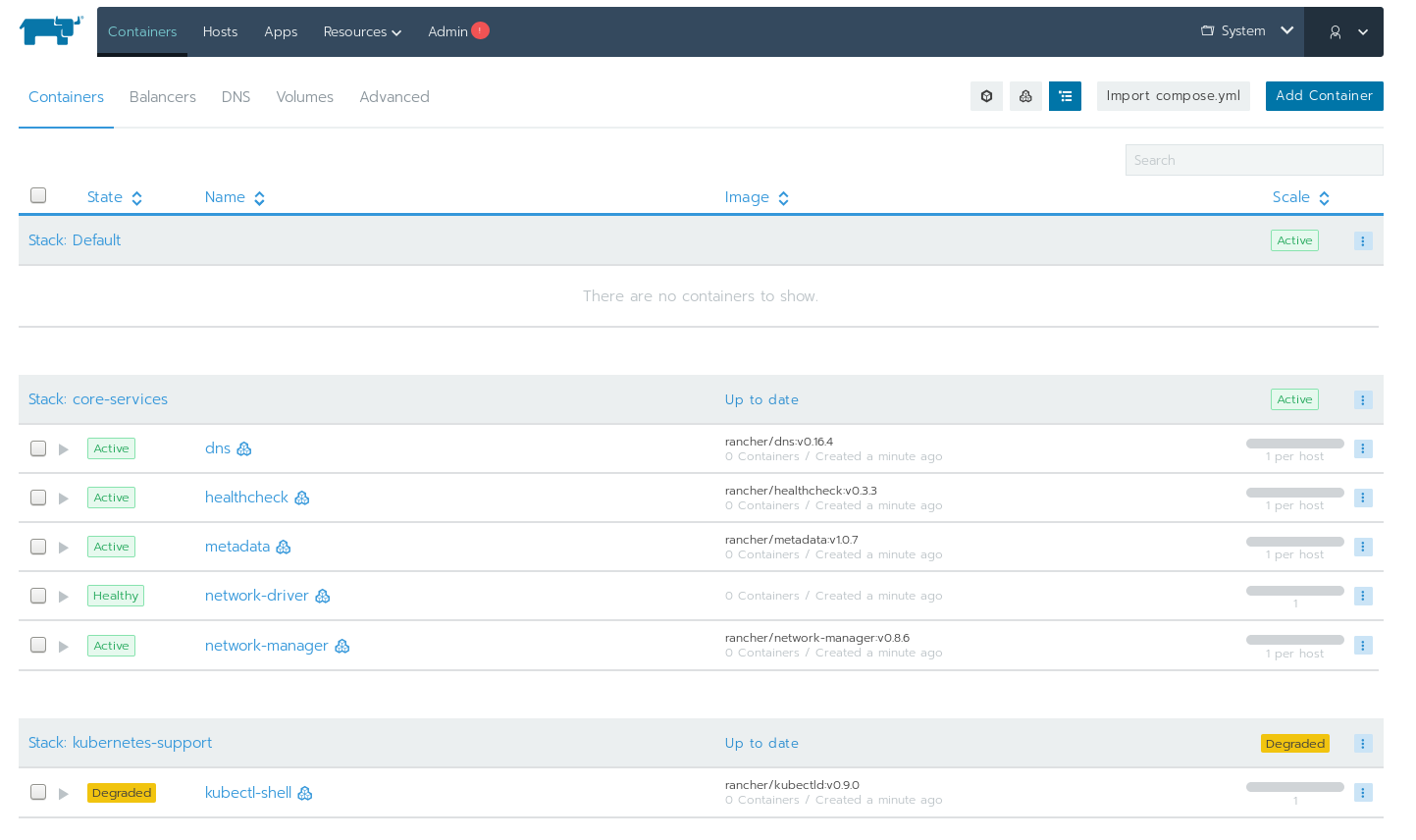

OK, so after doing that, things got better – I can now see much more, and when I visit the main page I get shown running containers:

However, the Rancher hosts page looks stuck or something… Something is still not quite right.

I am also not seeing anything present for the default namespace, and nothing for kube-system either, but I see a whole bunch of seemingly rancher-specific services like dns that aren’t up… Something is definitely off.

When I go into the agent I get this:

```console
$ kubectl exec -it rancher-agent-7tpqn -n cattle-system -- /bin/bash
root@localhost:/# curl http://rancher-svc:8080
curl: (6) Could not resolve host: rancher-svc
```

I STILL can’t access rancher-svc by DNS name for some reason… Guess it wasn’t the namespace that was making it unavailable. Now I’m going to sanity check whether I can still access the service from within a throwaway tutum/curl container, then try using the ClusterIP of the Rancher Server directly for the Rancher Agent.
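One way to narrow this down is to compare the DNS resolvers the two pods are configured with: a pod that can resolve Service names normally lists the cluster DNS Service IP as its nameserver. A sketch with hypothetical helper names (curl-test in the example stands in for whatever the throwaway tutum/curl pod is called):

```shell
#!/usr/bin/env bash
# Hypothetical helpers: compare the DNS resolvers two pods are configured with.

pod_nameservers() {
  local ns="$1" pod="$2"
  # Read the pod's resolv.conf and keep only the nameserver addresses (awk runs locally)
  kubectl exec -n "$ns" "$pod" -- cat /etc/resolv.conf | awk '$1 == "nameserver" { print $2 }'
}

compare_resolvers() {
  # Usage: compare_resolvers <ns-a> <pod-a> <ns-b> <pod-b>
  local a b
  a="$(pod_nameservers "$1" "$2")"
  b="$(pod_nameservers "$3" "$4")"
  if [ "$a" = "$b" ]; then
    echo "same resolvers: $a"
  else
    echo "different resolvers: [$a] vs [$b]"
  fi
}
```

For example, compare_resolvers default curl-test cattle-system rancher-agent-7tpqn; if the agent lists a non-cluster nameserver, it isn’t using cluster DNS at all.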

Is rancher-svc:8080 accessible from a tutum/curl container? Yep, I get a response from the API. So weird that it doesn’t work from inside the actual rancher agent container for some reason.

Will a direct cluster IP work? To test this I:

- wget to get the auto-generated resource config again
- Find the ClusterIP of the rancher pod (kubectl get pods -n cattle-system -o wide)
- Swap that IP into the CATTLE_URL and CATTLE_REGISTRATION_URL env variables before re-applying the config.

A few minutes later, it’s finally working! I see the host that I should see, and everything is working perfectly. It looks like the issue really was the inaccessibility of the Service (I still don’t know why the rancher agent couldn’t find it). It’s also very much possible for me to take the agent configuration and make it part of my resource configs repository, and I think I will. Unfortunately, there’s a Kubernetes Secret right in the config…
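The workaround (look up the pod IP, then edit the two env vars) can be scripted. A sketch, assuming the generated config contains http://<host>/v3 URLs like the ones shown earlier; rancher_pod_ip and rewrite_cattle_host are hypothetical helpers, and note that the address kubectl get pods -o wide shows is the pod’s own IP:

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the direct-IP workaround.

rancher_pod_ip() {
  local ns="${1:-cattle-system}"
  # Same address as the IP column of `kubectl get pods -o wide`;
  # "!type" skips the rancher-agent pods, which are also labelled app=rancher
  kubectl get pods -n "$ns" -l 'app=rancher,!type' -o jsonpath='{.items[0].status.podIP}'
}

rewrite_cattle_host() {
  local new_host="$1"
  # Replace whatever host[:port] precedes /v3 in the config read from stdin
  sed -E "s#http://[^/\"]+/v3#http://${new_host}/v3#g"
}
```

For example: curl -fsSL '<generated config URL>' | rewrite_cattle_host "$(rancher_pod_ip):8080" | kubectl apply -f -, where the URL is whatever the setup page shows.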

Here’s a fully put-together list of exactly what I did to get this working:

1. kubectl apply -f the resource configuration that creates the cattle-system namespace, the rancher-svc service, and the rancher (rancher/server image) deployment/pod.
2. kubectl port-forward rancher-<whatever> 8080:8080 -n cattle-system
3. Open http://localhost:8080 in your browser
4. When setup asks how other nodes should reach Rancher, give it the ClusterIP of the rancher pod (you can find this w/ kubectl get pods -n cattle-system -o wide)
5. Run the kubectl apply -f command it shows, with the host in the URL changed to http://localhost, because the Rancher API is port-forwarded right now. Don’t worry, the ClusterIP will be inside the resource configuration it downloads, and that will be right.

Here’s the full version of the resource configuration that creates the cattle-system namespace and friends beforehand (step 1):

```yaml
---
apiVersion: v1
kind: Namespace
metadata:
  name: cattle-system
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: rancher
  namespace: cattle-system
  labels:
    app: rancher
spec:
  replicas: 1
  template:
    metadata:
      labels:
        app: rancher
    spec:
      containers:
      - name: rancher
        image: rancher/server:v2.0.0-alpha7
        ports:
        - containerPort: 8080
```

NOTE: The Service was removed because it never worked; in the end I had to retrieve the assigned ClusterIP of the rancher server pod directly.

It’s pretty bad that I ended up using the assigned ClusterIP of the rancher server pod here. The Service wasn’t working, and I think that’s because the /etc/hosts file is actually taken from the host machine itself (/etc/hosts wasn’t the usual automatically generated Kubernetes one when I looked inside the rancher-agent pod). This must be something I don’t understand about Rancher yet; it seems to expect to be exposed to the internet rather than be subject to default Kubernetes DNS/routing.

I got real lost in the middle there, mostly due to my own misunderstandings/lack of careful reading of the instructions and how everything fits together, but I’m glad to have Rancher up and running!

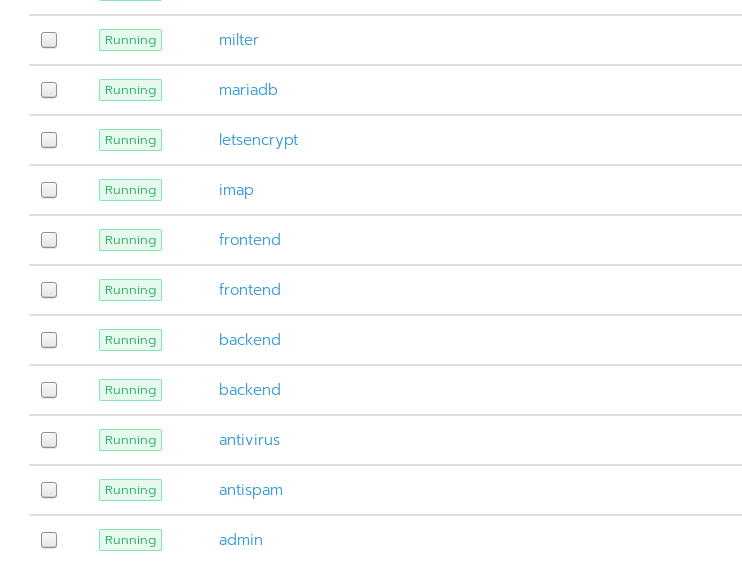

The terminology is a little different so things look a little weird in the UI. For example, all my applications are in one “stack”, and they shouldn’t be. Over the next few days I’ll have to go through my Kubernetes resource configurations for various apps and rename/refactor things to look better. I definitely appreciate having this additional method to access and manipulate my kubernetes cluster (though I’ll be kubectl port-forwarding to Rancher, since there’s no access control set up quite yet). Look at the sorry state of my container lists now:

Luckily I can just rename some containers, and also get them put in different stacks so that these lists are more orderly (BTW in the picture the two frontend/backend containers are for two different applications). It’d also be good for me to start using the environment management features, and making namespaces like staging and production and dev.

Another thing that’s also pretty great (as far as I know right now) is that Cattle’s DNS and other core services seem to be living together in peace with Kubernetes Ingress and its core services, with no particular effort from me. One thing I do wonder is whether Rancher will only ever support using compose.yml from Docker Compose… I sure hope not; I actually very much prefer Kubernetes’s resource configs.

Hope your installation goes much smoother than mine (I’m sure it will, I just seem to run into tons of issues all the time) – Thanks to the Rancher team for making and improving/maintaining such an awesome tool.

As mentioned in the update note up above, there are two main problems with the approach as outlined in this article:

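1. The configuration doesn’t mount a place for rancher/server to store its data.
2. The agent is pointed directly at the ClusterIP of the rancher/server pod, so if you restart rancher/server successfully and the agent is still using the old IP, it’s going to fail.

I recently went to take a look at the Rancher UI and noticed that it wasn’t letting me in – leading me to realize that I had neglected to give it a place to store configuration.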

While the Rancher team calls it an HA setup, if you don’t want to re-configure rancher/server every time it goes down, you need to give it a place to store its data. The documentation is pretty easy to follow; the easiest way is to give it a directory to put its MySQL database data in.

To stop using the ClusterIP directly, I added a Service and set hostNetwork to false for the rancher-agent pods. Feel free to read more about the hostNetwork option in the Kubernetes schema reference, but basically:

> Host networking requested for this pod. Use the host’s network namespace. If this option is set, the ports that will be used must be specified. Default to false.

It’s pretty obvious that this option is what was making the agent unable to resolve rancher-svc.

I suspect that disabling the host-based networking might break rancher’s DNS record/ingress management capabilities, so keep that in mind. Since I still use Kubernetes’ ingress, this isn’t so much of a problem for me.
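If that is a concern, Kubernetes has a DNS policy aimed at exactly this combination: a pod with hostNetwork: true normally gets the node’s resolver, and dnsPolicy: ClusterFirstWithHostNet makes it use cluster DNS anyway. A sketch that restores host networking (as Rancher generated it) and changes only the DNS policy; I haven’t tried this with Rancher, it needs a Kubernetes version that supports that policy, and the helper names are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch (untested with Rancher): keep hostNetwork on the agent but resolve names via cluster DNS.
# Assumes the DaemonSet and namespace names from the generated config.

agent_dns_patch() {
  # Strategic-merge patch body for the DaemonSet's pod template
  echo '{"spec":{"template":{"spec":{"hostNetwork":true,"dnsPolicy":"ClusterFirstWithHostNet"}}}}'
}

use_cluster_dns_for_agent() {
  local ns="${1:-cattle-system}"
  kubectl patch daemonset rancher-agent -n "$ns" -p "$(agent_dns_patch)"
}
```

Because the DaemonSet uses updateStrategy: RollingUpdate, changing the pod template this way should roll the agent pods onto the new setting.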

Here’s the fully updated resource configuration (note that a large part of this needs to be generated by rancher/server after initial setup):

```yaml
---
apiVersion: v1
kind: Service
metadata:
  namespace: cattle-system
  name: rancher-svc
  labels:
    app: rancher
spec:
  selector:
    app: rancher
  ports:
  - name: admin-ui
    port: 8080
    protocol: TCP
---
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: rancher
  namespace: cattle-system
  labels:
    app: rancher
spec:
  replicas: 1
  template:
    metadata:
      labels:
        app: rancher
    spec:
      containers:
      - name: rancher
        image: rancher/server:v2.0.0-alpha7
        ports:
        - containerPort: 8080
        volumeMounts:
        - name: mysql-data
          mountPath: /var/lib/mysql
      volumes:
      - name: mysql-data
        hostPath:
          path: /var/data/rancher/mysql

# EVERYTHING BELOW GENERATED BY RANCHER
---
apiVersion: v1
kind: Namespace
metadata:
  name: cattle-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: rancher
  namespace: cattle-system
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: rancher
  namespace: cattle-system
subjects:
- kind: ServiceAccount
  name: rancher
  namespace: cattle-system
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: v1
kind: Secret
metadata:
  name: rancher-credentials-4cc9c219
  namespace: cattle-system
type: Opaque
data:
  url: <generated by rancher>
  access-key: <generated by rancher>
  secret-key: <generated by rancher>
---
apiVersion: v1
kind: Pod
metadata:
  name: cluster-register-4cc9c219
  namespace: cattle-system
spec:
  serviceAccountName: rancher
  restartPolicy: Never
  containers:
  - name: cluster-register
    image: rancher/cluster-register:v0.1.0
    volumeMounts:
    - name: rancher-credentials
      mountPath: /rancher-credentials
      readOnly: true
  volumes:
  - name: rancher-credentials
    secret:
      secretName: rancher-credentials-4cc9c219
---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: rancher-agent
  namespace: cattle-system
spec:
  template:
    metadata:
      labels:
        app: rancher
        type: agent
    spec:
      containers:
      - name: rancher-agent
        command:
        - /run.sh
        - k8srun
        env:
        - name: CATTLE_ORCHESTRATION
          value: "kubernetes"
        - name: CATTLE_SKIP_UPGRADE
          value: "true"
        - name: CATTLE_REGISTRATION_URL
          value: http://rancher-svc:8080/v3/scripts/D771E4DD65BA6C025CEE:1483142400000:TohjJaXbB9g5w6OpGU6dsg6aic
        - name: CATTLE_URL
          value: "http://rancher-svc:8080/v3"
        - name: CATTLE_NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        image: rancher/agent:v2.0-alpha4
        securityContext:
          privileged: true
        volumeMounts:
        - name: rancher
          mountPath: /var/lib/rancher
        - name: rancher-storage
          mountPath: /var/run/rancher/storage
        - name: rancher-agent-state
          mountPath: /var/lib/cattle
        - name: docker-socket
          mountPath: /var/run/docker.sock
        - name: lib-modules
          mountPath: /lib/modules
          readOnly: true
        - name: proc
          mountPath: /host/proc
        - name: dev
          mountPath: /host/dev
      hostNetwork: false
      hostPID: true
      volumes:
      - name: rancher
        hostPath:
          path: /var/lib/rancher
      - name: rancher-storage
        hostPath:
          path: /var/run/rancher/storage
      - name: rancher-agent-state
        hostPath:
          path: /var/lib/cattle
      - name: docker-socket
        hostPath:
          path: /var/run/docker.sock
      - name: lib-modules
        hostPath:
          path: /lib/modules
      - name: proc
        hostPath:
          path: /proc
      - name: dev
        hostPath:
          path: /dev
  updateStrategy:
    type: RollingUpdate
```

Of course, you’ll need to change/create the appropriate folders on your host, like /var/lib/rancher and /var/data/rancher/mysql.

The test to make sure everything is working here, of course, is killing your rancher/server pod and making sure you DON’T have to re-configure everything. kubectl apply -f <your rancher config>.yaml and kubectl delete pod -n cattle-system <...> are your friends.
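A sketch of that test, assuming the Deployment name, namespace, and labels from the config above; the helper names are hypothetical. It deletes the server pod by name rather than with -l app=rancher, because the rancher-agent pods carry that label too. For the same reason it may be worth checking which endpoints rancher-svc actually picked up: its selector is just app: rancher, which the agent pods also match, so more than one IP in that output would suggest some requests to rancher-svc:8080 land on an agent pod.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the persistence test; names follow the config above.
NS="${NS:-cattle-system}"

server_pod() {
  # "!type" excludes the rancher-agent pods, which are labelled app=rancher,type=agent
  kubectl get pods -n "$NS" -l 'app=rancher,!type' -o jsonpath='{.items[0].metadata.name}'
}

svc_endpoints() {
  # Pod IPs currently behind rancher-svc; ideally just the rancher/server pod
  kubectl get endpoints rancher-svc -n "$NS" -o jsonpath='{.subsets[*].addresses[*].ip}'
}

restart_rancher_server() {
  local pod
  pod="$(server_pod)"
  [ -n "$pod" ] || { echo "no rancher/server pod found in $NS" >&2; return 1; }
  kubectl delete pod -n "$NS" "$pod"
  # Wait for the Deployment to bring a replacement pod up
  kubectl rollout status deployment/rancher -n "$NS"
}
```

After restart_rancher_server returns, reload the UI; if it asks you to set everything up again, the MySQL data isn’t persisting.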