tl;dr - I set up a single instance of statping, configured to monitor uptime for a few sites I maintain.

I’ve written a bunch of times in the past about my k8s cluster (most posts are categorized under “k8s” or “kubernetes”), but during a recent rebuild of the cluster I forgot to bring a rather important project back up. It’s pretty disappointing that this happened at all: I went through the hard work of making all my deployments single-command (using my still-possibly-a-terrible-idea makeinfra pattern), but I don’t keep all my projects and the more general infrastructure concerns in the same repository, so I had to go into each project’s individual -infra repository and run make deploy or something similar. For example, cert-manager (a fantastic tool for automating certificate management on your cluster) lives in the bigger “infrastructure” repository (not tied to any project), while projects like this blog have their own <project>-infra repositories.

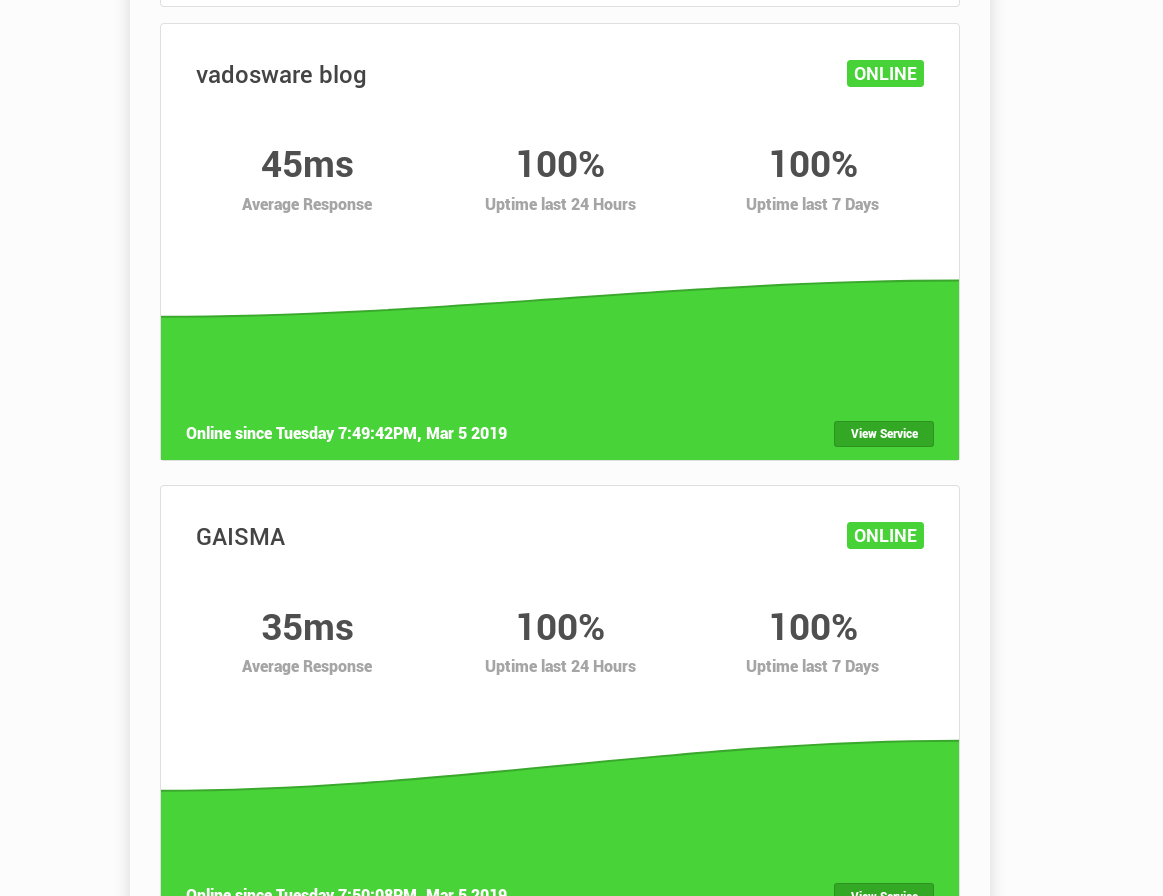

Unfortunately, the project I forgot to redeploy was the landing page for one of my businesses, gaisma.co.jp, and it wasn’t up when a client went to look at it. Of course, I had no idea the site was down, so I only found out when I pointed them to it and they told me. Quite an unwelcome surprise!

So there are a few things I can do to stop this from happening again:

- git submodule the other projects into the main infra repo
- Set up uptime monitoring, so I find out when a site goes down

While the first solution is a good one (and probably something I should do anyway), the second one is what this post is about. I’m going to set up statping, a really nice-looking, self-hostable tool for simple uptime checks on one or more websites.

The real value comes from automated downtime emails: I’m going to hook statping up to the free email account that Gandi provides and use it to email myself whenever a site goes down.

The deployment is pretty simple: I only need one instance of statping for the whole cluster (so no DaemonSet), it’s relatively “stateless” (so no StatefulSet), and I really only need one replica (so technically I could go with a bare Pod, though a Deployment will recreate the pod if it dies). I do want to be able to visit a status.<domain>.<tld> endpoint from the web and see everything at a glance, so I’ll also need a Service and an Ingress.

Here’s what that looks like:

statping/pvc.yaml

```yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: statping-pvc
  namespace: monitoring
  labels:
    app: statping
    tier: monitoring
spec:
  storageClassName: openebs-jiva-non-ha
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 100Mi
```

statping/deployment.yaml

```yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: statping
  namespace: monitoring
spec:
  replicas: 1
  selector:
    matchLabels:
      app: statping
      tier: monitoring
  template:
    metadata:
      labels:
        app: statping
        tier: monitoring
    spec:
      containers:
        - name: statping
          image: hunterlong/statping:v0.80.51
          resources:
            limits:
              memory: 128Mi
              cpu: 100m
          ports:
            - containerPort: 8080
          volumeMounts:
            - mountPath: /app
              name: statping-config
      volumes:
        - name: statping-config
          persistentVolumeClaim:
            claimName: statping-pvc
```
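One tweak worth considering, sketched here as a hypothetical addition rather than part of the setup above: because the PVC is ReadWriteOnce, the default RollingUpdate strategy can stall if the replacement pod lands on a different node while the old pod still holds the volume. Switching to the Recreate strategy avoids that, at the cost of a brief gap during updates. The TCP probes assume only that statping accepts connections on port 8080; the timing values are arbitrary placeholders, not tuned numbers.

```yaml
# Hypothetical fragment to merge into statping/deployment.yaml;
# not a standalone manifest and not part of the original setup.
spec:
  # Recreate terminates the old pod before starting the new one, so the
  # ReadWriteOnce volume is released before the replacement tries to mount it.
  strategy:
    type: Recreate
  template:
    spec:
      containers:
        - name: statping
          # Plain TCP checks against the container port; no HTTP path is assumed.
          readinessProbe:
            tcpSocket:
              port: 8080
            initialDelaySeconds: 5
            periodSeconds: 10
          livenessProbe:
            tcpSocket:
              port: 8080
            initialDelaySeconds: 15
            periodSeconds: 20
```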

statping/svc.yaml

```yaml
---
apiVersion: v1
kind: Service
metadata:
  name: statping
  namespace: monitoring
spec:
  selector:
    app: statping
    tier: monitoring
  ports:
    - protocol: TCP
      port: 80
      targetPort: 8080
```

statping/ingress.yaml

```yaml
---
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: statping
  namespace: monitoring
  annotations:
    ingress.kubernetes.io/ssl-redirect: "true"
    ingress.kubernetes.io/limit-rps: "20"
    ingress.kubernetes.io/proxy-body-size: "10m"
    kubernetes.io/tls-acme: "true"
    kubernetes.io/ingress.class: "traefik"
spec:
  tls:
    - hosts:
        - subdomain.domain.tld
      secretName: statping-tls
  rules:
    - host: subdomain.domain.tld
      http:
        paths:
          - path: /
            backend:
              serviceName: statping
              servicePort: 80
```
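The extensions/v1beta1 Ingress API was removed in Kubernetes 1.22, so on a newer cluster the Ingress manifest above won’t apply. Here is a sketch of the same Ingress against networking.k8s.io/v1 (available since 1.19); the main changes are the nested service backend and the required pathType. The annotations are carried over unchanged. Whether Traefik honors the ingress.kubernetes.io/* ones depends on the Traefik version, so treat them as copied, not verified.

```yaml
---
# Sketch: the statping Ingress rewritten for networking.k8s.io/v1.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: statping
  namespace: monitoring
  annotations:
    ingress.kubernetes.io/ssl-redirect: "true"
    ingress.kubernetes.io/limit-rps: "20"
    ingress.kubernetes.io/proxy-body-size: "10m"
    kubernetes.io/tls-acme: "true"
    # Deprecated in favor of spec.ingressClassName, kept to match the original.
    kubernetes.io/ingress.class: "traefik"
spec:
  tls:
    - hosts:
        - subdomain.domain.tld
      secretName: statping-tls
  rules:
    - host: subdomain.domain.tld
      http:
        paths:
          - path: /
            pathType: Prefix   # required in v1
            backend:
              service:
                name: statping
                port:
                  number: 80
```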

Another project I was hoping to use with this is postmgr (which is almost at version 0.1.0!). I created postmgr to make it easier to run well-configured Postfix servers, but right now it isn’t quite ready for dogfooding (it could stand to be a little more secure and featureful), so for now I’m deferring to a cluster-external SMTP server (Gandi in this case).

After a few make runs (which ran kubectl apply -f under the hood) and some initial setup, I was greeted with a nice uptime dashboard.
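The make targets themselves aren’t shown in this post. As an alternative I didn’t actually use here, kustomize (built into kubectl as kubectl apply -k since 1.14) can apply the same four files in one command. This sketch assumes the files sit together in the statping/ directory and that the monitoring namespace already exists, since none of the manifests above create it.

```yaml
# statping/kustomization.yaml (hypothetical; an alternative to the make targets)
# Apply with: kubectl apply -k statping/
# Assumes the "monitoring" namespace already exists.
apiVersion: kustomize.config.k8s.io/v1beta1
kind: Kustomization
resources:
  - pvc.yaml
  - deployment.yaml
  - svc.yaml
  - ingress.yaml
```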

Check out more about statping at the GitHub repo (thanks to hunterlong and all the contributors for making this awesome tool)! I also filed an issue asking for documentation on config.yml, since I really wanted to be able to pre-populate the config.yml file with a ConfigMap.
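Since statping’s config.yml keys aren’t documented here, the following only sketches the mechanics of pre-populating it: a ConfigMap holding a placeholder config.yml, mounted with subPath so that single file sits inside the PVC-backed /app directory. The name statping-config-file is hypothetical, and the file body is deliberately left as a placeholder rather than guessing at real settings. Two caveats: ConfigMap volumes are read-only, so this could interfere if statping writes config.yml itself during first-run setup, and subPath mounts don’t pick up ConfigMap changes until the pod restarts.

```yaml
---
# Hypothetical ConfigMap; name and contents are placeholders.
apiVersion: v1
kind: ConfigMap
metadata:
  name: statping-config-file
  namespace: monitoring
data:
  config.yml: |
    # placeholder: statping settings would go here
---
# Fragment to merge into statping/deployment.yaml (not a standalone manifest).
spec:
  template:
    spec:
      containers:
        - name: statping
          volumeMounts:
            - mountPath: /app
              name: statping-config
            # Mount only the config.yml key on top of the PVC-backed /app.
            - mountPath: /app/config.yml
              subPath: config.yml
              name: statping-config-file
      volumes:
        - name: statping-config
          persistentVolumeClaim:
            claimName: statping-pvc
        - name: statping-config-file
          configMap:
            name: statping-config-file
```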

This was a quick post, but hopefully it shows how easy it is to deploy a service with k8s and get some real business value out of it.