tl;dr - I got linkerd v1 working on a small 3-tier app on my k8s cluster. Linkerd v1 sports an older (but much more battle-tested) and simpler model in that it runs a proxy on every node as a DaemonSet. Linkerd v2 runs a set of control programs (the “control plane”) and per-application sidecar containers that act as proxies, and while that’s cool I’m not going to run it just yet.

I’ve covered various individual pieces of software that help with “observability”, which is an umbrella term for day 2 concerns like monitoring/tracing/metrics/etc. I’ve written a previous series of blog posts feeling my way around the space a little bit and it’s always been in my notes to try and attack the problem in a smarter way. Integrating observability tools across every language and framework that I might use for future projects is kind of a pain – luckily better ways to do this have emerged, and lately people are calling the approach “service mesh” (here’s a blog post from Buoyant on what that means).

Prior to service mesh, API gateways like Kong (they’ve got a great answer on the difference and they’re supposed to have their own service mesh offering any day now) were a way to solve this as well, you just needed to make sure requests went through the API gateway whenever extra functionality was needed. What people normally refer to when they say service mesh these days is to always forward traffic through a proxy, whether per-node or per-application. This is the main difference between linkerd v1 and linkerd v2 (formerly named Conduit). In linkerd v2, all requests go to light-weight (linkerd2-proxy was built with rust!) “sidecar” containers that run a proxy. linkerd v2 is pretty exciting and has a great future ahead of it IMO, but for now I’m going to be going with the older, more battle-tested linkerd v1 – I want to get a good feel for what the world was like before the new and shiny (I’m kind of skipping the API gateway step though, I never really liked them).

The idea is that linkerd v1 will help with various things – circuit breaking, metrics, retries, tracing, and more if we just make sure to send as much traffic as possible through it. Linkerd v1 is meant to run on every node (so a k8s DaemonSet is what we want), and handle traffic for services that run on that node.
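To make the per-node model a bit more concrete, here is a small Python sketch of the hops a single HTTP request would take. It is only an illustration: the `Workload` type, the workload names, node names and addresses are all made up, and the two port numbers are the http-outgoing and http-incoming router ports from the linkerd config shown later in this post.

```python
from dataclasses import dataclass
from typing import List

# Router ports taken from the linkerd config shown later in this post.
HTTP_OUTGOING_PORT = 4140
HTTP_INCOMING_PORT = 4141


@dataclass(frozen=True)
class Workload:
    """A hypothetical app instance scheduled onto a node."""
    name: str
    node: str
    address: str  # ip:port the app itself listens on


def hops(caller: Workload, target: Workload) -> List[str]:
    """List the network hops for one HTTP request in the proxy-per-node model."""
    return [
        # 1. The app talks to the linkerd running on its own node.
        f"{caller.name} -> linkerd on {caller.node} (outgoing :{HTTP_OUTGOING_PORT})",
        # 2. That linkerd forwards to the linkerd on the target's node.
        f"linkerd on {caller.node} -> linkerd on {target.node} (incoming :{HTTP_INCOMING_PORT})",
        # 3. The target node's linkerd hands the request to the app.
        f"linkerd on {target.node} -> {target.name} at {target.address}",
    ]


if __name__ == "__main__":
    web = Workload("web", "node-a", "10.0.1.5:8080")
    api = Workload("api", "node-b", "10.0.2.7:5000")
    for hop in hops(web, api):
        print(hop)
```

Even when both workloads sit on the same node the request still passes through the outgoing and then the incoming router, which is where the metrics, retries and so on get applied.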

If the earlier linked buoyant post didn’t convince you that a service mesh is a relatively good idea, here’s why I think it is explained by a scenario:

This is where the concept of service meshes comes from – Service Mesh = services behind proxies + proxy coordination/management + observability + Y where Y = other value-added services at the network layer. Depending on what kind of traffic you proxy you can get even more functionality – Proxies like Envoy support features like proxying redis traffic.

Service mesh is actually useful regardless of how you run your services, which are just generally processes running on your computers or someone else’s. If there are a bunch of them and they talk to each other over an imperfect network connection, they should worry about things like circuit breaking and retry logic. If moving these concerns out of your domain (as in Domain Driven Design with the onion architecture) and into an outer layer of your app is one level of enlightenment, surely moving them out of your app entirely is the next! This is the main value proposition of a proxy that sits everywhere and forwards traffic – in exchange for creating another piece of software to run and manage and a possible point of failure, you can sidestep writing/using/enforcing use of whatever suite of libraries makes your services more resilient at the individual level, and solve the problem reasonably well in the aggregate.
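For a sense of what gets moved out of the app, here is a minimal Python sketch of the kind of retry and circuit-breaking code each service would otherwise carry around. This is a deliberately simple policy written for illustration (consecutive-failure counting, fixed cool-down); it is not how linkerd implements either feature, and every name in it is made up.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(Exception):
    """Raised when the breaker is open and calls are being rejected."""


class CircuitBreaker:
    """Opens after `max_failures` consecutive failures, then rejects calls
    until `reset_after` seconds have passed."""

    def __init__(self, max_failures: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock  # injectable so the behaviour can be tested
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open; not calling downstream")
            # Cool-down elapsed: let one trial call through (half-open).
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # any success closes the circuit again
        return result


def call_with_retries(fn: Callable[[], T], attempts: int = 3) -> T:
    """Call `fn` up to `attempts` times, re-raising the last error."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error: Optional[Exception] = None
    for _ in range(attempts):
        try:
            return fn()
        except CircuitOpenError:
            raise  # retrying against an open circuit is pointless
        except Exception as err:
            last_error = err
    assert last_error is not None
    raise last_error
```

Multiply this by every language and HTTP client in use and the appeal of doing it once, in a proxy, becomes pretty clear.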

With kubernetes as my platform layer, there are a few up-and-coming options for how to solve this problem (with some overlap in how they work):

At the time of this blog posting Istio has made its 1.0 release and is considered “production ready”, but in terms of production mileage it can’t hold a candle to linkerd, which has been out in the world and running in production for all this time. The other big difference between the two is the mode of operation – linkerd v1 is proxy-per-node and istio/linkerd v2 are proxy-per-process and control-plane-per-cluster. It’s much easier for me to manage the former so I’m going to be going with trying out linkerd v1. I am dropping some features that are provided by the others on the floor though – istio can be set up to do automatic mutual TLS negotiation with the help of Istio security and SPIFFE. My thinking at this moment is that I should probably try to walk before I start running – my workloads aren’t super critical or security intensive (I don’t really have anyone else’s data).

There was a short time where Linkerd v2 was called Conduit, and I wasn’t sure if it was going to become a completely new thing, but I’m glad they decided to rename it to “linkerd 2”, even if the naming is a little clumsy. At the time I thought it meant that they would move all the battle-testedness of linkerd forward but it looks like what’s actually happening is that the projects stayed separate and just have the same name now. That’s a little disappointing, but then again I’m using their software completely for free and gaining massive value so I almost definitely shouldn’t complain. I’m super excited to add another dashboard I’ll barely look at (only 50% sarcasm), just because it feels like I’m doing things more correctly. Linkerd also has some pretty great support for transparent proxying, which makes me think it’s going to be pretty easy to install/integrate.

I do want to take some time to note that adding this piece is going to add another point of failure/complexity to a pretty stacked list:

To put it simply, if someone tells you “I can’t connect to service X”, you now have at least one more question to ask in your quest to find the root cause, for example “is linkerd misconfigured/down?”. Future me is probably gonna get mad at how much stuff there is that could possibly go wrong but current me is excited. The amount of things I’m about to get for free (easy tracing, circuit breaking, retry logic, panes of glass for request performance/monitoring) seems very worth it to me right now.

I originally was going to write this post as an examination of Envoy (+/- Istio) but I decided to give linkerd the first crack. I’ve noted why several times now but wanted to harp on it just a little more, since I do really want to try out Istio’s paradigm in the future (whether I use linkerd 2 or istio itself).

There is a bit to say about envoy and its growing popularity though – it’s seemingly a super solid proxy both at the edge (of your infrastructure and the WWW) and for a single application and that’s pretty amazing. It handles a bunch of types of traffic (not just HTTP) and has a plugin architecture IIRC. It’s also been used at bonkers scale @ Lyft so everyone is pretty convinced it “can scale”. It’s a pretty huge part of a bunch of the solutions that are out there (it’s basically either the main sidecar proxy or an option, like in consul connect). Envoy’s support for dynamic configuration out of the gate and well-built ride-or-die API support is a huge game changer and is fast becoming the standard for tools I want to put in my arsenal. I’m not using it right now, but I’m pretty confident I’ll work my way back around to it to get a feel for it.

As always the first step is to RTFM. For me this meant reading the linkerd v1 overview and subsequent documentation. If you’re unfamiliar with other parts of this sandwich (ex. Kubernetes), you might want to take time to read up on that too, this article will be here (probably) when you’re done.

One thing I also found myself looking up early on was linkerd support for sending information to jaeger – turns out there’s an issue and it’s basically solved/worked around by using linkerd-zipkin. Ironically, I also use the Zipkin API to interact with my Jaeger instance due to bugs/difficulty getting the jaeger native data formats working.

As the kubernetes getting started guide makes pretty clear, the first step is to install the linkerd DaemonSet. The getting started docs point to a daemonset resource you can kubectl apply -f but I prefer to split out my resources and look a little more closely at them before I deploy to learn more. What I generally do is download the file they link to as something like all-in-one.yaml, then go through and look at/split out individual resource configs. Here’s what that looks like (at the point where I pulled the file):

infra/kubernetes/apps/linkerd/linkerd.ns.yaml

---
apiVersion: v1
kind: Namespace
metadata:
  name: linkerd

infra/kubernetes/apps/linkerd/linkerd.serviceaccount.yaml

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: linkerd

infra/kubernetes/apps/linkerd/linkerd.rbac.yaml

---
# grant linkerd/namerd permissions to enable service discovery
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: linkerd-endpoints-reader
rules:
  - apiGroups: [""] # "" indicates the core API group
    resources: ["endpoints", "services", "pods"] # pod access is required for the *-legacy.yml examples in this folder
    verbs: ["get", "watch", "list"]
  - apiGroups: [ "extensions" ]
    resources: [ "ingresses" ]
    verbs: ["get", "watch", "list"]
---
# grant namerd permissions to custom resource definitions in k8s 1.8+ and third party resources in k8s < 1.8 for dtab storage
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: namerd-dtab-storage
rules:
  - apiGroups: ["l5d.io"]
    resources: ["dtabs"]
    verbs: ["get", "watch", "list", "update", "create"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: linkerd-role-binding
subjects:
  - kind: ServiceAccount
    name: linkerd
    namespace: default
roleRef:
  kind: ClusterRole
  name: linkerd-endpoints-reader
  apiGroup: rbac.authorization.k8s.io
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: namerd-role-binding
subjects:
  - kind: ServiceAccount
    name: linkerd
    namespace: default
roleRef:
  kind: ClusterRole
  name: namerd-dtab-storage
  apiGroup: rbac.authorization.k8s.io

infra/kubernetes/apps/linkerd/linkerd.ds.yaml

---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  labels:
    app: l5d
  name: l5d
  namespace: linkerd
spec:
  template:
    metadata:
      labels:
        app: l5d
    spec:
      # I uncommented the line below because I use CNI... Who doesn't??
      hostNetwork: true # Uncomment to use host networking (eg for CNI)
      containers:
      - name: l5d
        image: buoyantio/linkerd:1.4.6
        args:
        - /io.buoyant/linkerd/config/config.yaml
        env:
        - name: POD_IP
          valueFrom:
            fieldRef:
              fieldPath: status.podIP
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        ports:
        - name: http-outgoing
          containerPort: 4140
          hostPort: 4140
        - name: http-incoming
          containerPort: 4141
        - name: h2-outgoing
          containerPort: 4240
          hostPort: 4240
        - name: h2-incoming
          containerPort: 4241
        - name: grpc-outgoing
          containerPort: 4340
          hostPort: 4340
        - name: grpc-incoming
          containerPort: 4341
        ## Initially I won't be using the http ingress -- linkerd won't be able to bind to 80 because traefik is there.
        ## I'm also a little unsure why there's no containerPort binding for TLS connections but I'll look at that later
        # - name: http-ingress
        #   containerPort: 80
        # - name: h2-ingress
        #   containerPort: 8080
        volumeMounts:
        - name: "l5d-config"
          mountPath: "/io.buoyant/linkerd/config"
          readOnly: true
      # Run `kubectl proxy` as a sidecar to give us authenticated access to the
      # Kubernetes API.
      - name: kubectl
        image: buoyantio/kubectl:v1.8.5
        args:
        - "proxy"
        - "-p"
        - "8001"
      volumes:
      - name: l5d-config
        configMap:
          name: "l5d-config"

infra/kubernetes/apps/linkerd/linkerd.svc.yaml

---
apiVersion: v1
kind: Service
metadata:
  name: l5d
  namespace: linkerd
spec:
  selector:
    app: l5d
  type: LoadBalancer
  ports:
  - name: http-outgoing
    port: 4140
  - name: http-incoming
    port: 4141
  - name: h2-outgoing
    port: 4240
  - name: h2-incoming
    port: 4241
  - name: grpc-outgoing
    port: 4340
  - name: grpc-incoming
    port: 4341
  ## No ingress for now (see note in daemonset)
  # - name: http-ingress
  #   port: 80
  # - name: h2-ingress
  #   port: 8080

infra/kubernetes/apps/linkerd/linkerd.configmap.yaml

################################################################################
# Linkerd Service Mesh
#
# This is a basic Kubernetes config file to deploy a service mesh of Linkerd
# instances onto your Kubernetes cluster that is capable of handling HTTP,
# HTTP/2 and gRPC calls with some reasonable defaults.
#
# To configure your applications to use Linkerd for HTTP traffic you can set the
# `http_proxy` environment variable to `$(NODE_NAME):4140` where `NODE_NAME` is
# the name of node on which the application instance is running. The
# `NODE_NAME` environment variable can be set with the downward API.
#
# If your application does not support the `http_proxy` environment variable or
# if you want to configure your application to use Linkerd for HTTP/2 or gRPC
# traffic, you must configure your application to send traffic directly to
# Linkerd:
#
# * $(NODE_NAME):4140 for HTTP
# * $(NODE_NAME):4240 for HTTP/2
# * $(NODE_NAME):4340 for gRPC
#
# If you are sending HTTP or HTTP/2 traffic directly to Linkerd, you must set
# the Host/Authority header to `<service>` or `<service>.<namespace>` where
# `<service>` and `<namespace>` are the names of the service and namespace
# that you want to proxy to. If unspecified, `<namespace>` defaults to
# `default`.
#
# If your application receives HTTP, HTTP/2, and/or gRPC traffic it must have a
# Kubernetes Service object with ports named `http`, `h2`, and/or `grpc`
# respectively.
#
# You can deploy this to your Kubernetes cluster by running:
#   kubectl create ns linkerd
#   kubectl apply -n linkerd -f servicemesh.yml
#
# There are sections of this config that can be uncommented to enable:
# * CNI compatibility
# * Automatic retries
# * Zipkin tracing
################################################################################
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: l5d-config
  namespace: linkerd
data:
  config.yaml: |-
    admin:
      ip: 0.0.0.0
      port: 9990

    # Namers provide Linkerd with service discovery information. To use a
    # namer, you reference it in the dtab by its prefix. We define 4 namers:
    # * /io.l5d.k8s gets the address of the target app
    # * /io.l5d.k8s.http gets the address of the http-incoming Linkerd router on the target app's node
    # * /io.l5d.k8s.h2 gets the address of the h2-incoming Linkerd router on the target app's node
    # * /io.l5d.k8s.grpc gets the address of the grpc-incoming Linkerd router on the target app's node
    namers:
    - kind: io.l5d.k8s
    - kind: io.l5d.k8s
      prefix: /io.l5d.k8s.http
      transformers:
      # The daemonset transformer replaces the address of the target app with
      # the address of the http-incoming router of the Linkerd daemonset pod
      # on the target app's node.
      - kind: io.l5d.k8s.daemonset
        namespace: linkerd
        port: http-incoming
        service: l5d
        hostNetwork: true # Uncomment if using host networking (eg for CNI)
    - kind: io.l5d.k8s
      prefix: /io.l5d.k8s.h2
      transformers:
      # The daemonset transformer replaces the address of the target app with
      # the address of the h2-incoming router of the Linkerd daemonset pod
      # on the target app's node.
      - kind: io.l5d.k8s.daemonset
        namespace: linkerd
        port: h2-incoming
        service: l5d
        hostNetwork: true # Uncomment if using host networking (eg for CNI)
    - kind: io.l5d.k8s
      prefix: /io.l5d.k8s.grpc
      transformers:
      # The daemonset transformer replaces the address of the target app with
      # the address of the grpc-incoming router of the Linkerd daemonset pod
      # on the target app's node.
      - kind: io.l5d.k8s.daemonset
        namespace: linkerd
        port: grpc-incoming
        service: l5d
        hostNetwork: true # Uncomment if using host networking (eg for CNI)
    - kind: io.l5d.rewrite
      prefix: /portNsSvcToK8s
      pattern: "/{port}/{ns}/{svc}"
      name: "/k8s/{ns}/{port}/{svc}"

    # Telemeters export metrics and tracing data about Linkerd, the services it
    # connects to, and the requests it processes.
    telemetry:
    - kind: io.l5d.prometheus # Expose Prometheus style metrics on :9990/admin/metrics/prometheus
    - kind: io.l5d.recentRequests
      sampleRate: 0.25 # Tune this sample rate before going to production
    # - kind: io.l5d.zipkin # Uncomment to enable exporting of zipkin traces
    #   host: zipkin-collector.default.svc.cluster.local # Zipkin collector address
    #   port: 9410
    #   sampleRate: 1.0 # Set to a lower sample rate depending on your traffic volume

    # Usage is used for anonymized usage reporting. You can set the orgId to
    # identify your organization or set `enabled: false` to disable entirely.
    usage:
      enabled: false
      #orgId: linkerd-examples-servicemesh

    # Routers define how Linkerd actually handles traffic. Each router listens
    # for requests, applies routing rules to those requests, and proxies them
    # to the appropriate destinations. Each router is protocol specific.
    # For each protocol (HTTP, HTTP/2, gRPC) we define an outgoing router and
    # an incoming router. The application is expected to send traffic to the
    # outgoing router which proxies it to the incoming router of the Linkerd
    # running on the target service's node. The incoming router then proxies
    # the request to the target application itself. We also define HTTP and
    # HTTP/2 ingress routers which act as Ingress Controllers and route based
    # on the Ingress resource.
    routers:
    - label: http-outgoing
      protocol: http
      servers:
      - port: 4140
        ip: 0.0.0.0
      # This dtab looks up service names in k8s and falls back to DNS if they're
      # not found (e.g. for external services). It accepts names of the form
      # "service" and "service.namespace", defaulting the namespace to
      # "default". For DNS lookups, it uses port 80 if unspecified. Note that
      # dtab rules are read bottom to top. To see this in action, on the Linkerd
      # administrative dashboard, click on the "dtab" tab, select "http-outgoing"
      # from the dropdown, and enter a service name like "a.b". (Or click on the
      # "requests" tab to see recent traffic through the system and how it was
      # resolved.)
      dtab: |
        /ph => /$/io.buoyant.rinet ; # /ph/80/google.com -> /$/io.buoyant.rinet/80/google.com
        /svc => /ph/80 ; # /svc/google.com -> /ph/80/google.com
        /svc => /$/io.buoyant.porthostPfx/ph ; # /svc/google.com:80 -> /ph/80/google.com
        /k8s => /#/io.l5d.k8s.http ; # /k8s/default/http/foo -> /#/io.l5d.k8s.http/default/http/foo
        /portNsSvc => /#/portNsSvcToK8s ; # /portNsSvc/http/default/foo -> /k8s/default/http/foo
        /host => /portNsSvc/http/default ; # /host/foo -> /portNsSvc/http/default/foo
        /host => /portNsSvc/http ; # /host/default/foo -> /portNsSvc/http/default/foo
        /svc => /$/io.buoyant.http.domainToPathPfx/host ; # /svc/foo.default -> /host/default/foo
      client:
        kind: io.l5d.static
        configs:
        # Use HTTPS if sending to port 443
        - prefix: "/$/io.buoyant.rinet/443/{service}"
          tls:
            commonName: "{service}"

    - label: http-incoming
      protocol: http
      servers:
      - port: 4141
        ip: 0.0.0.0
      interpreter:
        kind: default
        transformers:
        - kind: io.l5d.k8s.localnode
          # hostNetwork: true # Uncomment if using host networking (eg for CNI)
      dtab: |
        /k8s => /#/io.l5d.k8s ; # /k8s/default/http/foo -> /#/io.l5d.k8s/default/http/foo
        /portNsSvc => /#/portNsSvcToK8s ; # /portNsSvc/http/default/foo -> /k8s/default/http/foo
        /host => /portNsSvc/http/default ; # /host/foo -> /portNsSvc/http/default/foo
        /host => /portNsSvc/http ; # /host/default/foo -> /portNsSvc/http/default/foo
        /svc => /$/io.buoyant.http.domainToPathPfx/host ; # /svc/foo.default -> /host/default/foo

    - label: h2-outgoing
      protocol: h2
      servers:
      - port: 4240
        ip: 0.0.0.0
      dtab: |
        /ph => /$/io.buoyant.rinet ; # /ph/80/google.com -> /$/io.buoyant.rinet/80/google.com
        /svc => /ph/80 ; # /svc/google.com -> /ph/80/google.com
        /svc => /$/io.buoyant.porthostPfx/ph ; # /svc/google.com:80 -> /ph/80/google.com
        /k8s => /#/io.l5d.k8s.h2 ; # /k8s/default/h2/foo -> /#/io.l5d.k8s.h2/default/h2/foo
        /portNsSvc => /#/portNsSvcToK8s ; # /portNsSvc/h2/default/foo -> /k8s/default/h2/foo
        /host => /portNsSvc/h2/default ; # /host/foo -> /portNsSvc/h2/default/foo
        /host => /portNsSvc/h2 ; # /host/default/foo -> /portNsSvc/h2/default/foo
        /svc => /$/io.buoyant.http.domainToPathPfx/host ; # /svc/foo.default -> /host/default/foo
      client:
        kind: io.l5d.static
        configs:
        # Use HTTPS if sending to port 443
        - prefix: "/$/io.buoyant.rinet/443/{service}"
          tls:
            commonName: "{service}"

    - label: h2-incoming
      protocol: h2
      servers:
      - port: 4241
        ip: 0.0.0.0
      interpreter:
        kind: default
        transformers:
        - kind: io.l5d.k8s.localnode
          # hostNetwork: true # Uncomment if using host networking (eg for CNI)
      dtab: |
        /k8s => /#/io.l5d.k8s ; # /k8s/default/h2/foo -> /#/io.l5d.k8s/default/h2/foo
        /portNsSvc => /#/portNsSvcToK8s ; # /portNsSvc/h2/default/foo -> /k8s/default/h2/foo
        /host => /portNsSvc/h2/default ; # /host/foo -> /portNsSvc/h2/default/foo
        /host => /portNsSvc/h2 ; # /host/default/foo -> /portNsSvc/h2/default/foo
        /svc => /$/io.buoyant.http.domainToPathPfx/host ; # /svc/foo.default -> /host/default/foo

    - label: grpc-outgoing
      protocol: h2
      servers:
      - port: 4340
        ip: 0.0.0.0
      identifier:
        kind: io.l5d.header.path
        segments: 1
      dtab: |
        /hp => /$/inet ; # /hp/linkerd.io/8888 -> /$/inet/linkerd.io/8888
        /svc => /$/io.buoyant.hostportPfx/hp ; # /svc/linkerd.io:8888 -> /hp/linkerd.io/8888
        /srv => /#/io.l5d.k8s.grpc/default/grpc; # /srv/service/package -> /#/io.l5d.k8s.grpc/default/grpc/service/package
        /svc => /$/io.buoyant.http.domainToPathPfx/srv ; # /svc/package.service -> /srv/service/package
      client:
        kind: io.l5d.static
        configs:
        # Always use TLS when sending to external grpc servers
        - prefix: "/$/inet/{service}"
          tls:
            commonName: "{service}"

    - label: gprc-incoming
      protocol: h2
      servers:
      - port: 4341
        ip: 0.0.0.0
      identifier:
        kind: io.l5d.header.path
        segments: 1
      interpreter:
        kind: default
        transformers:
        - kind: io.l5d.k8s.localnode
          # hostNetwork: true # Uncomment if using host networking (eg for CNI)
      dtab: |
        /srv => /#/io.l5d.k8s/default/grpc ; # /srv/service/package -> /#/io.l5d.k8s/default/grpc/service/package
        /svc => /$/io.buoyant.http.domainToPathPfx/srv ; # /svc/package.service -> /srv/service/package

    # HTTP Ingress Controller listening on port 80
    - protocol: http
      label: http-ingress
      servers:
      - port: 80
        ip: 0.0.0.0
        clearContext: true
      identifier:
        kind: io.l5d.ingress
      dtab: /svc => /#/io.l5d.k8s

    # HTTP/2 Ingress Controller listening on port 8080
    - protocol: h2
      label: h2-ingress
      servers:
      - port: 8080
        ip: 0.0.0.0
        clearContext: true
      identifier:
        kind: io.l5d.ingress
      dtab: /svc => /#/io.l5d.k8s
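The "dtab rules are read bottom to top" note in the config is easier to follow with a toy model. The Python sketch below walks the http-incoming dtab the way the inline comments describe: the bottom-most matching rule is tried first, and an earlier rule is only used if that attempt goes nowhere. It is a simplification written from those comments, not linkerd's real resolver: it pretends every k8s service exists, and it hard-codes only the two helpers this dtab uses (`domainToPathPfx` and the `/portNsSvcToK8s` rewrite namer). The function names are made up.

```python
from typing import List, Optional, Tuple

Rule = Tuple[str, str]  # (prefix, destination)

# The http-incoming dtab from the ConfigMap, top to bottom.
DTAB: List[Rule] = [
    ("/k8s", "/#/io.l5d.k8s"),
    ("/portNsSvc", "/#/portNsSvcToK8s"),
    ("/host", "/portNsSvc/http/default"),
    ("/host", "/portNsSvc/http"),
    ("/svc", "/$/io.buoyant.http.domainToPathPfx/host"),
]

DOMAIN_TO_PATH = "/$/io.buoyant.http.domainToPathPfx/"
REWRITE_NAMER = "/#/portNsSvcToK8s/"
K8S_NAMER = "/#/io.l5d.k8s/"


def has_prefix(path: str, prefix: str) -> bool:
    """True if `prefix` matches whole leading path segments of `path`."""
    return path == prefix or path.startswith(prefix + "/")


def resolve(path: str, depth: int = 0) -> Optional[str]:
    """Return the bound name for `path`, or None if nothing matches."""
    if depth > 20:  # guard against rule loops in this toy model
        return None
    if path.startswith(K8S_NAMER):
        return path  # toy assumption: every k8s service exists
    if path.startswith(DOMAIN_TO_PATH):
        # .../host/foo.default -> /host/default/foo (domain parts reversed)
        pfx, _, domain = path[len(DOMAIN_TO_PATH):].partition("/")
        segments = "/".join(reversed(domain.split(".")))
        return resolve(f"/{pfx}/{segments}", depth + 1)
    if path.startswith(REWRITE_NAMER):
        # pattern "/{port}/{ns}/{svc}" -> name "/k8s/{ns}/{port}/{svc}"
        parts = path[len(REWRITE_NAMER):].split("/")
        if len(parts) != 3:
            return None  # pattern does not match; caller falls back
        port, ns, svc = parts
        return resolve(f"/k8s/{ns}/{port}/{svc}", depth + 1)
    # Later (lower) rules take precedence, so walk the table in reverse.
    for prefix, dest in reversed(DTAB):
        if has_prefix(path, prefix):
            found = resolve(dest + path[len(prefix):], depth + 1)
            if found is not None:
                return found
    return None


if __name__ == "__main__":
    for name in ("/svc/foo.default", "/svc/foo"):
        print(name, "->", resolve(name))
```

The interesting case is `/svc/foo`: the lower `/host` rule produces a path with only two segments, the rewrite pattern cannot match it, and resolution falls back to the upper `/host` rule, which is how the namespace ends up defaulting to `default`.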

There’s quite a bit that was new to me in the ConfigMap so I took some time to read through it. I definitely didn’t understand all of it, but there’s a great deep dive talk I need to rewatch which should shed some more light. The note at the top is not mine, it came from their all-in-one resource config – I just left it in the configmap since I figure that’s where I’d be looking if things went wrong. To summarize, things are more work if your app doesn’t support http_proxy (you have to point it at linkerd directly and set the Host header yourself), and you have to make sure to name your services’ ports properly.
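Here is a rough Python sketch of the two client-side options the note at the top of the ConfigMap describes: setting `http_proxy` from `NODE_NAME`, or sending the request straight to the node-local linkerd with the Host header naming the target service. The node name `node-1` and service name `users.default` are made up, and the `http://` scheme on the proxy URL is my assumption (the note only gives `$(NODE_NAME):4140`). Nothing is actually sent over the network here.

```python
import os
import urllib.request
from typing import MutableMapping

# http-outgoing router port from the ConfigMap.
LINKERD_HTTP_OUTGOING_PORT = 4140


def linkerd_proxy_url(node_name: str,
                      port: int = LINKERD_HTTP_OUTGOING_PORT) -> str:
    """URL of the linkerd instance running on the given node."""
    return f"http://{node_name}:{port}"


def configure_http_proxy(
        environ: MutableMapping[str, str] = os.environ) -> str:
    """Option 1: set http_proxy from NODE_NAME (injected via the downward API).

    HTTP clients that honor http_proxy will then route through linkerd
    without any further code changes.
    """
    proxy = linkerd_proxy_url(environ["NODE_NAME"])
    environ["http_proxy"] = proxy
    return proxy


def request_via_linkerd(node_name: str, service: str,
                        path: str = "/") -> urllib.request.Request:
    """Option 2: build (but do not send) a request aimed directly at linkerd.

    The URL points at the node-local linkerd; the Host header carries the
    `<service>` or `<service>.<namespace>` name linkerd should route to.
    """
    url = f"{linkerd_proxy_url(node_name)}{path}"
    return urllib.request.Request(url, headers={"Host": service})


if __name__ == "__main__":
    env = {"NODE_NAME": "node-1"}  # hypothetical node name
    print(configure_http_proxy(env))
    req = request_via_linkerd("node-1", "users.default", "/health")
    print(req.full_url, req.get_header("Host"))
```

Passing the built request to `urllib.request.urlopen` would send it; the explicit Host header is kept rather than being replaced with the linkerd address.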

It looks like I need to look at more documentation and see what other traffic linkerd can proxy – in particular how it proxies TLS traffic, and maybe even database traffic (if it can do it), for the future. BTW If you’re a bit confused about those paths, I use the makeinfra pattern which somewhat explains it – in the apps/linkerd folder I also have a Makefile:

.PHONY: install uninstall \
	ns serviceaccount rbac configmap ds svc

KUBECTL := kubectl

install: ns serviceaccount rbac configmap ds svc

rbac:
	$(KUBECTL) apply -f linkerd.rbac.yaml

configmap:
	$(KUBECTL) apply -f linkerd.configmap.yaml

ds:
	$(KUBECTL) apply -f linkerd.ds.yaml

ns:
	$(KUBECTL) apply -f linkerd.ns.yaml

serviceaccount:
	$(KUBECTL) apply -f linkerd.serviceaccount.yaml

svc:
	$(KUBECTL) apply -f linkerd.svc.yaml

uninstall:
	$(KUBECTL) delete -f linkerd.svc.yaml
	$(KUBECTL) delete -f linkerd.ds.yaml
	$(KUBECTL) delete -f linkerd.rbac.yaml
	$(KUBECTL) delete -f linkerd.serviceaccount.yaml

OK, time to install this baby into the actual cluster – for me that means a super easy make command in the linkerd folder. To ensure everything was running, you can either use the one liner on the linkerd documentation:

kubectl -n linkerd port-forward $(kubectl -n linkerd get pod -l app=l5d -o jsonpath='{.items[0].metadata.name}') 9990 &

Or, you can do what I did and just do the steps manually (to find out that things aren’t going well):

$ k get pods -n linkerd
NAME        READY   STATUS             RESTARTS   AGE
l5d-fn4p4   1/2     CrashLoopBackOff   2          1m

Of course, nothing’s ever that easy! Let’s find out why it’s crash looping:

$ k logs l5d-fn4p4 l5d -n linkerd
-XX:+AggressiveOpts -XX:+AlwaysPreTouch -XX:+CMSClassUnloadingEnabled -XX:CMSInitiatingOccupancyFraction=70 -XX:+CMSParallelRemarkEnabled -XX:+CMSScavengeBeforeRemark -XX:InitialHeapSize=33554432 -XX:MaxHeapSize=1073741824 -XX:MaxNewSize=357916672 -XX:MaxTenuringThreshold=6 -XX:OldPLABSize=16 -XX:+PerfDisableSharedMem -XX:+PrintCommandLineFlags -XX:+ScavengeBeforeFullGC -XX:-TieredCompilation -XX:+UseCMSInitiatingOccupancyOnly -XX:+UseCompressedClassPointers -XX:+UseCompressedOops -XX:+UseConcMarkSweepGC -XX:+UseParNewGC -XX:+UseStringDeduplication
Sep 22, 2018 2:33:07 AM com.twitter.finagle.http.HttpMuxer$ $anonfun$new$1
INFO: HttpMuxer[/admin/metrics.json] = com.twitter.finagle.stats.MetricsExporter(com.twitter.finagle.stats.MetricsExporter)
Sep 22, 2018 2:33:07 AM com.twitter.finagle.http.HttpMuxer$ $anonfun$new$1
INFO: HttpMuxer[/admin/per_host_metrics.json] = com.twitter.finagle.stats.HostMetricsExporter(com.twitter.finagle.stats.HostMetricsExporter)
I 0922 02:33:08.105 UTC THREAD1: linkerd 1.4.6 (rev=3d083135e65b24d2d8bbadb5eba1773752b7e3ae) built at 20180813-141940
I 0922 02:33:08.564 UTC THREAD1: Finagle version 18.7.0 (rev=271f741636e03171d61180f03f6cbfe532de1396) built at 20180710-150313
I 0922 02:33:10.289 UTC THREAD1: tracer: io.buoyant.telemetry.recentRequests.RecentRequetsTracer@557d873b
I 0922 02:33:10.511 UTC THREAD1: Tracer: com.twitter.finagle.zipkin.thrift.ScribeZipkinTracer
I 0922 02:33:10.571 UTC THREAD1: Resolver[inet] = com.twitter.finagle.InetResolver(com.twitter.finagle.InetResolver@52b46d52)
I 0922 02:33:10.571 UTC THREAD1: Resolver[fixedinet] = com.twitter.finagle.FixedInetResolver(com.twitter.finagle.FixedInetResolver@7327a447)
I 0922 02:33:10.572 UTC THREAD1: Resolver[neg] = com.twitter.finagle.NegResolver$(com.twitter.finagle.NegResolver$@2954f6ab)
I 0922 02:33:10.573 UTC THREAD1: Resolver[nil] = com.twitter.finagle.NilResolver$(com.twitter.finagle.NilResolver$@58fbd02e)
I 0922 02:33:10.573 UTC THREAD1: Resolver[fail] = com.twitter.finagle.FailResolver$(com.twitter.finagle.FailResolver$@163042ea)
I 0922 02:33:10.574 UTC THREAD1: Resolver[flag] = com.twitter.server.FlagResolver(com.twitter.server.FlagResolver@11d045b4)
I 0922 02:33:10.574 UTC THREAD1: Resolver[zk] = com.twitter.finagle.zookeeper.ZkResolver(com.twitter.finagle.zookeeper.ZkResolver@1fbf088b)
I 0922 02:33:10.574 UTC THREAD1: Resolver[zk2] = com.twitter.finagle.serverset2.Zk2Resolver(com.twitter.finagle.serverset2.Zk2Resolver@1943c1f2)
I 0922 02:33:11.164 UTC THREAD1: serving http admin on /0.0.0.0:9990
I 0922 02:33:11.196 UTC THREAD1: serving http-outgoing on /0.0.0.0:4140
I 0922 02:33:11.231 UTC THREAD1: serving http-incoming on /0.0.0.0:4141
I 0922 02:33:11.275 UTC THREAD1: serving h2-outgoing on /0.0.0.0:4240
I 0922 02:33:11.293 UTC THREAD1: serving h2-incoming on /0.0.0.0:4241
I 0922 02:33:11.311 UTC THREAD1: serving grpc-outgoing on /0.0.0.0:4340
I 0922 02:33:11.326 UTC THREAD1: serving gprc-incoming on /0.0.0.0:4341
I 0922 02:33:11.369 UTC THREAD1: serving http-ingress on /0.0.0.0:80
java.net.BindException: Failed to bind to /0.0.0.0:80: Address already in use
    at com.twitter.finagle.netty4.ListeningServerBuilder$$anon$1.<init>(ListeningServerBuilder.scala:159)
    at com.twitter.finagle.netty4.ListeningServerBuilder.bindWithBridge(ListeningServerBuilder.scala:72)
    at com.twitter.finagle.netty4.Netty4Listener.listen(Netty4Listener.scala:94)
    at com.twitter.finagle.server.StdStackServer.newListeningServer(StdStackServer.scala:72)
    at com.twitter.finagle.server.StdStackServer.newListeningServer$(StdStackServer.scala:60)
    at com.twitter.finagle.Http$Server.newListeningServer(Http.scala:408)
    at com.twitter.finagle.server.ListeningStackServer$$anon$1.<init>(ListeningStackServer.scala:78)
    at com.twitter.finagle.server.ListeningStackServer.serve(ListeningStackServer.scala:37)
    at com.twitter.finagle.server.ListeningStackServer.serve$(ListeningStackServer.scala:36)
    at com.twitter.finagle.Http$Server.superServe(Http.scala:564)
    at com.twitter.finagle.Http$Server.serve(Http.scala:575)
    at io.buoyant.linkerd.ProtocolInitializer$ServerInitializer.serve(ProtocolInitializer.scala:169)
    at io.buoyant.linkerd.Main$.$anonfun$initRouter$1(Main.scala:96)
    at scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:234)
    at scala.collection.immutable.List.foreach(List.scala:378)
    at scala.collection.TraversableLike.map(TraversableLike.scala:234)
    at scala.collection.TraversableLike.map$(TraversableLike.scala:227)
    at scala.collection.immutable.List.map(List.scala:284)
    at io.buoyant.linkerd.Main$.initRouter(Main.scala:94)
    at io.buoyant.linkerd.Main$.$anonfun$main$3(Main.scala:46)
    at scala.collection.TraversableLike.$anonfun$map$1(TraversableLike.scala:234)
    at scala.collection.immutable.List.foreach(List.scala:378)
    at scala.collection.TraversableLike.map(TraversableLike.scala:234)
    at scala.collection.TraversableLike.map$(TraversableLike.scala:227)
    at scala.collection.immutable.List.map(List.scala:284)
    at io.buoyant.linkerd.Main$.main(Main.scala:46)
    at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
    at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
    at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
    at java.lang.reflect.Method.invoke(Method.java:498)
    at com.twitter.app.App.$anonfun$nonExitingMain$4(App.scala:364)
    at scala.Option.foreach(Option.scala:257)
    at com.twitter.app.App.nonExitingMain(App.scala:363)
    at com.twitter.app.App.nonExitingMain$(App.scala:344)
    at io.buoyant.linkerd.Main$.nonExitingMain(Main.scala:20)
    at com.twitter.app.App.main(App.scala:333)
    at com.twitter.app.App.main$(App.scala:331)
    at io.buoyant.linkerd.Main$.main(Main.scala:20)
    at io.buoyant.linkerd.Main.main(Main.scala)
Exception thrown in main on startup
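The BindException at the bottom is the JVM surfacing the operating system's "address already in use" error: with hostNetwork: true the pod shares the node's network namespace, so port 80 in the pod is port 80 on the node. The same failure can be reproduced in a few lines of Python. This is only an illustration; `port_is_free` is a made-up helper, not part of any linkerd or Kubernetes tooling.

```python
import errno
import socket


def port_is_free(port: int, host: str = "0.0.0.0") -> bool:
    """Try to bind host:port; False means something already holds it.

    Other errors are re-raised on purpose (for example EACCES when an
    unprivileged user tries to bind a port below 1024).
    """
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
        try:
            probe.bind((host, port))
        except OSError as err:
            if err.errno == errno.EADDRINUSE:
                return False
            raise
        return True


if __name__ == "__main__":
    # Stand-in for the process already listening on the node; port 0 asks
    # the OS for any free port so this demo does not need root.
    holder = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    holder.bind(("127.0.0.1", 0))
    holder.listen()
    taken = holder.getsockname()[1]
    print(taken, "free?", port_is_free(taken, "127.0.0.1"))
    holder.close()
    print(taken, "free after close?", port_is_free(taken, "127.0.0.1"))
```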

Well, that makes sense – I definitely don’t want linkerd being my ingress right now – traefik is already running on port 80 and this would absolutely disrupt everything. It looks like I’ll have to disable all the “CNI” areas of the config, because I can’t have linkerd try and take over port 80 just yet. At this point I had to go back and change the configuration I noted earlier. This meant editing:

- the hostNetwork: true line in the DaemonSet
- everywhere else hostNetwork: true was specified
- the routers that needed hostNetwork (80 & 8080)

After changing this stuff I re-ran make to redeploy everything, then had to delete the existing linkerd pod (l5d-fn4p4) to let it try and re-deploy. With that set the output is much nicer:

$ k get pods -n linkerd
NAME        READY   STATUS    RESTARTS   AGE
l5d-8bxq5   2/2     Running   0          5s

And the logs from the pod:

$ k logs l5d-8bxq5 -c l5d -n linkerd
-XX:+AggressiveOpts -XX:+AlwaysPreTouch -XX:+CMSClassUnloadingEnabled -XX:CMSInitiatingOccupancyFraction=70 -XX:+CMSParallelRemarkEnabled -XX:+CMSScavengeBeforeRemark -XX:InitialHeapSize=33554432 -XX:MaxHeapSize=1073741824 -XX:MaxNewSize=357916672 -XX:MaxTenuringThreshold=6 -XX:OldPLABSize=16 -XX:+PerfDisableSharedMem -XX:+PrintCommandLineFlags -XX:+ScavengeBeforeFullGC -XX:-TieredCompilation -XX:+UseCMSInitiatingOccupancyOnly -XX:+UseCompressedClassPointers -XX:+UseCompressedOops -XX:+UseConcMarkSweepGC -XX:+UseParNewGC -XX:+UseStringDeduplication
Sep 22, 2018 2:39:50 AM com.twitter.finagle.http.HttpMuxer$ $anonfun$new$1
INFO: HttpMuxer[/admin/metrics.json] = com.twitter.finagle.stats.MetricsExporter(com.twitter.finagle.stats.MetricsExporter)
Sep 22, 2018 2:39:50 AM com.twitter.finagle.http.HttpMuxer$ $anonfun$new$1
INFO: HttpMuxer[/admin/per_host_metrics.json] = com.twitter.finagle.stats.HostMetricsExporter(com.twitter.finagle.stats.HostMetricsExporter)
I 0922 02:39:51.119 UTC THREAD1: linkerd 1.4.6 (rev=3d083135e65b24d2d8bbadb5eba1773752b7e3ae) built at 20180813-141940
I 0922 02:39:51.575 UTC THREAD1: Finagle version 18.7.0 (rev=271f741636e03171d61180f03f6cbfe532de1396) built at 20180710-150313
I 0922 02:39:53.235 UTC THREAD1: tracer: io.buoyant.telemetry.recentRequests.RecentRequetsTracer@55a07409
I 0922 02:39:53.441 UTC THREAD1: Tracer: com.twitter.finagle.zipkin.thrift.ScribeZipkinTracer
I 0922 02:39:53.505 UTC THREAD1: Resolver[inet] = com.twitter.finagle.InetResolver(com.twitter.finagle.InetResolver@52b46d52)
I 0922 02:39:53.506 UTC THREAD1: Resolver[fixedinet] = com.twitter.finagle.FixedInetResolver(com.twitter.finagle.FixedInetResolver@7327a447)
I 0922 02:39:53.506 UTC THREAD1: Resolver[neg] = com.twitter.finagle.NegResolver$(com.twitter.finagle.NegResolver$@2954f6ab)
I 0922 02:39:53.507 UTC THREAD1: Resolver[nil] = com.twitter.finagle.NilResolver$(com.twitter.finagle.NilResolver$@58fbd02e)
I 0922 02:39:53.507 UTC THREAD1: Resolver[fail] = com.twitter.finagle.FailResolver$(com.twitter.finagle.FailResolver$@163042ea)
I 0922 02:39:53.507 UTC THREAD1: Resolver[flag] = com.twitter.server.FlagResolver(com.twitter.server.FlagResolver@11d045b4)
I 0922 02:39:53.507 UTC THREAD1: Resolver[zk] = com.twitter.finagle.zookeeper.ZkResolver(com.twitter.finagle.zookeeper.ZkResolver@1fbf088b)
I 0922 02:39:53.508 UTC THREAD1: Resolver[zk2] = com.twitter.finagle.serverset2.Zk2Resolver(com.twitter.finagle.serverset2.Zk2Resolver@1943c1f2)
I 0922 02:39:54.055 UTC THREAD1: serving http admin on /0.0.0.0:9990

I 0922 02:39:54.069 UTC THREAD1: serving http-outgoing on /0.0.0.0:4140

I 0922 02:39:54.101 UTC THREAD1: serving http-incoming on /0.0.0.0:4141

I 0922 02:39:54.134 UTC THREAD1: serving h2-outgoing on /0.0.0.0:4240

I 0922 02:39:54.155 UTC THREAD1: serving h2-incoming on /0.0.0.0:4241

I 0922 02:39:54.171 UTC THREAD1: serving grpc-outgoing on /0.0.0.0:4340

I 0922 02:39:54.184 UTC THREAD1: serving gprc-incoming on /0.0.0.0:4341

I 0922 02:39:54.226 UTC THREAD1: serving http-ingress on /0.0.0.0:80

I 0922 02:39:54.259 UTC THREAD1: serving h2-ingress on /0.0.0.0:8080

I 0922 02:39:54.269 UTC THREAD1: initialized

It’s a little weird, because I’m definitely using CNI, but I can’t afford to just let linkerd take over my node mappings just yet – so I think everything’s still fine, I just have to direct requests to linkerd as if it was just another deployment/service, which is fine by me. I’m not sure exactly why “CNI” configurations need hostNetwork, CNI the acronym doesn’t explain it fully – guess I’ll look into this later when it bites me in the ass?

OK, now let’s kubectl port-forward to get into the container and look at the dashboard! Here’s the command I ran:

$ k port-forward l5d-8bxq5 9990:9990 -n linkerd

Forwarding from 127.0.0.1:9990 -> 9990

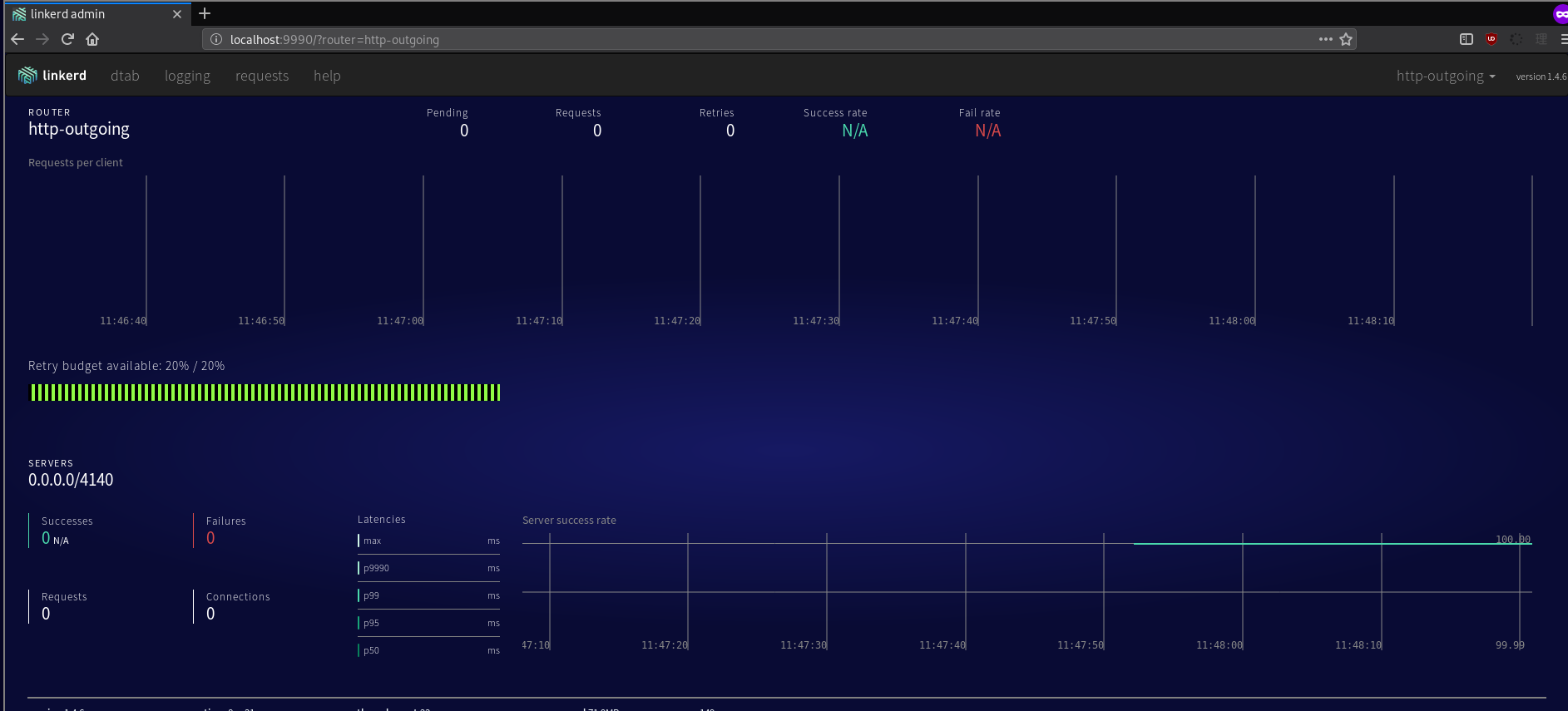

And here’s the dashboard:

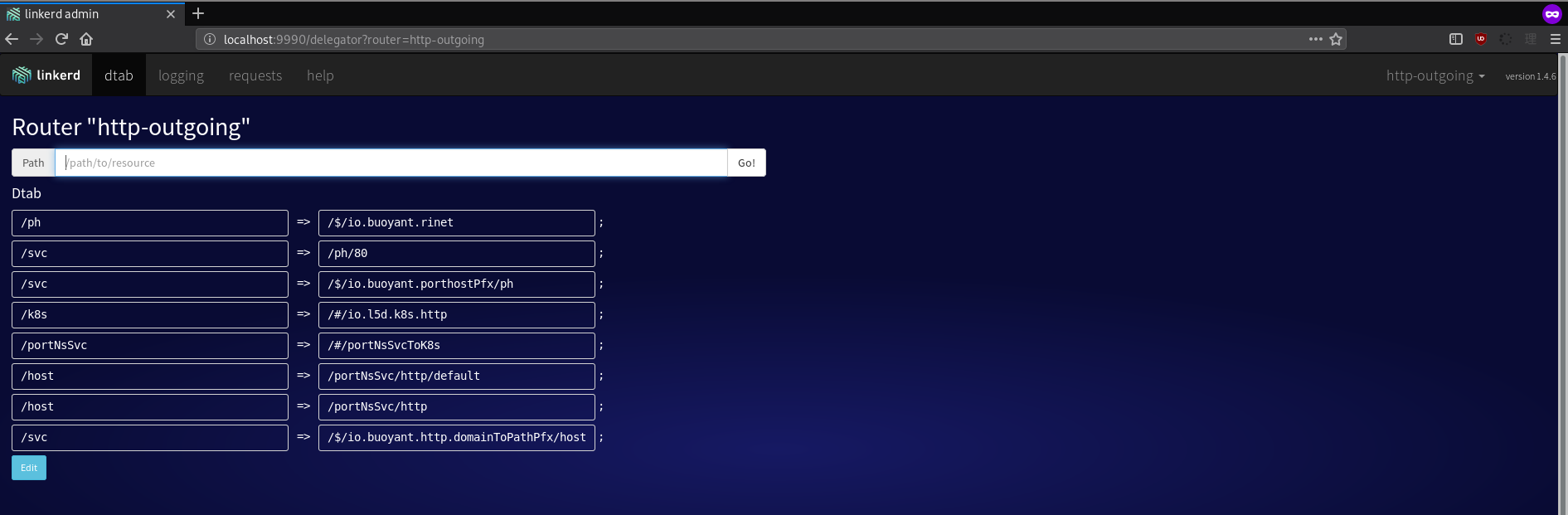

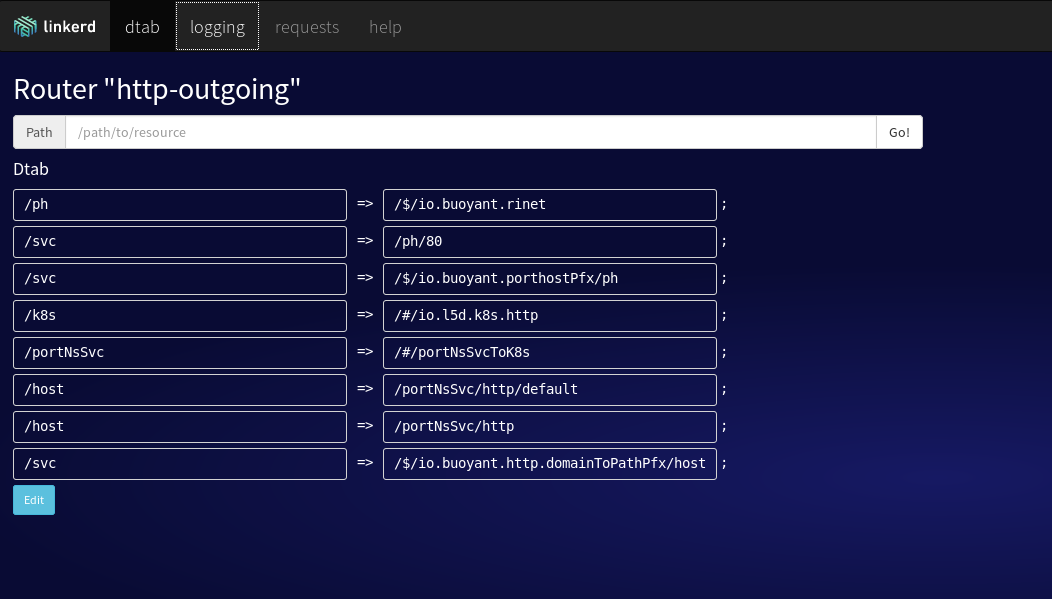

And a peek into the dtabs listing page (dtabs are extremely important in the linkerd world):

Awesome! I don’t see any blatant errors, so I’m going to assume everything is fine…

As is obvious from the events of the previous sections – in addition to service-to-service communication, linkerd can also be used as an ingress controller (as stated in the documentation). I currently use and am very happy with traefik as my ingress (and it works properly with all my other installed tooling), so I’m not going to try and do all my ingress through linkerd, but it seems like something that would be pretty cool.

One of the great things about kubernetes is that you can have multiple ingress controllers running at the same time, but unfortunately only one ingress controller can bind to the host’s port 80 and 443 (if that’s what it’s trying to do), so I can’t use host networking for now, and as a result I can’t use linkerd as my ingress. I am pretty sure I can point traefik at linkerd however, by changing the ingresses for my apps to point to linkerd’s Service rather than the apps’ Service(s). For now I’m electing to only proxy to linkerd for internal service-to-service/pod traffic.

Assuming everything is fine is well and good of course, but before I try to intercept/proxy traffic between my actual app’s frontend (NGINX-served) and back (Haskell REST-ish API), it’s a good idea to try and test on some unrelated containers first. What I can think to do is make a container with an HTTP server and one that does nothing but send requests to the other container over and over and see the requests proxied through linkerd. We’re going to use some containers that make this super easy for us: paddycarey/go-echo for the HTTP server and plavignotte/continuous-curl for the client.

Honorable mention to kennship/http-echo – it’s way more downloaded but like 4x the size of go-echo (NodeJS packaging vs Golang packaging). Anyway, let’s get on to just running these containers, but making sure to http_proxy them – I really hope http_proxy works because integrating with linkerd is kind of a huge PITA without.

server-pod.allinone.yaml

---
apiVersion: v1
kind: Pod
metadata:
  name: l5d-test-server
  labels:
    app: l5d-test
spec:
  containers:
    - name: server
      image: paddycarey/go-echo
      imagePullPolicy: IfNotPresent
      ports:
        - name: http
          containerPort: 8000
---
apiVersion: v1
kind: Service
metadata:
  name: l5d-test-server
spec:
  selector:
    app: l5d-test
  ports:
    - name: http
      port: 80
      targetPort: 8000
      protocol: TCP

client-pod.yaml

---
apiVersion: v1
kind: Pod
metadata:
  name: l5d-test-client
  labels:
    app: l5d-test
spec:
  containers:
    - name: server
      image: plavignotte/continuous-curl
      imagePullPolicy: IfNotPresent
      args:
        - "l5d-test-server:8000"
      env:
        - name: DEBUG
          value: "1"
        - name: LOOP
          value: "10" # curl every 10 seconds
        - name: NODE_NAME
          valueFrom:
            fieldRef:
              fieldPath: spec.nodeName
        - name: http_proxy
          ## this really should be going to the node name, but we couldn't use the host network in setting that up
          ## because I wasn't ready to use linkerd as my ingress controller, and didn't actually turn it off (if you can)
          ## Should work fine I just point it at the linkerd service though
          # value: $(NODE_NAME):4140
          value: l5d.linkerd.svc.cluster.local:4140
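To make the effect of http_proxy a bit more concrete, here is a minimal Python sketch of what a proxy-aware client such as curl does with that variable: it connects to the proxy address instead of the target, and sends the full URL in the request line. The helper name `proxied_request` is made up for illustration, and treating a scheme-less proxy value as plain http is an assumption on my part.

```python
from urllib.parse import urlsplit


def proxied_request(url, http_proxy):
    """Return (connect_host, connect_port, request_line, host_header)."""
    # A bare "host:port" proxy value has no scheme, so add one before parsing.
    if "://" not in http_proxy:
        http_proxy = "http://" + http_proxy
    proxy = urlsplit(http_proxy)
    target = urlsplit(url)
    path = target.path or "/"
    # With a proxy, the request line carries the absolute URL, not just the path.
    request_line = f"GET {target.scheme}://{target.netloc}{path} HTTP/1.1"
    return proxy.hostname, proxy.port, request_line, f"Host: {target.netloc}"


host, port, line, header = proxied_request(
    "http://l5d-test-server/", "l5d.linkerd.svc.cluster.local:4140"
)
print(host, port)
print(line)
print(header)
```

The TCP connection goes to the l5d Service on 4140, while the Host header still names the service the client actually wanted, which is presumably what linkerd routes on.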

NOTE It took a little bit of experimentation to end up at the right/working URLs/configuration, and the paragraphs below will show some of the steps I went through.

Initially everything was misconfigured and the proxy was picking up requests but they were all failing:

This is bad because obviously I don’t expect the curl to fail every 5 seconds, but it’s encouraging because it means that linkerd is indeed being proxied to correctly at least! Question now is to figure out what’s going wrong with the client and why the requests are failing. Off the top of my head I can think of two things:

First thing I did was to shell (kubectl exec -it l5d-test-client /bin/sh) into the box and try curling to see what happened:

/ # curl l5d-test-server -v

* Rebuilt URL to: l5d-test-server/

* Trying 10.97.153.232...

* Connected to l5d.linkerd.svc.cluster.local (10.97.153.232) port 4140 (#0)

> GET http://l5d-test-server/ HTTP/1.1

> Host: l5d-test-server

> User-Agent: curl/7.49.1

> Accept: */*

>

>

< HTTP/1.1 502 Bad Gateway

< l5d-err: Exceeded+10.seconds+binding+timeout+while+resolving+name%3A+%2Fsvc%2Fl5d-test-server

< Content-Length: 78

< Content-Type: text/plain

<

* Connection #0 to host l5d.linkerd.svc.cluster.local left intact

Exceeded 10.seconds binding timeout while resolving name: /svc/l5d-test-server

/ #

Whoa, so this is actually a timeout on the side of linkerd itself! It translated my request for l5d-test-server to /svc/l5d-test-server. Maybe I need to be more thorough in my URL to make the translation better, I don’t see namespace specified and I’m pretty sure the dtab rules were more specific than just the service name! So I went in all the way and tried a super-specific service:

/ # curl l5d-test-server.default.svc.cluster.local -v

* Rebuilt URL to: l5d-test-server.default.svc.cluster.local/

* Trying 10.97.153.232...

* Connected to l5d.linkerd.svc.cluster.local (10.97.153.232) port 4140 (#0)

> GET http://l5d-test-server.default.svc.cluster.local/ HTTP/1.1

> Host: l5d-test-server.default.svc.cluster.local

> User-Agent: curl/7.49.1

> Accept: */*

>

< HTTP/1.1 502 Bad Gateway

< l5d-err: Exceeded+10.seconds+binding+timeout+while+resolving+name%3A+%2Fsvc%2Fl5d-test-server.default.svc.cluster.local

< Content-Length: 104

< Content-Type: text/plain

<

* Connection #0 to host l5d.linkerd.svc.cluster.local left intact

Exceeded 10.seconds binding timeout while resolving name: /svc/l5d-test-server.default.svc.cluster.local
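The l5d-err header looks like the same error message as the response body, just encoded with + for spaces and %XX escapes. A small Python sketch for decoding it when all you have is the header (the variable names are mine, the header value is copied from the output above):

```python
from urllib.parse import unquote_plus

raw = (
    "Exceeded+10.seconds+binding+timeout+while+resolving+name%3A+"
    "%2Fsvc%2Fl5d-test-server.default.svc.cluster.local"
)

# unquote_plus turns "+" into spaces and decodes the %XX escapes.
decoded = unquote_plus(raw)
print(decoded)

# The part after "name: " is the service name linkerd was trying to bind.
service_name = decoded.rsplit("name: ", 1)[1]
print(service_name)
```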

No dice still :(. Time to start examining my linkerd configuration – the service must not have been properly registered with linkerd when it came up – otherwise linkerd should be able to find it. Looking at the dtab page I didn’t see anything obvious:

OK, so maybe there was a problem registering the service in the first place? Let’s check the linkerd logs, surely it would have noted something about a service that it needed to watch coming up?

$ k logs -f l5d-8bxq5 -c l5d -n linkerd

--- TONS OF LOGS ---

E 0922 08:59:50.010 UTC THREAD50: retrying k8s request to /api/v1/namespaces/default/endpoints/l5d-test-server on unexpected response code 403 with message {"kind":"Status","apiVersion":"v1","metadata":{},"status":"Failure","message":"endpoints \"l5d-test-server\" is forbidden: User \"system:serviceaccount:linkerd:default\" cannot get endpoints in the namespace \"default\"","reason":"Forbidden","details":{"name":"l5d-test-server","kind":"endpoints"},"code":403}

E 0922 08:59:50.020 UTC THREAD50: retrying k8s request to /api/v1/namespaces/default/endpoints/l5d-test-server on unexpected response code 403 with message {"kind":"Status","apiVersion":"v1","metadata":{},"status":"Failure","message":"endpoints \"l5d-test-server\" is forbidden: User \"system:serviceaccount:linkerd:default\" cannot get endpoints in the namespace \"default\"","reason":"Forbidden","details":{"name":"l5d-test-server","kind":"endpoints"},"code":403}

E 0922 08:59:50.037 UTC THREAD50: retrying k8s request to /api/v1/namespaces/svc/endpoints/default on unexpected response code 403 with message {"kind":"Status","apiVersion":"v1","metadata":{},"status":"Failure","message":"endpoints \"default\" is forbidden: User \"system:serviceaccount:linkerd:default\" cannot get endpoints in the namespace \"svc\"","reason":"Forbidden","details":{"name":"default","kind":"endpoints"},"code":403}

Well that’s pretty obvious, I made a mistake by creating a service account that was bound to the namespace linkerd and not using it explicitly from the linkerd DaemonSet (this is fixed in the resource configs above). At first it looked weird to me that linkerd would be using a service account from the default namespace – the pod started by the DaemonSet should be using the namespace it started in, not the namespace of the workload (the test server/client are being run in default, the linkerd DaemonSet and Pods are in linkerd). Strictly speaking, though, system:serviceaccount:linkerd:default names the default service account in the linkerd namespace, which is what a pod falls back to when no serviceAccountName is set. I found an old GitHub issue along with a Buoyant post on the issue – it looks like the linkerd service account needs to be in the default namespace. I just went back and removed all mention of namespaces from the ServiceAccount and other RBAC-related resources…

Weirdly enough, even after changing all the namespaces to match, and also adding ClusterRoleBindings for the default namespace, things were still broken – the same errors kept coming up – system:serviceaccount:linkerd:default couldn’t look at endpoints in the namespace default. Of course, I’d forgotten to change serviceAccountName on the linkerd DaemonSet itself to linkerd. After doing that, I manually killed the DS pod and let it get restarted and saw some better output:
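Kubernetes service account usernames have the form system:serviceaccount:<namespace>:<name>, so the user in that 403 can be pulled apart mechanically. A small Python sketch, with the Status body copied from the log line above (the regexes and variable names are just mine, for illustration):

```python
import json
import re

# Status body copied from the 403 log line above.
status = json.loads(
    '{"kind":"Status","apiVersion":"v1","metadata":{},"status":"Failure",'
    '"message":"endpoints \\"l5d-test-server\\" is forbidden: User '
    '\\"system:serviceaccount:linkerd:default\\" cannot get endpoints in the '
    'namespace \\"default\\"","reason":"Forbidden",'
    '"details":{"name":"l5d-test-server","kind":"endpoints"},"code":403}'
)

message = status["message"]

# Who was denied: system:serviceaccount:<namespace>:<name>
sa_namespace, sa_name = re.search(
    r'User "system:serviceaccount:([^:"]+):([^:"]+)"', message
).groups()

# Where the denied read was attempted.
target_namespace = re.search(r'in the namespace "([^"]+)"', message).group(1)

print(f"service account {sa_name!r} in namespace {sa_namespace!r}")
print(f"denied on {status['details']['kind']} in namespace {target_namespace!r}")
```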

$ k logs -f l5d-8ww5j -c l5d -n linkerd

-XX:+AggressiveOpts -XX:+AlwaysPreTouch -XX:+CMSClassUnloadingEnabled -XX:CMSInitiatingOccupancyFraction=70 -XX:+CMSParallelRemarkEnabled -XX:+CMSScavengeBeforeRemark -XX:InitialHeapSize=33554432 -XX:MaxHeapSize=1073741824 -XX:MaxNewSize=357916672 -XX:MaxTenuringThreshold=6 -XX:OldPLABSize=16 -XX:+PerfDisableSharedMem -XX:+PrintCommandLineFlags -XX:+ScavengeBeforeFullGC -XX:-TieredCompilation -XX:+UseCMSInitiatingOccupancyOnly -XX:+UseCompressedClassPointers -XX:+UseCompressedOops -XX:+UseConcMarkSweepGC -XX:+UseParNewGC -XX:+UseStringDeduplication

Sep 22, 2018 9:42:20 AM com.twitter.finagle.http.HttpMuxer$ $anonfun$new$1

INFO: HttpMuxer[/admin/metrics.json] = com.twitter.finagle.stats.MetricsExporter(com.twitter.finagle.stats.MetricsExporter)

Sep 22, 2018 9:42:20 AM com.twitter.finagle.http.HttpMuxer$ $anonfun$new$1

INFO: HttpMuxer[/admin/per_host_metrics.json] = com.twitter.finagle.stats.HostMetricsExporter(com.twitter.finagle.stats.HostMetricsExporter)

I 0922 09:42:21.194 UTC THREAD1: linkerd 1.4.6 (rev=3d083135e65b24d2d8bbadb5eba1773752b7e3ae) built at 20180813-141940

I 0922 09:42:21.674 UTC THREAD1: Finagle version 18.7.0 (rev=271f741636e03171d61180f03f6cbfe532de1396) built at 20180710-150313

I 0922 09:42:23.398 UTC THREAD1: tracer: io.buoyant.telemetry.recentRequests.RecentRequetsTracer@6ff2adb

I 0922 09:42:23.616 UTC THREAD1: Tracer: com.twitter.finagle.zipkin.thrift.ScribeZipkinTracer

I 0922 09:42:23.681 UTC THREAD1: Resolver[inet] = com.twitter.finagle.InetResolver(com.twitter.finagle.InetResolver@52b46d52)

I 0922 09:42:23.682 UTC THREAD1: Resolver[fixedinet] = com.twitter.finagle.FixedInetResolver(com.twitter.finagle.FixedInetResolver@7327a447)

I 0922 09:42:23.682 UTC THREAD1: Resolver[neg] = com.twitter.finagle.NegResolver$(com.twitter.finagle.NegResolver$@2954f6ab)

I 0922 09:42:23.683 UTC THREAD1: Resolver[nil] = com.twitter.finagle.NilResolver$(com.twitter.finagle.NilResolver$@58fbd02e)

I 0922 09:42:23.683 UTC THREAD1: Resolver[fail] = com.twitter.finagle.FailResolver$(com.twitter.finagle.FailResolver$@163042ea)

I 0922 09:42:23.683 UTC THREAD1: Resolver[flag] = com.twitter.server.FlagResolver(com.twitter.server.FlagResolver@11d045b4)

I 0922 09:42:23.683 UTC THREAD1: Resolver[zk] = com.twitter.finagle.zookeeper.ZkResolver(com.twitter.finagle.zookeeper.ZkResolver@1fbf088b)

I 0922 09:42:23.683 UTC THREAD1: Resolver[zk2] = com.twitter.finagle.serverset2.Zk2Resolver(com.twitter.finagle.serverset2.Zk2Resolver@1943c1f2)

I 0922 09:42:24.257 UTC THREAD1: serving http admin on /0.0.0.0:9990

I 0922 09:42:24.271 UTC THREAD1: serving http-outgoing on /0.0.0.0:4140

I 0922 09:42:24.332 UTC THREAD1: serving http-incoming on /0.0.0.0:4141

I 0922 09:42:24.371 UTC THREAD1: serving h2-outgoing on /0.0.0.0:4240

I 0922 09:42:24.387 UTC THREAD1: serving h2-incoming on /0.0.0.0:4241

I 0922 09:42:24.403 UTC THREAD1: serving grpc-outgoing on /0.0.0.0:4340

I 0922 09:42:24.415 UTC THREAD1: serving gprc-incoming on /0.0.0.0:4341

I 0922 09:42:24.458 UTC THREAD1: serving http-ingress on /0.0.0.0:80

I 0922 09:42:24.491 UTC THREAD1: serving h2-ingress on /0.0.0.0:8080

I 0922 09:42:24.502 UTC THREAD1: initialized

I 0922 09:42:32.141 UTC THREAD35 TraceId:33dd5593c699a66d: FailureAccrualFactory marking connection to "%/io.l5d.k8s.localnode/10.244.0.225/#/io.l5d.k8s/default/http/l5d-test-server" as dead. Remote Address: Inet(/10.244.0.205:80,Map(nodeName -> ubuntu-1804-bionic-64-minimal))

I 0922 09:42:32.154 UTC THREAD38 TraceId:33dd5593c699a66d: FailureAccrualFactory marking connection to "%/io.l5d.k8s.localnode/10.244.0.225/#/io.l5d.k8s/default/http/l5d-test-server" as dead. Remote Address: Inet(/10.244.0.219:80,Map(nodeName -> ubuntu-1804-bionic-64-minimal))

I 0922 09:42:42.252 UTC THREAD32 TraceId:d82c90b75286d825: FailureAccrualFactory marking connection to "%/io.l5d.k8s.localnode/10.244.0.225/#/io.l5d.k8s/default/http/l5d-test-server" as dead. Remote Address: Inet(/10.244.0.219:80,Map(nodeName -> ubuntu-1804-bionic-64-minimal))

I 0922 09:42:42.257 UTC THREAD33 TraceId:d82c90b75286d825: FailureAccrualFactory marking connection to "%/io.l5d.k8s.localnode/10.244.0.225/#/io.l5d.k8s/default/http/l5d-test-server" as dead. Remote Address: Inet(/10.244.0.205:80,Map(nodeName -> ubuntu-1804-bionic-64-minimal))

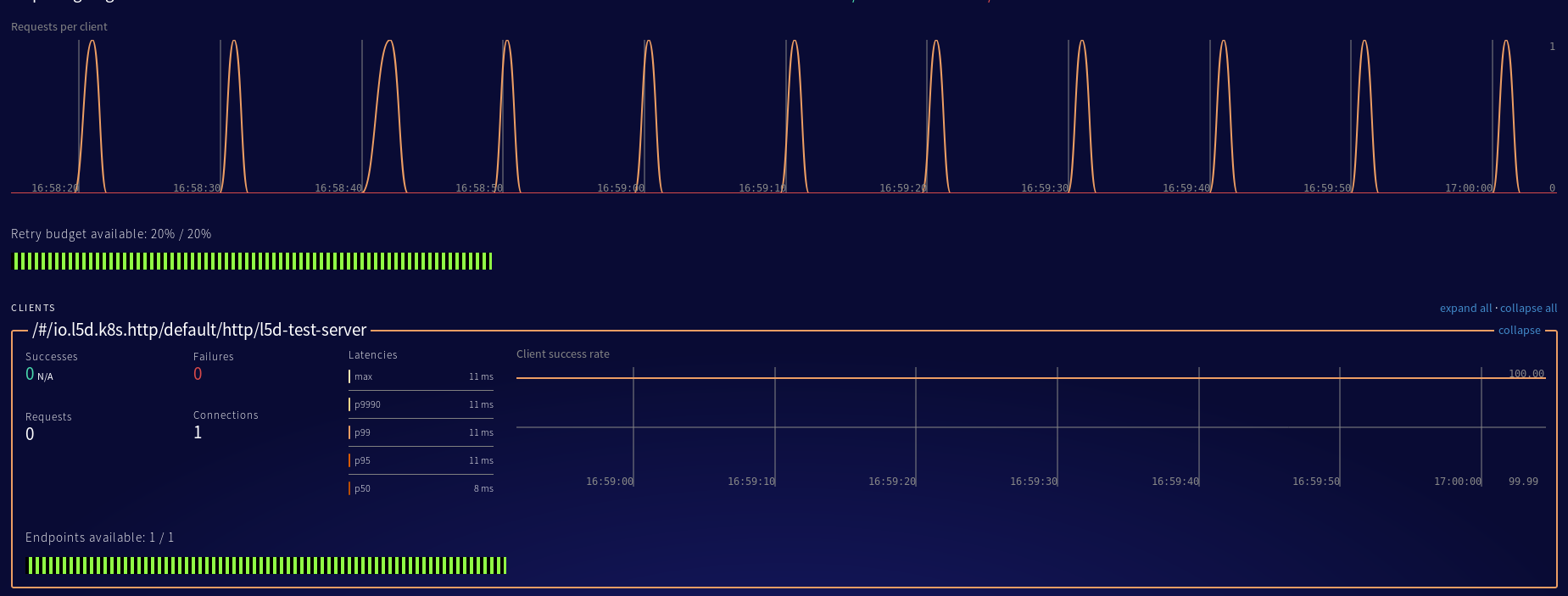

I’m a little worried about the marking <x> as dead messages but at least the permission errors aren’t happening! Let’s check back in at the dashboard – here’s what I saw:

OK, things have changed a little bit (I zoomed out a little to show it)! I can see a new “Requests Per Client” section that’s accurate (1 request every ~5 seconds), as well as seeing the l5d-test-server represented on the listing! Before all I saw was the server itself I believe (the cyan/blue-green line). Unfortunately, the requests are still failing. I’m not entirely sure why, but let’s jump back in and see if things have changed when we curl from the client itself:

$ k exec -it l5d-test-client /bin/sh

/ # curl l5d-test-server.default.svc.cluster.local -v

* Rebuilt URL to: l5d-test-server.default.svc.cluster.local/

* Trying 10.97.153.232...

* Connected to l5d.linkerd.svc.cluster.local (10.97.153.232) port 4140 (#0)

> GET http://l5d-test-server.default.svc.cluster.local/ HTTP/1.1

> Host: l5d-test-server.default.svc.cluster.local

> User-Agent: curl/7.49.1

> Accept: */*

>

< HTTP/1.1 502 Bad Gateway

< l5d-err: Unable+to+establish+connection+to+l5d-test-server.default.svc.cluster.local%2F10.99.105.121%3A80.%0A%0Aservice+name%3A+%2Fsvc%2Fl5d-test-server.default.svc.cluster.local%0Aclient+name%3A+%2F%24%2Fio.buoyant.rinet%2F80%2Fl5d-test-server.default.svc.cluster.local%0Aaddresses%3A+%5Bl5d-test-server.default.svc.cluster.local%2F10.99.105.121%3A80%5D%0Aselected+address%3A+l5d-test-server.default.svc.cluster.local%2F10.99.105.121%3A80%0Adtab+resolution%3A%0A++%2Fsvc%2Fl5d-test-server.default.svc.cluster.local%0A++%2Fph%2F80%2Fl5d-test-server.default.svc.cluster.local+%28%2Fsvc%3D%3E%2Fph%2F80%29%0A++%2F%24%2Fio.buoyant.rinet%2F80%2Fl5d-test-server.default.svc.cluster.local+%28%2Fph%3D%3E%2F%24%2Fio.buoyant.rinet%29%0A

< Content-Length: 609

< Content-Type: text/plain

<

Unable to establish connection to l5d-test-server.default.svc.cluster.local/10.99.105.121:80.

service name: /svc/l5d-test-server.default.svc.cluster.local

client name: /$/io.buoyant.rinet/80/l5d-test-server.default.svc.cluster.local

addresses: [l5d-test-server.default.svc.cluster.local/10.99.105.121:80]

selected address: l5d-test-server.default.svc.cluster.local/10.99.105.121:80

dtab resolution:

/svc/l5d-test-server.default.svc.cluster.local

/ph/80/l5d-test-server.default.svc.cluster.local (/svc=>/ph/80)

/$/io.buoyant.rinet/80/l5d-test-server.default.svc.cluster.local (/ph=>/$/io.buoyant.rinet)

* Connection #0 to host l5d.linkerd.svc.cluster.local left intact
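The dtab resolution in that error reads as a chain of prefix rewrites. As a rough mental model only, here is a toy Python version that applies the two rewrites shown in the error. Real dtabs also have precedence rules, alternates, wildcards and namers, none of which this sketch models, and the `resolve` function is hypothetical, not linkerd's API.

```python
def resolve(path, dtab, max_steps=20):
    """Rewrite path by prefix until no rule matches; return every step."""
    steps = [path]
    for _ in range(max_steps):
        for prefix, dest in dtab:
            if path == prefix or path.startswith(prefix + "/"):
                path = dest + path[len(prefix):]
                steps.append(path)
                break
        else:
            # No rule matched, so the name is fully rewritten.
            return steps
    raise RuntimeError("dtab did not converge")


# The two rewrites reported in the error above.
dtab = [
    ("/svc", "/ph/80"),
    ("/ph", "/$/io.buoyant.rinet"),
]
steps = resolve("/svc/l5d-test-server.default.svc.cluster.local", dtab)
for step in steps:
    print(step)
```

The final name lands on io.buoyant.rinet with port 80 and the DNS name, which matches the 10.99.105.121:80 address in the error: for the fully qualified name, linkerd ended up at a plain DNS lookup on port 80 rather than at anything Kubernetes-aware.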

OK, different error now! It looks like it was unable to connect to the server, but I’ve got a way different set of errors, along with much more information about what’s happening! Now I can go snooping around and see if this dtab resolution is what it should be and figure out where the problem actually is inside linkerd. Looking at the linkerd dashboard I can already see that the resolution URL should PROBABLY look something like /#/io.l5d.k8s.http/default/http/l5d-test-server (which expands to /%/io.l5d.k8s.daemonset/linkerd/http-incoming/l5d/#/io.l5d.k8s.http/default/http/l5d-test-server), not /svc/l5d-test-server.default.svc.cluster.local or any of the other resolutions that got printed out. I tried again with a less explicit DNS name, only using l5d-test-server this time:

/ # curl l5d-test-server -v

* Rebuilt URL to: l5d-test-server/

* Trying 10.97.153.232...

* Connected to l5d.linkerd.svc.cluster.local (10.97.153.232) port 4140 (#0)

> GET http://l5d-test-server/ HTTP/1.1

> Host: l5d-test-server

> User-Agent: curl/7.49.1

> Accept: */*

>

< HTTP/1.1 502 Bad Gateway

< Via: 1.1 linkerd

< l5d-success-class: 0.0

< l5d-err: Unable+to+establish+connection+to+10.244.0.205%3A80.%0A%0Aservice+name%3A+%2Fsvc%2Fl5d-test-server%0Aclient+name%3A+%2F%25%2Fio.l5d.k8s.localnode%2F10.244.0.225%2F%23%2Fio.l5d.k8s%2Fdefault%2Fhttp%2Fl5d-test-server%0Aaddresses%3A+%5B10.244.0.205%3A80%2C+10.244.0.219%3A80%5D%0Aselected+address%3A+10.244.0.205%3A80%0Adtab+resolution%3A%0A++%2Fsvc%2Fl5d-test-server%0A++%2Fhost%2Fl5d-test-server+%28%2Fsvc%3D%3E%2F%24%2Fio.buoyant.http.domainToPathPfx%2Fhost%29%0A++%2FportNsSvc%2Fhttp%2Fdefault%2Fl5d-test-server+%28%2Fhost%3D%3E%2FportNsSvc%2Fhttp%2Fdefault%29%0A++%2Fk8s%2Fdefault%2Fhttp%2Fl5d-test-server+%28%2FportNsSvc%3D%3E%2F%23%2FportNsSvcToK8s%29%0A++%2F%23%2Fio.l5d.k8s%2Fdefault%2Fhttp%2Fl5d-test-server+%28%2Fk8s%3D%3E%2F%23%2Fio.l5d.k8s%29%0A++%2F%25%2Fio.l5d.k8s.localnode%2F10.244.0.225%2F%23%2Fio.l5d.k8s%2Fdefault%2Fhttp%2Fl5d-test-server+%28SubnetLocalTransformer%29%0A

< Content-Length: 687

< Content-Type: text/plain

<

Unable to establish connection to 10.244.0.205:80.

service name: /svc/l5d-test-server

client name: /%/io.l5d.k8s.localnode/10.244.0.225/#/io.l5d.k8s/default/http/l5d-test-server

addresses: [10.244.0.205:80, 10.244.0.219:80]

selected address: 10.244.0.205:80

dtab resolution:

/svc/l5d-test-server

/host/l5d-test-server (/svc=>/$/io.buoyant.http.domainToPathPfx/host)

/portNsSvc/http/default/l5d-test-server (/host=>/portNsSvc/http/default)

/k8s/default/http/l5d-test-server (/portNsSvc=>/#/portNsSvcToK8s)

/#/io.l5d.k8s/default/http/l5d-test-server (/k8s=>/#/io.l5d.k8s)

/%/io.l5d.k8s.localnode/10.244.0.225/#/io.l5d.k8s/default/http/l5d-test-server (SubnetLocalTransformer)

* Connection #0 to host l5d.linkerd.svc.cluster.local left intact
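This time the chain ends at a Kubernetes name. Going by the /portNsSvc/http/default/... step in the output, the segments after /#/io.l5d.k8s appear to be namespace, port name and service, where the port name is the name given to the port on the Service (http in the manifest earlier). A small Python sketch that splits such a name, with `parse_k8s_name` being a made-up helper and the segment order being my reading of the output rather than something I've checked against the namer's source:

```python
def parse_k8s_name(path):
    """Split /#/io.l5d.k8s/<namespace>/<port-name>/<service> into parts."""
    parts = path.strip("/").split("/")
    if parts[:2] != ["#", "io.l5d.k8s"] or len(parts) != 5:
        raise ValueError(f"not an io.l5d.k8s name: {path}")
    namespace, port_name, service = parts[2:]
    return {"namespace": namespace, "port_name": port_name, "service": service}


name = parse_k8s_name("/#/io.l5d.k8s/default/http/l5d-test-server")
print(name)
```

So the short hostname gets a default namespace and the http port name filled in by the dtab, and the addresses in the error (10.244.0.205:80 and 10.244.0.219:80) are what linkerd found behind that named port.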

One of the resolutions considered is very close to what I think it should be resolving to (based on what was on the dashboard), but actually came up as negative – /#/io.l5d.k8s.http/default/http/l5d-test-server (neg), it looks like something about the test-server must have been incorrectly configured. I looked back at the test server config and I found the issue – I’d forgotten to set up the port configuration for the server container itself, and to check what port the go-echo image actually used – it’s port 8000! After re-deploying the test server and also curling to the right port, I got a successful request through!

/ # curl l5d-test-server -v

* Rebuilt URL to: l5d-test-server/

* Trying 10.97.153.232...

* Connected to l5d.linkerd.svc.cluster.local (10.97.153.232) port 4140 (#0)

> GET http://l5d-test-server/ HTTP/1.1

> Host: l5d-test-server

> User-Agent: curl/7.49.1

> Accept: */*

>

< HTTP/1.1 200 OK

< Via: 1.1 linkerd, 1.1 linkerd

< l5d-success-class: 1.0

< Date: Mon, 24 Sep 2018 07:56:07 GMT

< Content-Length: 393

< Content-Type: application/json

<

* Connection #0 to host l5d.linkerd.svc.cluster.local left intact

{ "url": "/","method": "GET","headers":{ "Accept": ["*/*"],"Content-Length": ["0"],"L5d-Ctx-Trace": ["BgYrXQ7LCXDgZTk9m6NRFw4domXGW/f+AAAAAAAAAAA="],"L5d-Dst-Client": ["/%/io.l5d.k8s.localnode/10.244.0.225/#/io.l5d.k8s/default/http/l5d-test-server"],"L5d-Dst-Service": ["/svc/l5d-test-server"],"L5d-Reqid": ["0e1da265c65bf7fe"],"User-Agent": ["curl/7.49.1"],"Via": ["1.1 linkerd, 1.1 linkerd"]},"body": ""}

/ #
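Since go-echo echoes the request headers back as JSON, the response also shows what linkerd added on the way through. A quick Python sketch that picks those out, using a trimmed copy of the body above (only some headers kept):

```python
import json

# Trimmed copy of the echo response above; only some headers are kept.
body = (
    '{"url": "/", "method": "GET", "headers": {'
    '"L5d-Dst-Client": ["/%/io.l5d.k8s.localnode/10.244.0.225/#/io.l5d.k8s/default/http/l5d-test-server"], '
    '"L5d-Dst-Service": ["/svc/l5d-test-server"], '
    '"L5d-Reqid": ["0e1da265c65bf7fe"], '
    '"User-Agent": ["curl/7.49.1"], '
    '"Via": ["1.1 linkerd, 1.1 linkerd"]}, "body": ""}'
)
echo = json.loads(body)

# Headers that linkerd attached before the request reached the server.
l5d_headers = {
    key: values[0]
    for key, values in echo["headers"].items()
    if key.lower().startswith("l5d-")
}
# Each proxy hop appends itself to Via.
hops = [hop.strip() for hop in echo["headers"]["Via"][0].split(",")]

for key, value in l5d_headers.items():
    print(f"{key}: {value}")
print(f"{len(hops)} proxy hops")
```

The two Via entries suggest the request passed through linkerd twice, which I read as the outgoing router followed by the incoming one.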

Awesome! So Linkerd is successfully building the right dtab, and proxying requests to services! Now I can see that the requests stay pegged to 100% successful in the web admin panel:

Now that linkerd is up and running, I wanted to take some time to look into how it integrates with other monitoring tools I have installed on my cluster, in particular Prometheus and Jaeger. I really like the UI that linkerd provides, but of course, I want my other tools to keep working!

Great news is that linkerd already supports publishing Prometheus metrics, so Prometheus can scrape it for information – all you need to do is tell Prometheus to scrape the appropriate /metrics endpoint. Since I would never want to have to input this again, I’ll be editing my prometheus configmap to include the config:

---
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: monitoring
data:
  prometheus.yml: |-
    global:
      scrape_interval: 15s
      external_labels:
        monitor: prm-monitor
    scrape_configs:
      - job_name: prometheus
        scrape_interval: 5s
        static_configs:
          - targets: ["localhost:9090"]
      - job_name: node_exporter
        scrape_interval: 5s
        static_configs:
          - targets: ["node-exporter:9100"]
      - job_name: linkerd_exporter
        scrape_interval: 5s
        metrics_path: /admin/metrics/prometheus
        static_configs:
          - targets: ["l5d.linkerd.svc.cluster.local:9990"]
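For a sanity check on what that job will actually hit, here is a small Python sketch that builds the scrape URL the way Prometheus does for a static target: scheme (http unless overridden), then the target, then metrics_path (/metrics unless overridden). The `scrape_urls` helper is hypothetical, and the job is written as a plain dict so the sketch doesn't need a YAML library.

```python
def scrape_urls(job):
    """Build the URLs Prometheus would scrape for one static job."""
    scheme = job.get("scheme", "http")          # Prometheus defaults to http
    path = job.get("metrics_path", "/metrics")  # and to /metrics
    return [
        f"{scheme}://{target}{path}"
        for static in job.get("static_configs", [])
        for target in static["targets"]
    ]


# The linkerd job from the ConfigMap above, as a dict.
linkerd_job = {
    "job_name": "linkerd_exporter",
    "scrape_interval": "5s",
    "metrics_path": "/admin/metrics/prometheus",
    "static_configs": [{"targets": ["l5d.linkerd.svc.cluster.local:9990"]}],
}
urls = scrape_urls(linkerd_job)
print(urls)
```

Curling the resulting URL from inside the cluster is a quick way to confirm that port 9990 is reachable through the l5d Service before blaming Prometheus.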

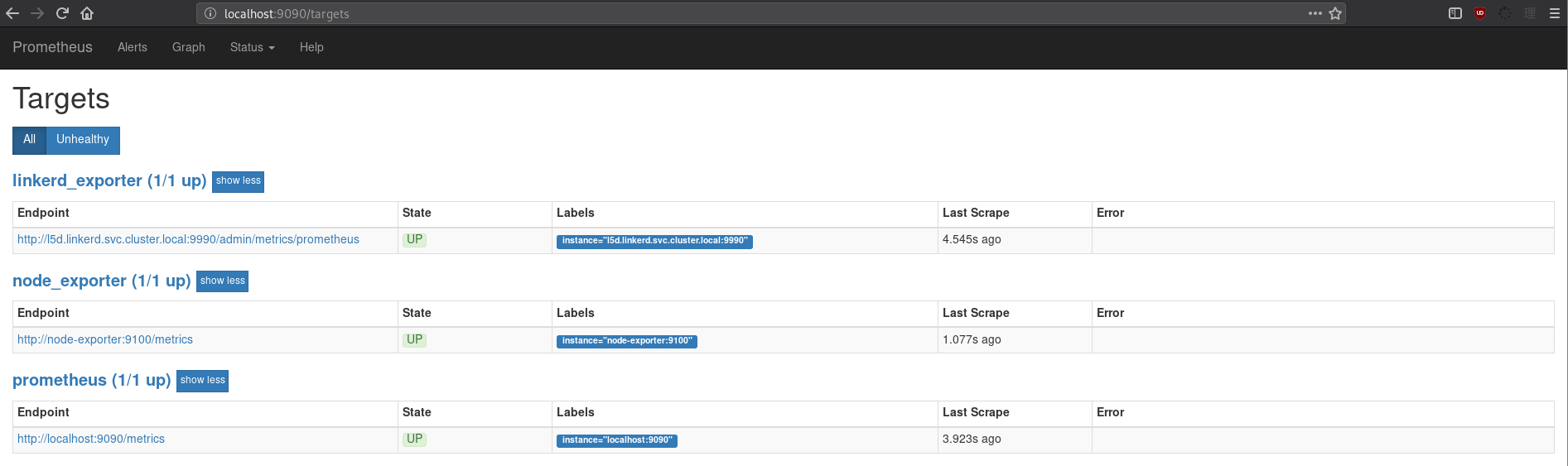

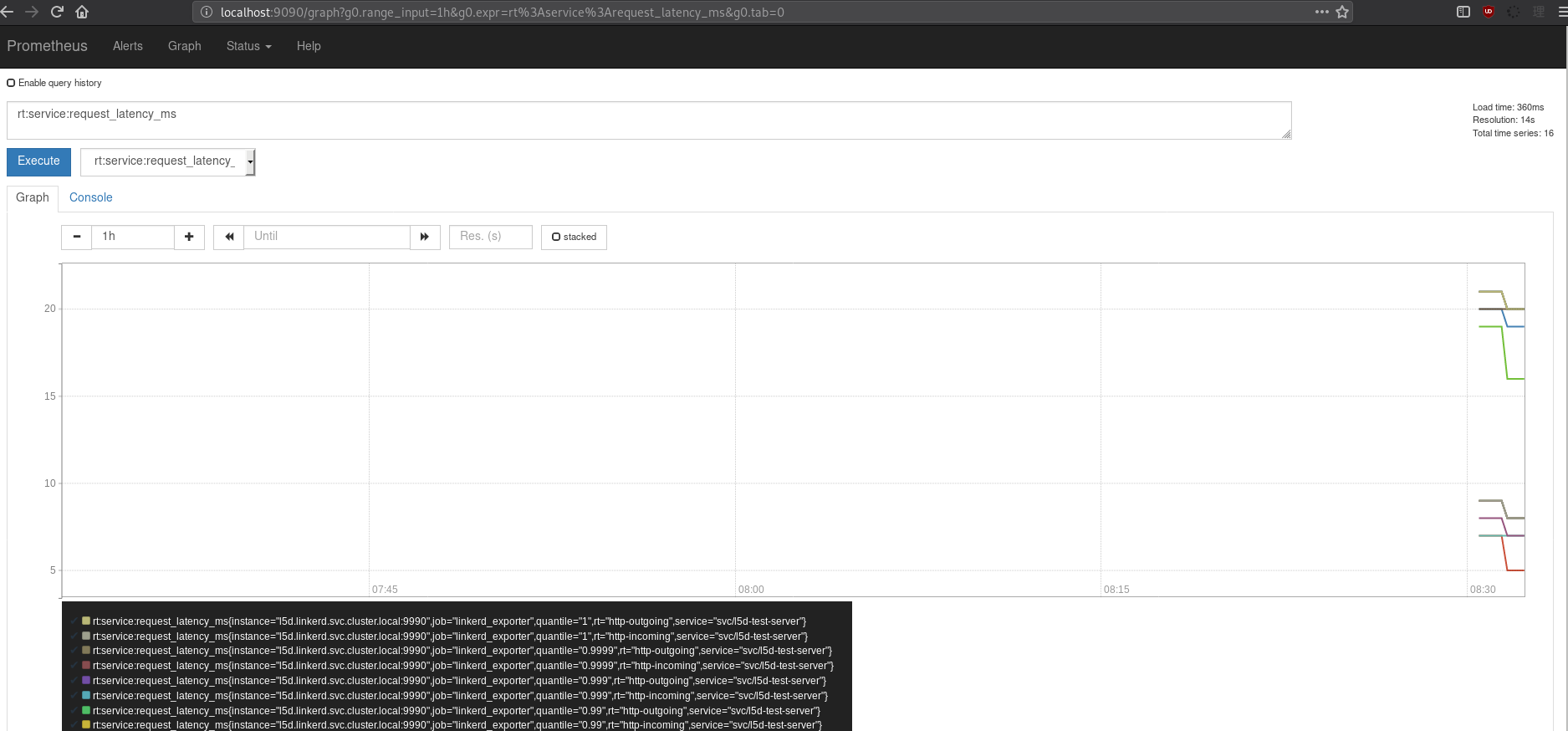

After updating the Prometheus configuration and restarting the prometheus pod so the configuration could be re-slurped (I just deleted the old pod), I needed to also update the l5d service in the linkerd namespace to allow traffic to it on 9990 (so that prometheus can hit its metrics endpoint). A quick kubectl port-forward <prometheus pod> 9090:9090 -n monitoring and I’m off to look at some of the data:

Linkerd has integration to forward traces to Zipkin which works for me because Jaeger supports Zipkin’s transfer format! This means I can just uncomment the configuration for zipkin exporting and point it at my cluster-wide Jaeger instance and I should be able to see things there. You can find more information on how to configure linkerd for this use case in the linkerd documentation. Let’s make some edits to the ConfigMap for linkerd:

- kind: io.l5d.zipkin # Uncomment to enable exporting of zipkin traces
  host: jaeger.monitoring.svc.cluster.local # Zipkin collector address
  port: 9411 # my jaeger instance uses 9411 for zipkin
  sampleRate: 1.0 # Set to a lower sample rate depending on your traffic volume
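On sampleRate: 1.0 means every request is traced, which is fine for a test client but gets expensive with real traffic. A toy Python illustration of what a sample rate means in practice; this is not how linkerd implements sampling, and `should_sample` is a made-up function:

```python
import random


def should_sample(sample_rate, rng=random):
    """Keep a trace with probability sample_rate (0.0 to 1.0)."""
    # rng.random() is in [0.0, 1.0), so 1.0 always samples and 0.0 never does.
    return rng.random() < sample_rate


rng = random.Random(0)  # seeded only so the illustration is repeatable
kept_all = sum(should_sample(1.0, rng) for _ in range(1000))
kept_some = sum(should_sample(0.1, rng) for _ in range(1000))
print(kept_all, kept_some)
```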

Unfortunately I didn’t actually see the traces show up properly in Jaeger so there might be some discrepancies (imperfect Zipkin API support on the Jaeger side?), and the free-tracing use case might not work as well as I thought. I am sending the important metrics to Jaeger myself (and that’s still working) from the actual app so I’m just going to overlook this bit for now and not look into it too deeply.

It was pretty straightforward to set up linkerd v1 on my super small cluster. Of course, things get more interesting with more nodes and more interactions and more apps, but I don’t think the growth in amount of work is linear – once you’ve got a decent linkerd config you can just deploy more linkerds and they should do the right thing because so many decisions get delegated to Kubernetes. It was pretty easy to work through all of this, and having the additional pane of glass is nice.

There’s a ton more that linkerd can be made to do (traffic shifting @ deployment, using linkerd for ingress, dtab magic), but I feel like I’ve gotten a good introduction and some experience deploying linkerd v1, and I hope you’ve enjoyed the inside look. I’m going to very likely try out Linkerd v2 as well in the near future and will write about it when I do!