tl;dr - I installed Loki and Fluent Bit on my Kubernetes cluster for some light log retention. In the past I’ve used EFKK, but this setup is lighter and easier for low-maintenance projects.

Assuming you have a Grafana instance handy, Fluent Bit + Loki is pretty great for low-effort log aggregation! It’s a relatively “new” stack compared to options like Graylog.

Another great option in this space is Graylog! I’m not picking it for my cluster since it’s a little heavyweight (and I’m not exactly keen to develop more MongoDB admin experience). MongoDB is a solid piece of engineering, and there are smart people working on it, but I’m basically a Postgres zealot at this point, so why even try to hide it.

Is there anything wrong with ELK/EFKK? Nope! If you’ve got the ELK/EFKK stack set up already then there’s no need to switch! For me, Fluent Bit + Loki is a more practical fit for how I plan to use logging for most of the applications I run, which are in steady state. That, and I save a bunch of compute, which is nice.

Anyway let’s get on with it.

The first thing we need to set up is Loki! Loki is the member of the Grafana suite of tools that is centered around log management. It’s released F/OSS under the AGPL and seems to be a pretty tight piece of software.

As usual I’ve broken down the setup more than most others care to (no all-in-one kubectl apply for me!) – so I’ll go bit by bit and leave some notes on what’s happening. Loki is quite simple so it’s quite easy.

loki.configmap.yaml

First we’ll need a ConfigMap for Loki:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: loki
      namespace: logging
    data:
      local-config.yaml: |
        auth_enabled: false

        server:
          http_listen_port: 3100
          grpc_listen_port: 9096

        common:
          path_prefix: /data/loki
          storage:
            filesystem:
              chunks_directory: /data/loki/chunks
              rules_directory: /data/loki/rules
          replication_factor: 1
          ring:
            instance_addr: 127.0.0.1
            kvstore:
              store: inmemory

        schema_config:
          configs:
            - from: 2020-10-24
              store: boltdb-shipper
              object_store: filesystem
              schema: v11
              index:
                prefix: index_
                period: 24h

        ruler:
          alertmanager_url: http://grafana.monitoring.svc.cluster.local:9093

        # By default, Loki will send anonymous, but uniquely-identifiable usage and configuration
        # analytics to Grafana Labs. These statistics are sent to https://stats.grafana.org/
        #
        # Statistics help us better understand how Loki is used, and they show us performance
        # levels for most users. This helps us prioritize features and documentation.
        # For more information on what's sent, look at
        # https://github.com/grafana/loki/blob/main/pkg/usagestats/stats.go
        # Refer to the buildReport method to see what goes into a report.
        #
        # If you would like to disable reporting, uncomment the following lines:
        analytics:
          reporting_enabled: false

This is mostly in line with the defaults from the Loki configuration docs.
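
One thing that isn’t obvious from the config is what the index prefix and period do together. As far as I understand it, Loki buckets the index into tables named prefix + (Unix time divided by the period), so with a 24h period you get one table per day. Here’s a minimal Python sketch of that arithmetic. The function name is made up for illustration, and the naming rule is my reading of Loki’s behaviour, not something lifted from its docs:

```python
from datetime import datetime, timezone

# Values copied from schema_config.configs[0].index in the ConfigMap above.
INDEX_PREFIX = "index_"
INDEX_PERIOD_SECONDS = 24 * 60 * 60  # period: 24h


def index_table_name(ts: datetime) -> str:
    """Hypothetical helper: name of the index table covering timestamp `ts`.

    Assumes tables are numbered by how many whole periods have elapsed
    since the Unix epoch.
    """
    period_number = int(ts.timestamp()) // INDEX_PERIOD_SECONDS
    return f"{INDEX_PREFIX}{period_number}"


# Everything written on the same UTC day lands in the same table.
print(index_table_name(datetime(2022, 4, 11, tzinfo=timezone.utc)))
print(index_table_name(datetime(2022, 4, 11, 23, 59, 59, tzinfo=timezone.utc)))
print(index_table_name(datetime(2022, 4, 12, tzinfo=timezone.utc)))
```

If that assumption holds, it’s a handy way to guess which directory under /data/loki to look at when poking around a particular day.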

loki.pvc.yaml

We’ll also want a place for Loki to store data, so let’s set up a PersistentVolumeClaim:

    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: loki
    spec:
      storageClassName: localpv-zfs-postgres
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 16Gi

Then we’ll need the Deployment itself.

loki.deployment.yaml

We don’t need to get fancy (e.g. a StatefulSet) with the actual Loki instance for now; a single-replica Deployment is more than good enough!

    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: loki
    spec:
      revisionHistoryLimit: 2
      replicas: 1
      strategy:
        type: Recreate
      selector:
        matchLabels:
          app: loki
          component: loki
          tier: logging
      template:
        metadata:
          labels:
            app: loki
            component: loki
            tier: logging
        spec:
          automountServiceAccountToken: false
          # runtimeClassName: kata
          securityContext:
            runAsUser: 10001
            fsGroup: 10001
            runAsGroup: 10001
            runAsNonRoot: true
          containers:
            - name: loki
              # 2.5.0 as of 2022/04/11
              image: grafana/loki@sha256:c4f9965d93379a7a69b4d21b07e8544d5005375abeff3727ecd266e527bab9d3
              imagePullPolicy: IfNotPresent
              ports:
                - containerPort: 3100
                - containerPort: 9096
              resources:
                requests:
                  cpu: 100m
                  memory: 128Mi
                limits:
                  cpu: 500m
                  memory: 2Gi
              # Probes
              livenessProbe:
                failureThreshold: 3
                tcpSocket:
                  port: 3100
                initialDelaySeconds: 5
                periodSeconds: 30
                successThreshold: 1
                timeoutSeconds: 5
              readinessProbe:
                tcpSocket:
                  port: 3100
                initialDelaySeconds: 5
                periodSeconds: 10
                successThreshold: 1
                timeoutSeconds: 5
              volumeMounts:
                - name: config
                  mountPath: /etc/loki/local-config.yaml
                  subPath: local-config.yaml
                - name: data
                  mountPath: /data
          volumes:
            - name: config
              configMap:
                name: loki
            - name: data
              persistentVolumeClaim:
                claimName: loki

NOTE: here I’m using Recreate because the PersistentVolumeClaim is not ReadWriteMany. Since the PVC is ReadWriteOnce (remember, that restriction is per node), it’s quite possible that a new RollingUpdate instance would land on another node and be unable to attach the storage.

Loki does store data locally (the way I’m using it) but if you wanted to get more scale out of your instances (and better stability through rollouts) you would probably set it up to use ephemeral storage + S3.

loki.svc.yaml

And to allow other workloads in the cluster to reach Loki, we’ll need a Service:

    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: loki
    spec:
      selector:
        app: loki
        component: loki
        tier: logging
      ports:
        - name: http
          port: 3100
        - name: grpc
          port: 9096

NOTE: this Loki instance will not be accessible from outside the cluster, so I don’t have an Ingress or an IngressRoute (Traefik-specific CRD) configured, just this ClusterIP (by default) Service.
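
To sanity check that the Service is reachable from inside the cluster, you can push a line by hand to Loki’s HTTP push endpoint (/loki/api/v1/push). Here’s a rough Python sketch, assuming the Service DNS name loki.logging.svc.cluster.local from the manifests above. The job=manual-test label and the function names are made up for illustration, and the push function only works from somewhere that can resolve the Service:

```python
import json
import time
import urllib.request

# Assumption: Service "loki" in namespace "logging", HTTP port 3100.
LOKI_URL = "http://loki.logging.svc.cluster.local:3100"


def build_push_payload(line, labels, ts_ns=None):
    """Build the JSON body for one log line in one stream.

    Loki expects each value as [<unix epoch in nanoseconds, as a string>, <line>].
    """
    if ts_ns is None:
        ts_ns = time.time_ns()
    return {"streams": [{"stream": dict(labels), "values": [[str(ts_ns), line]]}]}


def push(line, labels, base_url=LOKI_URL):
    """POST a single line to Loki and return the HTTP status code."""
    body = json.dumps(build_push_payload(line, labels)).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/loki/api/v1/push",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=5) as resp:
        return resp.status


# Only builds and prints the payload; call push(...) from inside the cluster.
payload = build_push_payload("hello from a test pod", {"job": "manual-test"}, ts_ns=1649635200000000000)
print(json.dumps(payload, indent=2))
```

Since auth_enabled is false in the Loki config, no tenant header is needed for this to work.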

Now that we have Loki set up and running, we’re going to want to set up Fluent Bit to sit on every node (hint: a DaemonSet, obviously), and look at logs from running workloads (and anything else that wants to write to it).

fluent-bit-config.configmap.yaml

Dunno why I put -config in the name, but here’s the ConfigMap for Fluent Bit:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: fluent-bit-config
      namespace: logging
      labels:
        k8s-app: fluent-bit
    data:
      # Configuration files: server, input, filters and output
      # ======================================================
      fluent-bit.conf: |
        [SERVICE]
            Flush         1
            Log_Level     info
            Daemon        off
            Parsers_File  parsers.conf
            HTTP_Server   On
            HTTP_Listen   0.0.0.0
            HTTP_Port     2020

        @INCLUDE input-kubernetes.conf
        @INCLUDE filter-kubernetes.conf
        @INCLUDE output-loki.conf

      input-kubernetes.conf: |
        [INPUT]
            Name              tail
            Tag               kube.*
            Path              /var/log/containers/*.log
            Parser            cri
            DB                /var/log/flb_kube.db
            Mem_Buf_Limit     100MB
            Skip_Long_Lines   On
            Refresh_Interval  10

      filter-kubernetes.conf: |
        [FILTER]
            Name                kubernetes
            Match               kube.*
            Kube_URL            https://kubernetes.default.svc:443
            Kube_CA_File        /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
            Kube_Token_File     /var/run/secrets/kubernetes.io/serviceaccount/token
            Kube_Tag_Prefix     kube.var.log.containers.
            Merge_Log           On
            Merge_Log_Key       log_processed
            K8S-Logging.Parser  On
            K8S-Logging.Exclude Off

      output-loki.conf: |
        [OUTPUT]
            name                   loki
            match                  *
            labels                 job=fluentbit
            Host                   ${FLUENT_LOKI_HOST}
            Port                   ${FLUENT_LOKI_PORT}
            auto_kubernetes_labels on

      parsers.conf: |
        [PARSER]
            Name        apache
            Format      regex
            Regex       ^(?<host>[^ ]*) [^ ]* (?<user>[^ ]*) \[(?<time>[^\]]*)\] "(?<method>\S+)(?: +(?<path>[^\"]*?)(?: +\S*)?)?" (?<code>[^ ]*) (?<size>[^ ]*)(?: "(?<referer>[^\"]*)" "(?<agent>[^\"]*)")?$
            Time_Key    time
            Time_Format %d/%b/%Y:%H:%M:%S %z

        [PARSER]
            Name        apache2
            Format      regex
            Regex       ^(?<host>[^ ]*) [^ ]* (?<user>[^ ]*) \[(?<time>[^\]]*)\] "(?<method>\S+)(?: +(?<path>[^ ]*) +\S*)?" (?<code>[^ ]*) (?<size>[^ ]*)(?: "(?<referer>[^\"]*)" "(?<agent>[^\"]*)")?$
            Time_Key    time
            Time_Format %d/%b/%Y:%H:%M:%S %z

        [PARSER]
            Name        apache_error
            Format      regex
            Regex       ^\[[^ ]* (?<time>[^\]]*)\] \[(?<level>[^\]]*)\](?: \[pid (?<pid>[^\]]*)\])?( \[client (?<client>[^\]]*)\])? (?<message>.*)$

        [PARSER]
            Name        nginx
            Format      regex
            Regex       ^(?<remote>[^ ]*) (?<host>[^ ]*) (?<user>[^ ]*) \[(?<time>[^\]]*)\] "(?<method>\S+)(?: +(?<path>[^\"]*?)(?: +\S*)?)?" (?<code>[^ ]*) (?<size>[^ ]*)(?: "(?<referer>[^\"]*)" "(?<agent>[^\"]*)")?$
            Time_Key    time
            Time_Format %d/%b/%Y:%H:%M:%S %z

        [PARSER]
            Name        json
            Format      json
            Time_Key    time
            Time_Format %d/%b/%Y:%H:%M:%S %z

        [PARSER]
            Name        docker
            Format      json
            Time_Key    time
            Time_Format %Y-%m-%dT%H:%M:%S.%L
            Time_Keep   On

        [PARSER]
            # http://rubular.com/r/tjUt3Awgg4
            Name        cri
            Format      regex
            Regex       ^(?<time>[^ ]+) (?<stream>stdout|stderr) (?<logtag>[^ ]*) (?<message>.*)$
            Time_Key    time
            Time_Format %Y-%m-%dT%H:%M:%S.%L%z

        [PARSER]
            Name        syslog
            Format      regex
            Regex       ^\<(?<pri>[0-9]+)\>(?<time>[^ ]* {1,2}[^ ]* [^ ]*) (?<host>[^ ]*) (?<ident>[a-zA-Z0-9_\/\.\-]*)(?:\[(?<pid>[0-9]+)\])?(?:[^\:]*\:)? *(?<message>.*)$
            Time_Key    time
            Time_Format %b %d %H:%M:%S

This is also pretty darn close to the standard boilerplate instructions for Fluent Bit when configuring with Kubernetes! Not much to see here.
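
The one parser that actually matters for the tail input above is cri, since that’s the format the container runtime writes under /var/log/containers. To make it less abstract, here’s a small Python sketch that applies the same regex to a log line. The only change is Python’s (?P<name>...) named-group syntax, and the sample line is made up:

```python
import re

# Same pattern as the "cri" [PARSER] above, with Python-style named groups.
CRI_LINE = re.compile(
    r"^(?P<time>[^ ]+) (?P<stream>stdout|stderr) (?P<logtag>[^ ]*) (?P<message>.*)$"
)


def parse_cri(line):
    """Split a CRI log line into its fields, or return None if it doesn't match."""
    match = CRI_LINE.match(line)
    return match.groupdict() if match else None


# Made-up sample: timestamp, stream, tag (F = full line, P = partial), message.
sample = '2022-04-11T10:15:30.123456789Z stdout F level=info msg="server started"'
for field, value in parse_cri(sample).items():
    print(f"{field:8} {value}")
```

Everything after the third space ends up in message, which is what the kubernetes filter later tries to merge when Merge_Log is on.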

fluent-bit.serviceaccount.yaml

The Fluent Bit DaemonSet is going to need to do a whole bunch of high-privilege stuff, so let’s set up a ServiceAccount for it:

    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: fluent-bit
      namespace: logging

fluent-bit.rbac.yaml

Here we can provide the access to the ServiceAccount:

    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: fluent-bit-read
    rules:
      - apiGroups: [""]
        resources:
          - namespaces
          - pods
        verbs: ["get", "list", "watch"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: fluent-bit-read
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: fluent-bit-read
    subjects:
      - kind: ServiceAccount
        name: fluent-bit
        namespace: logging

fluent-bit.ds.yaml

And finally, the big kahuna, the DaemonSet that’s going to run Fluent Bit across the cluster:

    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: fluent-bit
      namespace: logging
      labels:
        app: fluentbit
        component: fluentbit
        tier: logging
        k8s-app: fluent-bit-logging
        version: v1
        kubernetes.io/cluster-service: "true"
    spec:
      selector:
        matchLabels:
          k8s-app: fluent-bit-logging
          app: fluentbit
          component: fluentbit
          tier: logging
      template:
        metadata:
          labels:
            k8s-app: fluent-bit-logging
            app: fluentbit
            component: fluentbit
            tier: logging
            version: v1
            kubernetes.io/cluster-service: "true"
          annotations:
            prometheus.io/scrape: "true"
            prometheus.io/port: "2020"
            prometheus.io/path: /api/v1/metrics/prometheus
        spec:
          terminationGracePeriodSeconds: 10
          serviceAccountName: fluent-bit
          tolerations:
            - key: node-role.kubernetes.io/master
              operator: Exists
              effect: NoSchedule
            - operator: "Exists"
              effect: "NoExecute"
            - operator: "Exists"
              effect: "NoSchedule"
          containers:
            - name: fluent-bit
              # 1.9.2 as of 2022/04/11
              image: fluent/fluent-bit@sha256:90a2394abc14c9709146e7d9a81edcc165ff26b8144024eeb1f359662c648dbf
              imagePullPolicy: Always
              resources:
                requests:
                  cpu: 100m
                  memory: 128Mi
                limits:
                  cpu: 500m
                  memory: 512Mi
              ports:
                - containerPort: 2020
              env:
                # Loki
                - name: FLUENT_LOKI_HOST
                  value: "loki"
                - name: FLUENT_LOKI_PORT
                  value: "3100"
                - name: FLUENT_LOKI_AUTO_KUBERNETES_LABELS
                  value: "true"
              volumeMounts:
                - name: varlog
                  mountPath: /var/log
                - name: varlibdockercontainers
                  mountPath: /var/lib/docker/containers
                  readOnly: true
                - name: fluent-bit-config
                  mountPath: /fluent-bit/etc/
          volumes:
            - name: varlog
              hostPath:
                path: /var/log
            - name: varlibdockercontainers
              hostPath:
                path: /var/lib/docker/containers
            - name: fluent-bit-config
              configMap:
                name: fluent-bit-config

Again, no rocket science here; the Fluent Bit documentation on Kubernetes is pretty good.
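
If you’re wondering how the kubernetes filter knows which pod a line came from: the tail input tags each record with kube. plus the file path (slashes turned into dots), and once the Kube_Tag_Prefix is stripped what’s left is the log file name, which by convention looks like <pod>_<namespace>_<container>-<container id>.log. Here’s a Python sketch of that lookup step. The regex is a simplified stand-in I wrote for illustration, not Fluent Bit’s actual one, and the tag is made up:

```python
import re

# Matches Kube_Tag_Prefix in filter-kubernetes.conf.
KUBE_TAG_PREFIX = "kube.var.log.containers."

# Simplified: pod and namespace names cannot contain underscores, and the
# container id is assumed to be 64 hex characters.
LOG_FILE_NAME = re.compile(
    r"^(?P<pod_name>[^_]+)_(?P<namespace_name>[^_]+)_"
    r"(?P<container_name>.+)-(?P<container_id>[a-f0-9]{64})\.log$"
)


def parse_tag(tag):
    """Return pod/namespace/container info encoded in a record tag, or None."""
    if not tag.startswith(KUBE_TAG_PREFIX):
        return None
    match = LOG_FILE_NAME.match(tag[len(KUBE_TAG_PREFIX):])
    return match.groupdict() if match else None


# Made-up tag for a Loki pod in the logging namespace.
tag = "kube.var.log.containers.loki-5d9c7b6f4-abcde_logging_loki-" + "0" * 64 + ".log"
print(parse_tag(tag))
```

With the pod name and namespace in hand, the filter can ask the API server for labels and annotations, which is why the ClusterRole above needs read access to pods and namespaces.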

kustomization.yaml and Makefile

base/kustomization.yaml

    namespace: logging

    generatorOptions:
      disableNameSuffixHash: true

    resources:
      # Loki
      - loki.pvc.yaml
      - loki.configmap.yaml
      - loki.deployment.yaml
      - loki.svc.yaml
      # Fluent Bit
      - fluent-bit.serviceaccount.yaml
      - fluent-bit.rbac.yaml
      - fluent-bit-config.configmap.yaml
      - fluent-bit.ds.yaml

Makefile

The Makefile has a lot of my secret sauce/usual setup in it, so it’s optional (you can use whatever you normally use to execute kustomize, like FluxCD).

I’m including it here for those who are interested in the MakeInfra pattern, which I hawk from time to time!

    # A '#' inside a backslash-continued line comments out the rest of the list,
    # so each group of targets gets its own .PHONY declaration.
    .PHONY: all install uninstall \
    	generated-folder generate clean \
    	ns check-cluster-name secret secret-uninstall

    # Loki
    .PHONY: loki loki-configmap loki-pvc loki-deployment loki-svc \
    	loki-uninstall loki-svc-uninstall loki-deployment-uninstall loki-pvc-uninstall loki-configmap-uninstall

    # Fluent Bit
    .PHONY: fluentbit fluentbit-serviceaccount fluentbit-configmap fluentbit-rbac fluentbit-ds \
    	fluentbit-uninstall fluentbit-ds-uninstall fluentbit-rbac-uninstall \
    	fluentbit-configmap-uninstall fluentbit-serviceaccount-uninstall

    KUSTOMIZE_OPTS ?= --load-restrictor=LoadRestrictionsNone
    KUSTOMIZE ?= kustomize $(KUSTOMIZE_OPTS)
    KUBECTL ?= kubectl

    all: install

    install: ns loki fluentbit

    uninstall: fluentbit-uninstall loki-uninstall

    ###########
    # Tooling #
    ###########

    check-cluster-name:
    ifeq (,$(CLUSTER))
    	$(error "CLUSTER not set")
    endif

    GENERATED_FOLDER ?= generated/$(CLUSTER)

    #############
    # Top Level #
    #############

    generated-folder: check-cluster-name
    	mkdir -p $(GENERATED_FOLDER)

    generate: check-cluster-name generated-folder
    	$(KUSTOMIZE) build -o $(GENERATED_FOLDER) overlays/$(CLUSTER)

    clean: generated-folder
    	rm -rf $(GENERATED_FOLDER)/*

    ns:
    	$(KUBECTL) apply -f logging.ns.yaml

    secret: generate
    	$(KUBECTL) apply -f $(GENERATED_FOLDER)/default_v1_secret_fluentbit.yaml

    secret-uninstall: generate
    	$(KUBECTL) delete -f $(GENERATED_FOLDER)/default_v1_secret_fluentbit.yaml || true

    ########
    # Loki #
    ########

    loki: loki-configmap loki-pvc loki-deployment loki-svc

    loki-uninstall: loki-svc-uninstall loki-deployment-uninstall loki-pvc-uninstall loki-configmap-uninstall

    loki-configmap: generate
    	$(KUBECTL) apply -f $(GENERATED_FOLDER)/v1_configmap_loki.yaml

    loki-configmap-uninstall:
    	$(KUBECTL) delete -f $(GENERATED_FOLDER)/v1_configmap_loki.yaml || true

    loki-pvc: generate
    	$(KUBECTL) apply -f $(GENERATED_FOLDER)/v1_persistentvolumeclaim_loki.yaml

    # The PVC (and the log data on it) is only deleted when DELETE_DATA=yes.
    # Uses '=' rather than '==' so the test also works when /bin/sh is not bash.
    loki-pvc-uninstall: generate
    	@if [ "$$DELETE_DATA" = "yes" ] ; then \
    		echo "[info] DELETE_DATA set to 'yes', deleting Loki PVCs..."; \
    		$(KUBECTL) delete -f $(GENERATED_FOLDER)/v1_persistentvolumeclaim_loki.yaml || true; \
    	else \
    		echo "[warn] DELETE_DATA not set, not deleting the Loki PVCs"; \
    	fi

    loki-deployment: generate
    	$(KUBECTL) apply -f $(GENERATED_FOLDER)/apps_v1_deployment_loki.yaml

    loki-deployment-uninstall: generate
    	$(KUBECTL) delete -f $(GENERATED_FOLDER)/apps_v1_deployment_loki.yaml || true

    loki-svc: generate
    	$(KUBECTL) apply -f $(GENERATED_FOLDER)/v1_service_loki.yaml

    loki-svc-uninstall: generate
    	$(KUBECTL) delete -f $(GENERATED_FOLDER)/v1_service_loki.yaml || true

    #############
    # FluentBit #
    #############

    fluentbit: fluentbit-serviceaccount fluentbit-configmap fluentbit-rbac fluentbit-ds

    fluentbit-uninstall: fluentbit-ds-uninstall fluentbit-rbac-uninstall \
    	fluentbit-configmap-uninstall fluentbit-serviceaccount-uninstall

    fluentbit-serviceaccount: generate
    	$(KUBECTL) apply -f $(GENERATED_FOLDER)/v1_serviceaccount_fluent-bit.yaml

    fluentbit-serviceaccount-uninstall: generate
    	$(KUBECTL) delete -f $(GENERATED_FOLDER)/v1_serviceaccount_fluent-bit.yaml || true

    fluentbit-configmap: generate
    	$(KUBECTL) apply -f $(GENERATED_FOLDER)/v1_configmap_fluent-bit-config.yaml

    fluentbit-configmap-uninstall:
    	$(KUBECTL) delete -f $(GENERATED_FOLDER)/v1_configmap_fluent-bit-config.yaml || true

    fluentbit-rbac: generate
    	$(KUBECTL) apply -f $(GENERATED_FOLDER)/rbac.authorization.k8s.io_v1_clusterrole_fluent-bit-read.yaml
    	$(KUBECTL) apply -f $(GENERATED_FOLDER)/rbac.authorization.k8s.io_v1_clusterrolebinding_fluent-bit-read.yaml

    fluentbit-rbac-uninstall: generate
    	$(KUBECTL) delete -f $(GENERATED_FOLDER)/rbac.authorization.k8s.io_v1_clusterrole_fluent-bit-read.yaml || true
    	$(KUBECTL) delete -f $(GENERATED_FOLDER)/rbac.authorization.k8s.io_v1_clusterrolebinding_fluent-bit-read.yaml || true

    fluentbit-ds: generate
    	$(KUBECTL) apply -f $(GENERATED_FOLDER)/apps_v1_daemonset_fluent-bit.yaml

    fluentbit-ds-uninstall: generate
    	$(KUBECTL) delete -f $(GENERATED_FOLDER)/apps_v1_daemonset_fluent-bit.yaml || true
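
The per-resource targets only work because kustomize build -o writes one file per resource with a predictable name. Judging purely from the paths in the Makefile, the pattern is the apiVersion (with the slash turned into an underscore), the kind and the resource name, all lowercased. Here’s a tiny Python sketch of that inferred rule. The function is hypothetical, and other kustomize versions may well name files differently, so check your generated folder before relying on it:

```python
def generated_filename(api_version, kind, name):
    """Hypothetical helper: file name the Makefile expects for one resource.

    Inferred from the Makefile's own paths, not from kustomize documentation.
    """
    return f"{api_version.replace('/', '_')}_{kind}_{name}.yaml".lower()


# (apiVersion, kind, metadata.name) for a few of the resources defined earlier.
resources = [
    ("v1", "ConfigMap", "loki"),
    ("apps/v1", "Deployment", "loki"),
    ("apps/v1", "DaemonSet", "fluent-bit"),
    ("rbac.authorization.k8s.io/v1", "ClusterRole", "fluent-bit-read"),
]
for api_version, kind, name in resources:
    print(generated_filename(api_version, kind, name))
```

This is also why disableNameSuffixHash is set in the kustomization: a hash suffix on the ConfigMap names would make the generated file names unpredictable.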

If you’re running your own Grafana instance like I am, you’re probably trying to manage it with as much infrastructure-as-code as you can, so here are the changes I had to make to my setup:

grafana-provisioning.configmap.yaml

This is the static configuration for Grafana provisioning I use:

    - name: cluster-loki
      uid: "_cluster-loki"
      type: loki
      access: proxy
      version: 1
      orgId: 1
      editable: true
      isDefault: true
      url: "http://loki.logging.svc.cluster.local:3100"

As is probably obvious, this adds a cluster-wide Loki datasource, so that Grafana can make use of it.

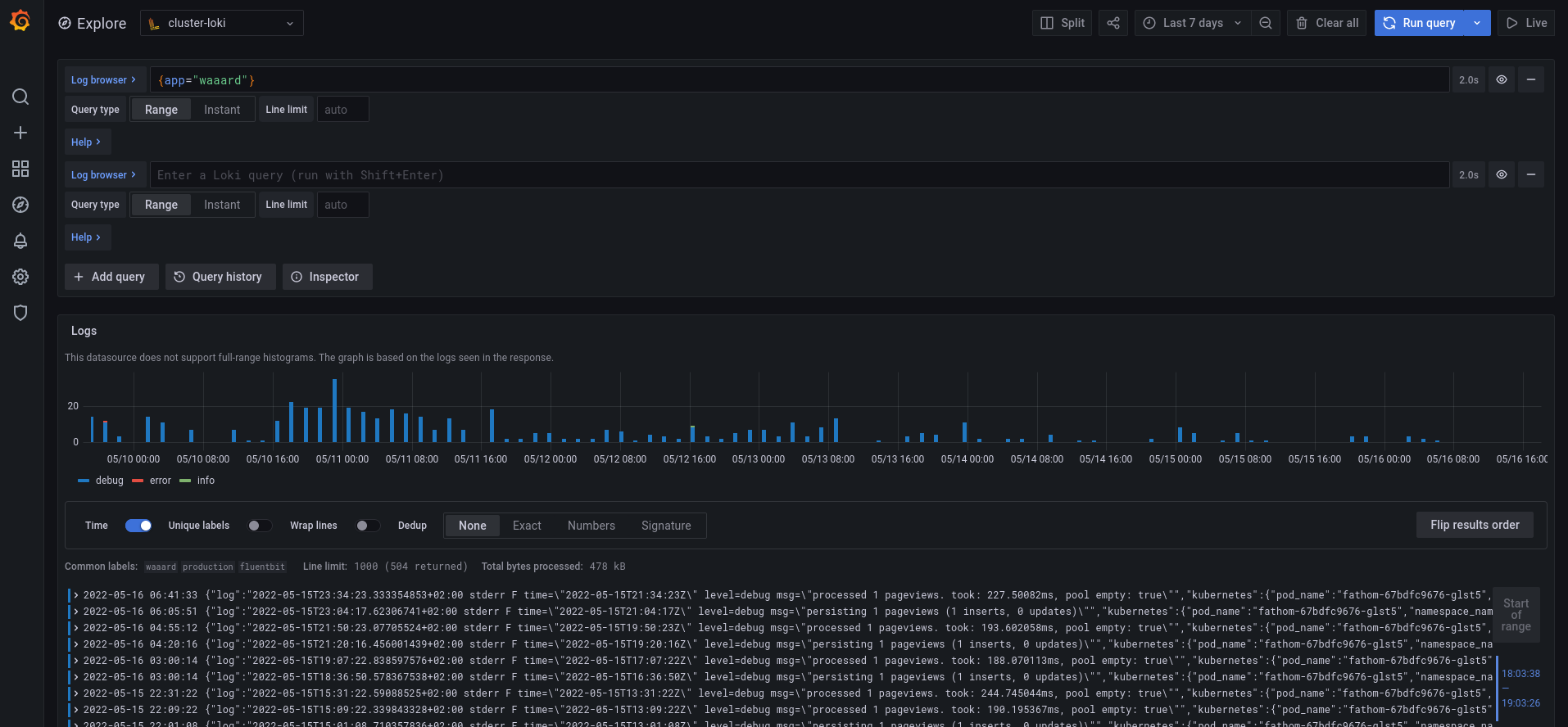

Once you have Grafana set up, you can use the Explore tab to browse logs:
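
Explore is just sending LogQL to Loki under the hood, so you can also hit Loki’s HTTP API (/loki/api/v1/query_range) directly when debugging. Here’s a minimal Python sketch that builds such a request URL. It assumes the same Service URL as the datasource above and the job=fluentbit label from the Fluent Bit output config; the function name and the filter string are just for illustration:

```python
from urllib.parse import parse_qs, urlencode, urlsplit

# Assumption: same in-cluster URL that the Grafana datasource points at.
LOKI_URL = "http://loki.logging.svc.cluster.local:3100"


def query_range_url(logql, start_ns, end_ns, limit=100, base_url=LOKI_URL):
    """Build a query_range URL for a LogQL query over [start_ns, end_ns].

    Timestamps are Unix epoch nanoseconds.
    """
    params = urlencode({"query": logql, "start": start_ns, "end": end_ns, "limit": limit})
    return f"{base_url}/loki/api/v1/query_range?{params}"


# Everything shipped by Fluent Bit that contains the word "error", over one hour.
start = 1649635200 * 10**9
url = query_range_url('{job="fluentbit"} |= "error"', start, start + 3600 * 10**9)
print(url)
print(parse_qs(urlsplit(url).query)["query"][0])
```

The same LogQL string can be pasted straight into the Explore query box.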

While looking around the ecosystem for other logging solutions I happened upon Apache SkyWalking, which looks pretty impressive and mostly complete as a managing solution (UI and all!).

I don’t hear much about it, so it’s a bit interesting, but taking a look at the architecture it doesn’t look too unreasonable (there’s quite a lot of complexity described but a bunch of it is optional).

Unlike Graylog, SkyWalking can be backed by Postgres, and while it’s a newer solution it does look pretty reasonably constructed. I’m curious to see if search can be fully backed by Postgres’s FTS, but it looks like SkyWalking is extensible enough that I could get it done if it doesn’t exist already.

Adding in TimescaleDB support would be amazing too but I don’t want to get ahead of myself… With all the stuff I’m working on I’d probably never get to it!

Well, it’s a pretty straightforward setup. Hopefully it’s been easy to follow and gets you started with Loki.